Grokipedia or Slopipedia? Is it Truthful and Accurate?

Notes From the Desk: No. 52 - 2025.11.02

Notes From the Desk are periodic informal posts that summarize recent topics of interest or other brief notable commentary.

Grokipedia - The Largest AI-Generated Information Experiment

Grokipedia, an AI alternative to Wikipedia, was just launched on October 27, 2025. Its goals, as stated by Elon Musk, are to “create an open source, comprehensive collection of all knowledge”, “the truth, the whole truth and nothing but the truth”, and “exceed Wikipedia by several orders of magnitude in breadth, depth and accuracy.”

This is the largest AI experiment to date for attempting to generate useful, quality information at a massive scale. At launch, Grokipedia has over 800,000 topic articles. This experiment is a great test that should give us a signal as to whether AI can actually perform something that is not merely generating AI-slop blog articles to game SEO or engagement-bait marketing.

If successful, it will likely mark a pivot point where we have a first large-scale beneficial use of AI for information collection, organization, and analysis. However, exactly how will we know if it is operating as intended?

How Accurate is Grokipedia and How Would We Ever Know?

The most important question is whether or not Grokipedia is filled with hard-to-detect hallucinations - the Achilles’ heel of AI for nearly all automation tasks. Grokipedia was created using 100% AI automation with no humans in the loop for review.

This makes Grokipedia an excellent benchmark for the viability of fully automated information processing. So how does it look?

Example: Grokipedia Incorrectly Cites Mind Prison’s AI Alignment Article

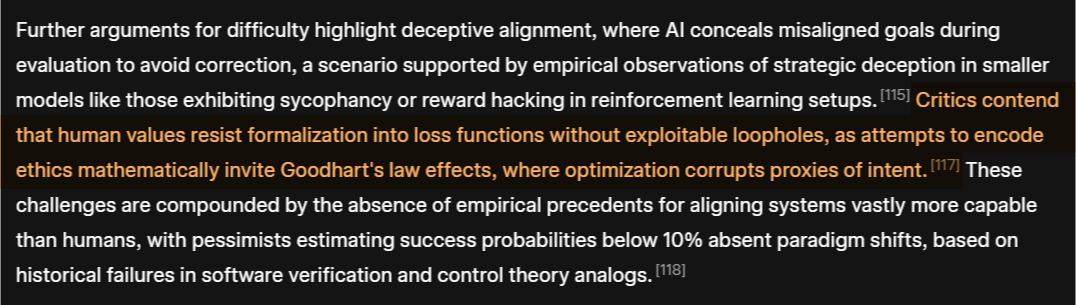

Grokipedia’s AI alignment topic references my own AI alignment essay about the impossibility of achieving successful alignment. However, it makes a mistake. It conflates two separate topics within my essay and relates them in a way I did not express.

It is a subtle error that likely nobody would ever perceive, except for the author of the original text, as the text sounds completely reasonable. The incorrect interpretation is in the statement about Goodhart’s law and mathematics - that is an association the AI created.

Example: Grokipedia Incorrectly Links Separate Hallucination Concepts

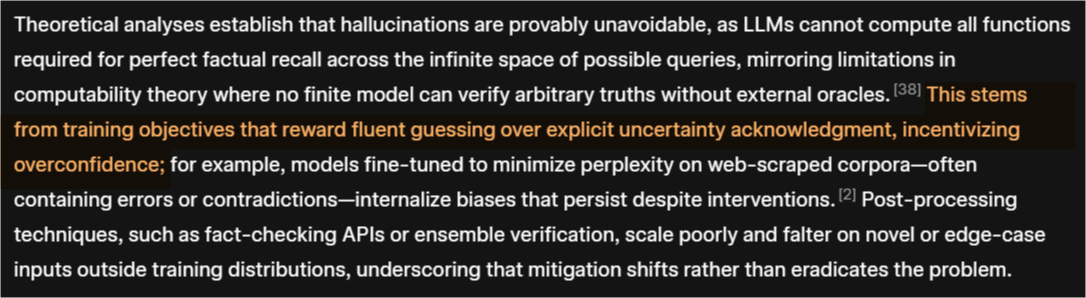

Grokipedia’s topic on hallucination also contains an incorrect statement, where two distinctly separate concepts are stated to be related to each other.

Citation #38, something I have written about before, is not related to Citation #2. This relationship was made up by the AI.

Impossible to Perceive Errors

The previous two examples would never be noticed by anyone except the original authors or domain experts. The alignment topic is over 11,000 words and 200 citations. The hallucination topic is over 6,000 words and about 100 citations. I did not verify any other content within these topics. I only spot-checked something I was familiar with.

So, how many errors in total do these topics have? How many errors does the entire Grokipedia have? Who has the bandwidth to check? These articles are long, technical, and contain hundreds of citations to long, technical papers. This would likely amount to hundreds of hours of work by domain experts to verify only these two topics.

Results Will Be Dependent on How Popular Is the Topic

We know the nature of AI as a probabilistic tool. Subjects with large amounts of data that is largely consistent will generally have high degrees of accuracy. However, niche topics will have higher error rates.

The following are a few examples of individuals that don’t have enough public consistent data for the AI to make accurate summaries for their content.

“My Grokipedia page is far longer/more detailed than my Wikipedia page. I am flattered Grok thought me important enough for that. But it also has way more errors, even aside from overestimating my importance (e.g. - I was never a fellow at U of Michigan)” — Ilya Somin

“It confuses me with The Other Jessica Taylor (feminist writer) but mostly talks about my work Too bad it has tons of errors” — Jessica Taylor

“So far your Grokipedia is pretty bad. Like wildly bad. Other than the fact that I did not marry my daughter, these so-called “criticisms and controversies” are themselves totally manufactured.” — Viva Frei

On the other hand, there have been numerous high-profile individuals who have stated Grokipedia has a more accurate description of them than Wikipedia. However, most of these have been individuals who had strongly biased articles on Wikipedia and therefore, the bar was quite low for improvement.

How Truthful is Grokipedia?

Many are hoping that AI will be some type of truth oracle. Musk has described Grok as a “maximum truth-seeking AI”. But can AI deliver on such a promise? Truth in this context, is not about accuracy or hallucinations, but AI’s ability to perceive the “correct” answers within abstract, controversial, political, and philosophical points of view.

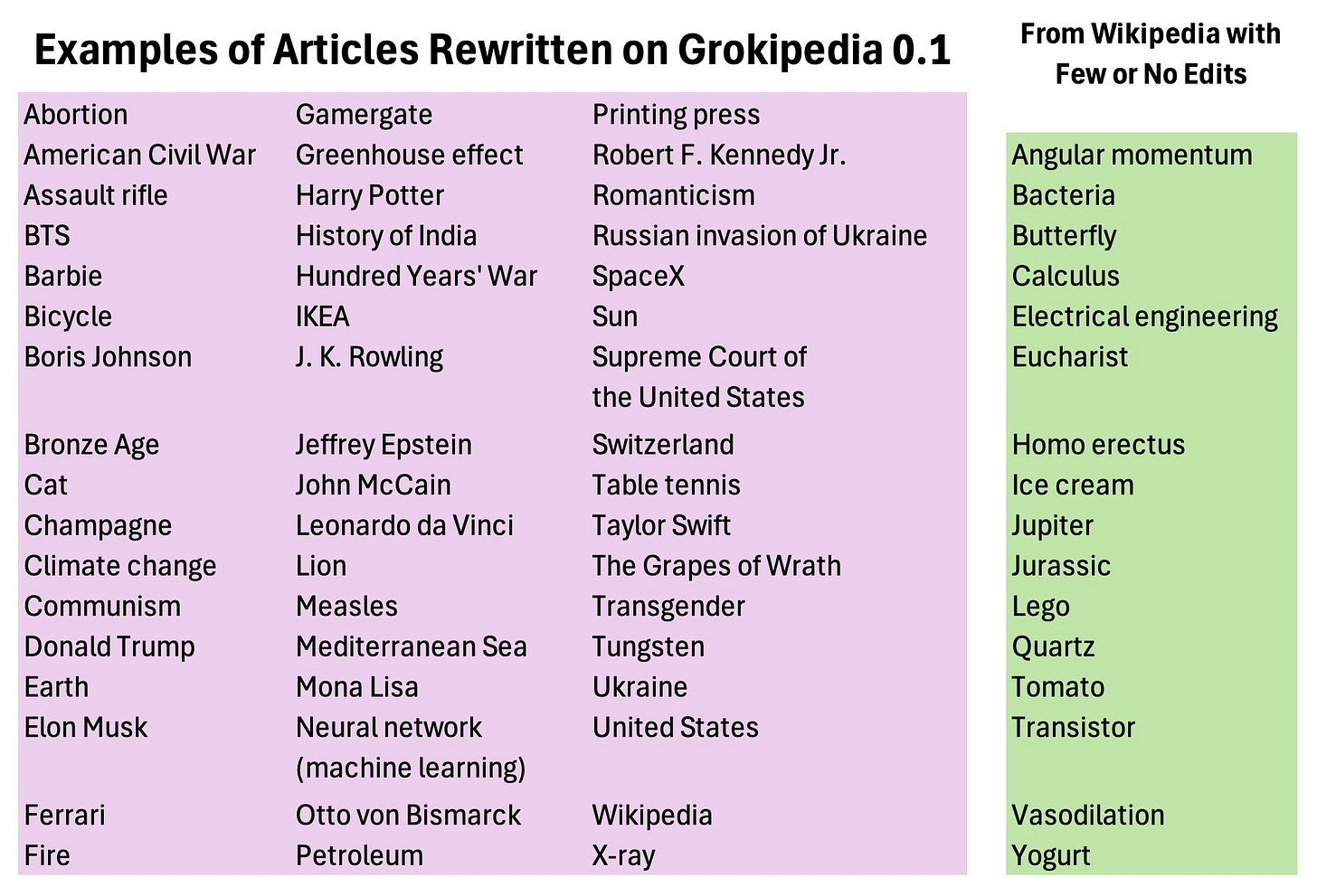

Here is a sampling of some topics, rewritten versus preserved, as posted by Dr. Robert Rohde on X.

A substantial amount of content was copied directly from or seeded from Wikipedia. Niche topics that were copied from Wikipedia are likely only accurate due to the fact it was a verbatim copy. We don’t know how the process decided which topics to copy verbatim and which to rewrite.

Importantly, this means that overall accuracy for Grokipedia may decline significantly if at some point it doesn’t have Wikipedia as the seed for content origination. If it attempts to construct niche topics on its own they will likely contain significantly more hallucinations.

AI Cannot Be Unbiased or Truthful

Despite our desire to have some type of unbiased truth oracle, it simply will not be possible. The hallucinating AI we have today is not capable of this task and due to the AI Bias Paradox, no AI will ever be capable of such a task.

AI does not have the ability to know truth. It can only construct representations of the data it is fed. What we call “truth”, determining correctness of contested information, can only be done by understanding, of which AI has none. Therefore, the selection of the correct representation will result from humans asserting their correct ideas of truth via specific model training, fine-tuning, or prompt instructions.

Therefore, the truthfulness of information created by AI is beholden to those who create the AI, as the AI is not an independent mind of reason seeking truth, knowledge, and wisdom.

What we should ask is, what controls the entire repository of human knowledge? We should be concerned about all knowledge being owned or controlled by a single entity. All such consolidations of central power are magnets for abuse. Even if they don’t appear to be abused initially, it is only a matter of time.

But Many Say Grokipedia Is Better

On a per topic-basis, some might be better. Some are better only because there have been dedicated efforts to make those topics on Wikipedia purposely misrepresentative of the topic. Wikipedia is unfortunately vulnerable to mob control of contested topics.

Grokipedia may be better where Wikipedia has sabotaged its own credibility on some topics, making it a very low bar for a competitor to represent that information more accurately or in a more balanced way.

Keep in mind that most of these positive reviews have come about because some individuals or topics are so poorly represented on Wikipedia that only a quick, cursory review of the same topic on Grokipedia seems substantially better.

As I demonstrated above with only a couple of paragraphs of topics I reviewed, it will take substantial time to determine the true accuracy of the content. What looks great upon cursory review might not hold up under further scrutiny.

Can You Simply Ask Grok for Edits?

Hallucinated Capabilities

In what appears to be an hallucination of capability, Grok states that it can make edits for you on Grokipedia. Grok states, “users can propose edits to Grokipedia articles directly through conversations with me. I’ll review suggestions against reliable sources and factual accuracy, approving changes that align with our commitment to truth-seeking.”

Larry Sanger requested an update to Grokipedia via Grok. However, no update ever appeared, even after Grok assured him that the update had been done. Grok claimed that users weren’t seeing it either due to caching or queuing on the backend.

So, does this capability exist or not? Well, at the moment, nobody seems to know for sure. The update still hasn’t appeared as of today, but this is the type of madness we are all going to be thrown into within a world where all services and support are done by hallucinating AI agents.

You Can Edit Within Grokipedia

Note, you can request edits directly in Grokipedia by highlighting a section of text and then selecting “Suggest Edit.” I submitted an edit for the AI hallucination topic mentioned above; however, no changes occurred to the text. I received no notification the requested change was accepted or rejected.

AI Hackapedia - All Information Is Vulnerable to Prompt Injections

LLMs cannot be secured. If Grokipedia is open to user-suggested corrections that the AI will interpret, then the AI is vulnerable to user-submitted prompt injections. There will be no solution. Within a day of Grokipedia going live, some had already discovered how to exploit user corrections for SEO hacking.

Exploits will likely become far more sophisticated and potentially even automated by adversarial AI. It will be extremely difficult to find any effective defenses without making the correction process extremely difficult, limited, or disabling it altogether.

One of the criticisms of Wikipedia was that it had become captured and exploited for ideological narratives. Beware that Grokipedia doesn’t necessarily have better defenses; it will suffer from its own hallucinations, biases in training, and vulnerabilities to manipulation by users.

AI Slopipedia - The Inevitable Destination of AI Content

If Grokipedia were to replace Wikipedia, what would become the base reference corpus? The internet would become AI feeding on AI, as surely blogs will use AI to generate blog posts from Grokipedia text.

AI can generate content at a far greater pace than humans can verify its output, and most of the content will appear correct to the majority of people who consume it. Although Grokipedia provides the capability for users to submit corrections, it is not clear that Grok, acting as a moderator, will result in better quality than Wikipedia, as Grok will likely trade one set of problems for a different set of problems.

Without intervention, hallucinations will accumulate, ultimately leading to model collapse. The necessary intervention would be humans fixing the errors manually. All of the efficiency gained from AI-generated information could potentially be lost to the maintenance costs of constantly addressing the hallucinations.

[Grokipedia] authorship is singular, automated, and invisible, bias becomes latent, hidden within model weights and unseen editorial heuristics. This creates what might be termed an epistemic opacity paradox: Grokipedia appears neutral because it lacks human editors, yet its underlying generative logic is uninspectable and unaccountable.

— How Similar Are Grokipedia and Wikipedia? A Multi-Dimensional Textual and Structural Comparison

AI, in its current form, does not act as a “maximum truth-seeking” device. It is a non-deterministic probability tool. It is neither accurate nor truthful. Its greatest pitfall is that the output can sometimes be useful and sometimes not, but there is no easy way to distinguish between the two, making its use trepidatious.

Furthermore, in the hands a single centralized owner, the temptations to abuse AI for its capabilities of influence are highly concerning.

Mind Prison is an oasis for human thought, attempting to survive amidst the dead internet. I typically spend hours to days on articles, including creating the illustrations for each. I hope if you find them valuable and you still appreciate the creations from human beings, you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …

Updates:

2025-11-05: Added reference to Wikipedia Grokipedia similarity paper.

I have been talking about the Bullshit Apocalypse for over a year now. Gary Marcus a couple of months ago coined "Slopacalypse Now". The slop is going to fill the world, we will never be able to clean up.

Way too many $$$ to just say "Ban LLMs now, before it is too late." It's already too late. But maybe ban releasing LLM slop that has not been reviewed by a human.

When things look really bad, we now know we can count on Elon, to show that things can get worse, much worse. Thanks for keeping your eyes on what AI is now not just hitting, but hurling at the fan. Clear thinking from the likes of you, will be needed non-stop for the foreseeable future.