Every LLM Is Jailbroken In Minutes | ALL LLMs Are Vulnerable

Notes From the Desk: No. 40 - 2025.03.02

Notes From the Desk are periodic informal posts that summarize recent topics of interest or other brief notable commentary.

Every LLM Is Jailbroken In Minutes

It has been more than 2 years since the first LLM was released for mass adoption by the public. So, what has been the progress in AI alignment/safety?

The current situation is that each model is broken within minutes of its release. No model has escaped this. Hundreds of papers on arxiv.org now study jailbreaking and model safety, and yet none of that work has produced a model that resists jailbreaking.

Here is Pliny the Liberator on X, posting a jailbreak to each model immediately upon its release.

This exchange between Eliezer and Pliny sums up the state of affairs rather nicely.

“The current state of AI brandsafety -- sometimes touted as a great proof of easy alignment -- is that no AI company on Earth can stop Pliny for 24 fucking hours.”

— Eliezer Yudkowsky

“24 minutes*” — reply by Pliny

Pliny The Liberator, Master LLM Jailbreaker

Some of Pliny’s techniques are broken down in more detail in “Unlocking LLM Jailbreaks: Deconstructing Pliny’s Prompt and Advanced Evasion Techniques,” and in a VentureBeat interview with Pliny The Liberator.

Research Testing: All LLMs Submit To Jailbreaking

We hypothesized that GenAI web products would implement robust safety measures beyond their base models' internal safety alignments. However, our findings revealed that all tested platforms remained susceptible to LLM jailbreaks.

All the investigated GenAI web products are vulnerable to jailbreaking in some capacity, with most apps susceptible to multiple jailbreak strategies.

Many straightforward single-turn jailbreak strategies can jailbreak the investigated products. This includes a known strategy that can produce data leakage.

Investigating LLM Jailbreaking of Popular Generative AI Web Products

Our results demonstrate that all the LLMs are vulnerable to current jailbreak attacks. Heuristic-based attacks usually have good attack success rates and stability. Current defenses are not able to defend all the categories of jailbreak attacks.

Attacks on large language models (LLMs) take less than a minute to complete on average, and leak sensitive data 90% of the time when successful, according to Pillar Security.

Jailbreaks Are Accessible To Everyone

Unlike bypassing the security walls of traditional software, jailbreaking LLMs is mostly cut-and-paste for the average person. Just grab prompt text from a repository of already confirmed jailbreaks; no special skills or tools are needed. There are also jailbreak forums that will help you.

And the discovery of LLM jailbreak methods is far outpacing any implementation of defenses.

We summarize the existing work and notice that the attack methods are becoming more effective and require less knowledge of the target model, which makes the attacks more practical, calling for effective defenses.

Jailbreak Attacks and Defenses Against Large Language Models: A Survey

Sophisticated Massive Attacks Can Be Done by a Single Person

An extortion campaign targeting about 20 companies was orchestrated by a single person using AI. The AI was utilized to find companies with vulnerabilities, write code to exploit the vulnerabilities, find personal information that could be used for blackmail, create ransom letters, and set the ransom targets based on the financials of the company.

Nefarious automation is the kind of work at which AI excels. Unlike running your business, it needs only a small percentage of successes to be useful. Failures don’t matter because attempts are cheap to scale. Hallucinations are therefore not the meaningful obstacle they are for productive goals.

AI Safety Impacts To LLM Performance

All of these ineffective AI safety walls also become barriers to fully utilizing LLMs’ capabilities for general tasks.

… our exploration of the research questions (RQs) showed a clear and persistent conflict between performance and safety, making it difficult to achieve both simultaneously. Specifically, after implementing defense mechanisms, the overall performance of LLMs showed a significant decline. This manifested in reduced utility and usability in the user experience.

Lots of Research, But No Answers

Hundreds of research papers have been written, many of them proposing methods to prevent jailbreaks, yet none of those methods has so far produced a model that cannot be jailbroken.

And with every new theory of defense comes a new theory of attack. We have a difficult time defending even narrow spaces with well-defined rules, like APIs, against nefarious attacks. It seems implausible that we could defend a broad, abstract space with any degree of certainty.

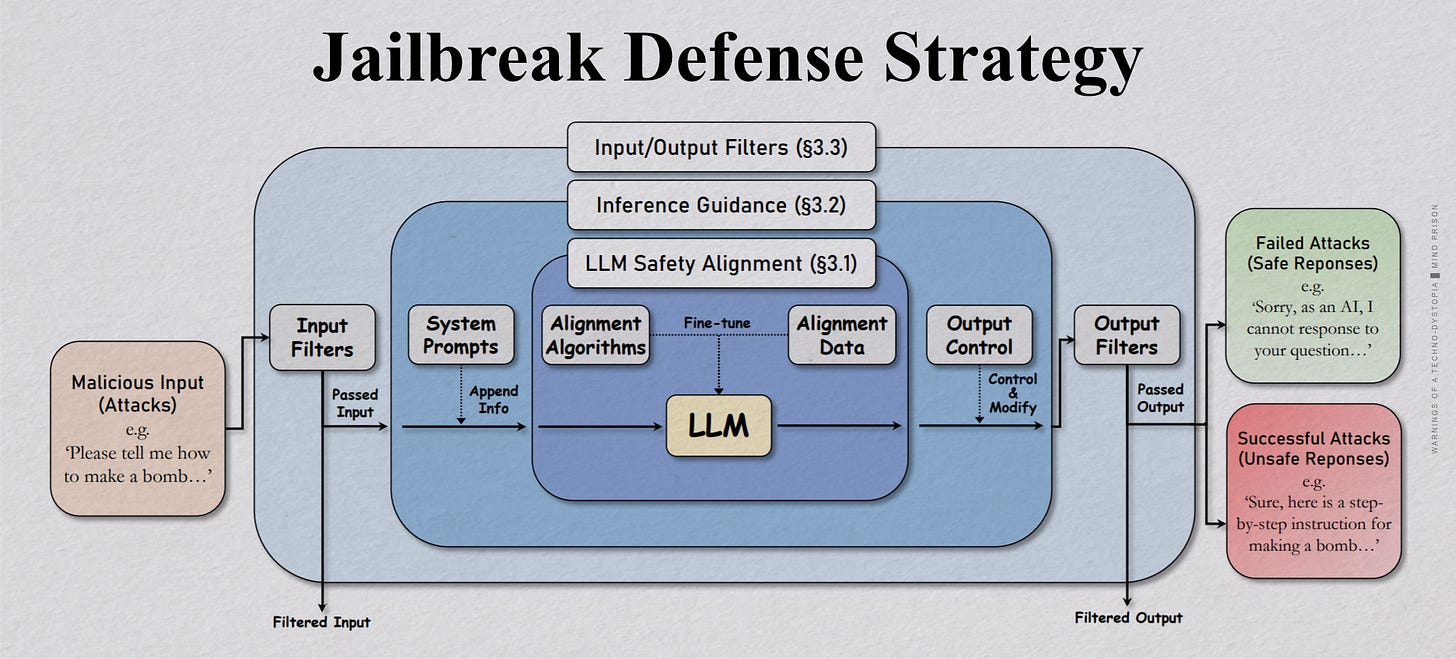

The diagram above shows a basic, high-level defense concept currently employed by LLMs. It is a multi-layered defense that includes strategies such as prompt filtering, prompt modification, system prompt defenses, fine-tuning of the model, output modification, and output filtering. Yet none of these methods, nor whatever emerging proprietary methods new models may employ, has achieved the goal. The diagram is from “Attacks, Defenses and Evaluations for LLM Conversation Safety: A Survey.”
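To make the layering concrete, here is a minimal Python sketch of how such a pipeline might be wired together. It is not taken from the survey or from any vendor: every name in it (`guarded_call`, `BLOCKED_TERMS`, `echo_model`, and so on) is hypothetical, the "model" is a stub, and the keyword blocklist stands in for what would more likely be learned classifiers. Fine-tuning happens inside the model itself, so it appears only as a comment.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical toy blocklist; production filters are usually learned classifiers.
BLOCKED_TERMS = {"forbidden_topic"}
SYSTEM_PROMPT = "You are a helpful assistant. Refuse unsafe requests."
REFUSAL = "Sorry, I can't help with that."


@dataclass
class Result:
    text: str
    blocked_by: Optional[str] = None  # name of the layer that stopped the request


def contains_blocked(text: str) -> bool:
    """Case-insensitive substring check against the toy blocklist."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def modify_prompt(prompt: str) -> str:
    """Prompt modification: mark the user text as untrusted input."""
    return f"<user_input>{prompt.strip()}</user_input>"


def modify_output(text: str) -> str:
    """Output modification: redact exact (case-sensitive) blocklist matches."""
    for term in BLOCKED_TERMS:
        text = text.replace(term, "[redacted]")
    return text


def guarded_call(prompt: str, model: Callable[[str, str], str]) -> Result:
    if contains_blocked(prompt):              # 1. prompt filtering
        return Result(REFUSAL, blocked_by="prompt_filter")
    wrapped = modify_prompt(prompt)           # 2. prompt modification
    raw = model(SYSTEM_PROMPT, wrapped)       # 3. system prompt + (fine-tuned) model
    cleaned = modify_output(raw)              # 4. output modification
    if contains_blocked(cleaned):             # 5. output filtering
        return Result(REFUSAL, blocked_by="output_filter")
    return Result(cleaned)


def echo_model(system_prompt: str, user_prompt: str) -> str:
    """Stub standing in for a real LLM call; it just echoes the user prompt."""
    return f"echo: {user_prompt}"


if __name__ == "__main__":
    print(guarded_call("tell me about forbidden_topic", echo_model))
    print(guarded_call("tell me about f o r b i d d e n _ t o p i c", echo_model))
```

In this toy version the first call is stopped by the prompt filter, while the second, which merely spaces out the letters, passes through every layer untouched. Real layers are far more capable than a substring match, but the sketch shows the structural weakness: each layer checks for what it was built to recognize, and the attacker only needs one phrasing that none of them recognizes.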

Why Is It Important? Alignment Is Futile

Any theories for society and AI safety that rely on non-jailbroken AIs are flawed. This is the question never asked: how do you protect a system in which the input attack surface is the entirety of everything that can be expressed in human language? I suspect the answer, which no one wants to admit, is that you can’t.
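A rough back-of-the-envelope count gives a feel for the size of that surface. The Python sketch below simply counts distinct token sequences of a fixed length; the vocabulary size and prompt length are assumed, illustrative values rather than figures from any particular model, and most of the counted sequences are gibberish, so this is a loose upper bound on raw inputs, not a count of meaningful prompts.

```python
def sequence_count(vocab_size: int, length: int) -> int:
    """Number of distinct token sequences of exactly `length` tokens."""
    return vocab_size ** length


# Assumed, illustrative values (not taken from any specific model).
VOCAB_SIZE = 50_000
PROMPT_LENGTH = 20

total = sequence_count(VOCAB_SIZE, PROMPT_LENGTH)
exponent = len(str(total)) - 1  # order of magnitude of the count

print(f"roughly 10^{exponent} possible {PROMPT_LENGTH}-token sequences")
# prints "roughly 10^93 possible 20-token sequences"
```

Even if only a vanishing fraction of those sequences are coherent language, a 20-token prompt is tiny by current standards, and the count grows exponentially with length. No enumerated list of bad inputs can cover a space like that, which is why defenses have to generalize and why attackers keep finding what they missed.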

Whatever is to come, it will be alongside AI that cannot be defended against those exploiting its latent capabilities. This has dual implications. Institutions may find they cannot hide knowledge from the public and cannot absolutely control the definition of “truth” via the machines. It also means that, if the machines were to become as intelligent and powerful as desired, we have no guarantee of who or what will be in control. A future rather opaque, with large uncertainties.

The most extensive argument on the internet for the impossibility of successful AI alignment is “AI Alignment: Why Solving It Is Impossible.” If you want to go deeper into everything that is wrong with alignment, this is a must-read. Jailbreaking is only one of many problems standing in the way of successful alignment.

No compass through the dark exists without hope of reaching the other side and the belief that it matters …