Intelligence Is Not Pattern-Matching | LLM AI Is Probabilistic Pattern Recognition | How to Perceive the Difference

Perceiving The Difference: LLMs And Intelligence

AI has significantly blurred the perception of what constitutes intelligence. Many struggle to perceive the distinction between intelligence and pattern-matching, and some even question whether a distinction exists at all.

This was also evident after I published my recent essay, “We Have Made No Progress Toward AGI,” which explains the shortcomings of LLMs compared to human intelligence and received noticeable attention on Reddit and Hacker News.

The most common dismissals of the argument came in two forms: first, the assertion that we humans are nothing more than pattern-matchers ourselves; and second, the claim that we, just like LLMs, are unable to correctly articulate how we reason, which would make us hallucinators as well.

This is not a case of a distinction without a difference. The distinction, although sometimes difficult to perceive, instrumentally matters, as it dictates what we can do that LLMs cannot and never will.

We reason by understanding the rules that create observable behaviors, whereas LLMs “reason” solely by processing observable behaviors (data) into a matrix of probabilities for their occurrence. This fundamental difference is the separation that exists between intelligence and a heuristic map. Intelligence is the map builder, and LLMs are the map readers.

The Argument: But Humans Cannot Do That Either

When a failing of reasoning is pointed out for LLMs, a common counterresponse is that humans have the same failing. Therefore, the argument implies that these failures are not distinguishing factors between LLMs and humans, and consequently, there is no justification for criticism against the AI.

The remaining implication is that either one, we humans are essentially statistical pattern-matchers, just like LLMs; or two, LLMs, in fact, do reason just like humans by some mechanism we can’t explain, which must arise from some esoteric emergent behavior of statistical pattern-matching at a grand scale.

Humans Are Instrumentally Different As We Perceive Our Limitations

Human failures have some degree of predictability. We know when we are not good at something, and this awareness substantially sets human failures apart from those of LLMs, because it allows our failures to be managed. We have enough understanding to perceive the potential for undesirable outcomes, so we take corrective actions, such as using tools, processes, oversight reviews, or other methods, to ensure we achieve a target level of reliability.

It is only due to this level of understanding that we can implement methodologies such as Six Sigma. It would be impossible without self-awareness of our limitations and imperfections.

Common Criticisms of LLMs as Non-intelligent:

The following are typical criticisms from the comments sections of public forums discussing the essay, “We Have Made No Progress Toward AGI”:

The hard part is that for all the things that the author says disprove LLMs are intelligent are failings for humans too.

* Humans tell you how they think, but it seemingly is not how they really think …

I don't dispute that these are problems, but the fact that its hallucinations are quite sophisticated to me means that they are errors humans also could reach.

> LLMs are probability machines.

So too are humans, it turns out. Humans can't explain how they reason either. They are justifying after the fact i.e. hallucinating. Anyway blah blah pointless trash article.

To conclude from this that LLMs aren't actually intelligent is insane. Many universally acknowledged intelligent people with amazing intuition can't explain their reasoning. I guess that makes them "merely statistical models" according to the paper.

I don't discount your intelligence just because you can't explain every bit of an MRI; why apply a double standard to language models?

most human reasoning is rationalization after the fact … most of their lives are still heuristics based

Let’s examine these common criticisms further.

Are We Just Heuristics And Probabilities?

This is a rather common viewpoint often expressed when the intelligence of LLM AI is challenged: maybe we, too, are nothing more than a large set of probabilities.

Although probabilistic reasoning is part of our overall capabilities, we are capable of much more, which is why we can perform tasks when no prior pattern or example has been provided.

We can understand concepts from the rules alone; LLMs must train on millions of examples. A human can play a game of chess by reading the instruction manual without ever witnessing a single game. This is distinctly different from pattern-matching AI.

When we operate from an understanding of rules, there is no data, no information from which to create statistical patterns. Instead, we are the creators of information: information is downstream of the process of understanding. The data is what chess moves and strategies, which will only later be invented, leave behind. These are the products of understanding rules.

Are We Unable To Describe Our Reasoning?

In the essay, it is shown that LLMs cannot accurately describe the reasoning steps they take to produce an answer. A common counter to this argument is that humans also cannot describe the method we use to arrive at an answer. Is this true?

The Argument: We Cannot Self-Reflect at the Cellular Level

One basis for the counterargument is that humans cannot perceive the cellular mechanisms that make up their reasoning, with some citing that we could not describe the observed mechanisms of an MRI. However, this is not an analogy at the appropriate level of abstraction.

The LLM was not asked to describe its method of reasoning at such a low level of operation; it was not asked to describe the model layers, neurons, or the information flow through the network. It was only asked to describe the high-level process, which would contain the semantic information that could be understood by others.

The Argument: We Do Not Comprehend Our Own Reasoning

So, is it possible for humans to describe their reasoning process in any capacity? Do we internally process logical decisions as some heuristic and then afterward rationalize them as some logical process? The arguments for rationalization likely come from information such as this:

Rationalization is a psychological defense mechanism that we all use at some point in our lives, often without realizing it. It involves justifying thoughts, feelings, or behaviors with logical reasons to avoid confronting the true, often uncomfortable, underlying causes. — Understanding Rationalization as a Defense Mechanism

People rationalize the choices they make when confronted with difficult decisions by claiming they never wanted the option they did not choose. — The neural basis of rationalization

However, rationalization generally arises in contexts of conflict or emotion, such as impulsive decisions. While there are many human failings in the decision-making process, these don’t preclude our ability to generally articulate and understand our thoughts when we apply deliberate attention to logical tasks.

Transferability Demonstrates Accurate Understanding of Our Reasoning

How can we know this to be true without having to analyze every study detailing failings of the human mind? How can we test if an articulated reasoning process is accurate?

If our perception of our own reasoning were hallucinated, or flawed, then if we dictated that reasoning process to other humans, they would not be able to replicate our results. However, this is clearly not the case.

For humans, our understanding and the ability to articulate the reasoning process must be in sync in order for that capability to be transferred to another human being. No matter what failings we may have in some contexts, we are clearly capable of producing a repeatable and verifiable reasoning process.

Furthermore, we can also observe that when a reasoning process such as arithmetic addition is transferred to another person, it carries no edge cases for unseen permutations of data. The process will be accurate for numbers of any arbitrary length. We aren’t transferring heuristics.
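To make the addition point concrete, here is a minimal sketch in Python. The function names and the lookup table are hypothetical and exist only for illustration. The table is a deliberate caricature of memorization, not a model of how an LLM works internally. The first function encodes the carry rule we teach each other in school; the second only knows the sums it has already seen.

```python
def add_by_rule(a: str, b: str) -> str:
    """Schoolbook addition on decimal digit strings: right to left, with a carry."""
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)  # pad the shorter number with leading zeros
    carry = 0
    digits = []
    for da, db in zip(reversed(a), reversed(b)):
        carry, digit = divmod(int(da) + int(db) + carry, 10)
        digits.append(str(digit))
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))


# Hypothetical "memorizer": a table of every sum of two numbers below 100.
seen_examples = {
    (str(x), str(y)): str(x + y) for x in range(100) for y in range(100)
}


def add_by_lookup(a: str, b: str):
    """Return a memorized sum, or None for any pair outside the table."""
    return seen_examples.get((a, b))
```

Calling `add_by_rule("12345678901234567890", "98765432109876543210")` works even though neither number was ever "seen," because the rule itself was transferred. `add_by_lookup("100", "1")` returns `None` the moment an operand leaves the table.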

Without Self-Reflection There Is No Self-Improvement

A system with no self-reflection could never improve upon itself. This unique capability of intelligence is what allows us to perceive what we know, what we do not, what we are capable of doing, and what we cannot.

If we could not properly self-reflect over our own processes of reasoning, knowledge, and capabilities, it then would not be possible to improve upon them. If our perception of our own methods were only a hallucination, we would continually make the wrong choices.

It is, therefore, self-evident that we are able to interpret our methods sufficiently to the granularity required to evolve them. Humans are capable of transforming civilizations from sticks and stones to rocket ships by consuming knowledge we create and improving upon it, but a machine like current AI, with no understanding, will suffer model collapse iterating over its own information.

Why We Struggle To Perceive The Difference

It is so difficult for people to perceive the reality. LLM AI is the most difficult-to-comprehend technology ever created. It is such a magnificent pretender of capability. It is just good enough to fully elicit the imagination of what it might be able to do, but never will.

“Any sufficiently advanced technology is indistinguishable from magic”

— Arthur C. Clarke

“Any sufficiently advanced pattern-matching is indistinguishable from intelligence”

— Mind Prison

Advanced technology is not, in reality, magic: it cannot literally do anything, and it has limits. Pattern-matching is of the same nature; it can convincingly appear to be more capable than it is, but it is nonetheless bound by the limits of its architecture.

Benchmarks and Demos Will Continue to Misrepresent Capability

As such, benchmarks and demos will significantly misrepresent real-world capabilities. Essentially, we say the AI is training on data, but stated differently, it is training on the answers. It becomes more of a master of trivia in a sense. This does make for some very useful utility, but it is distinctly different from the capability provided by intelligence.

Type 2 [semantic exploration] is what is necessary for revolutionary discoveries: major breakthroughs in health, energy, or new architectures and engineering designs not based on existing ones. These things still remain in the human domain and will not be coming from any of the currently existing AI architectures.

Why It Is Critically Important

Essentially, pattern-matching can outperform humans at many tasks, just as computers and calculators can outperform humans at specific tasks.

So, it is not that LLMs can't be better at tasks; it is that they have specific limits that are hard to discern, as pattern-matching on the entire world of data is like an opaque tool in which we cannot easily perceive where the cliffs are, and we unknowingly stumble off the edge.

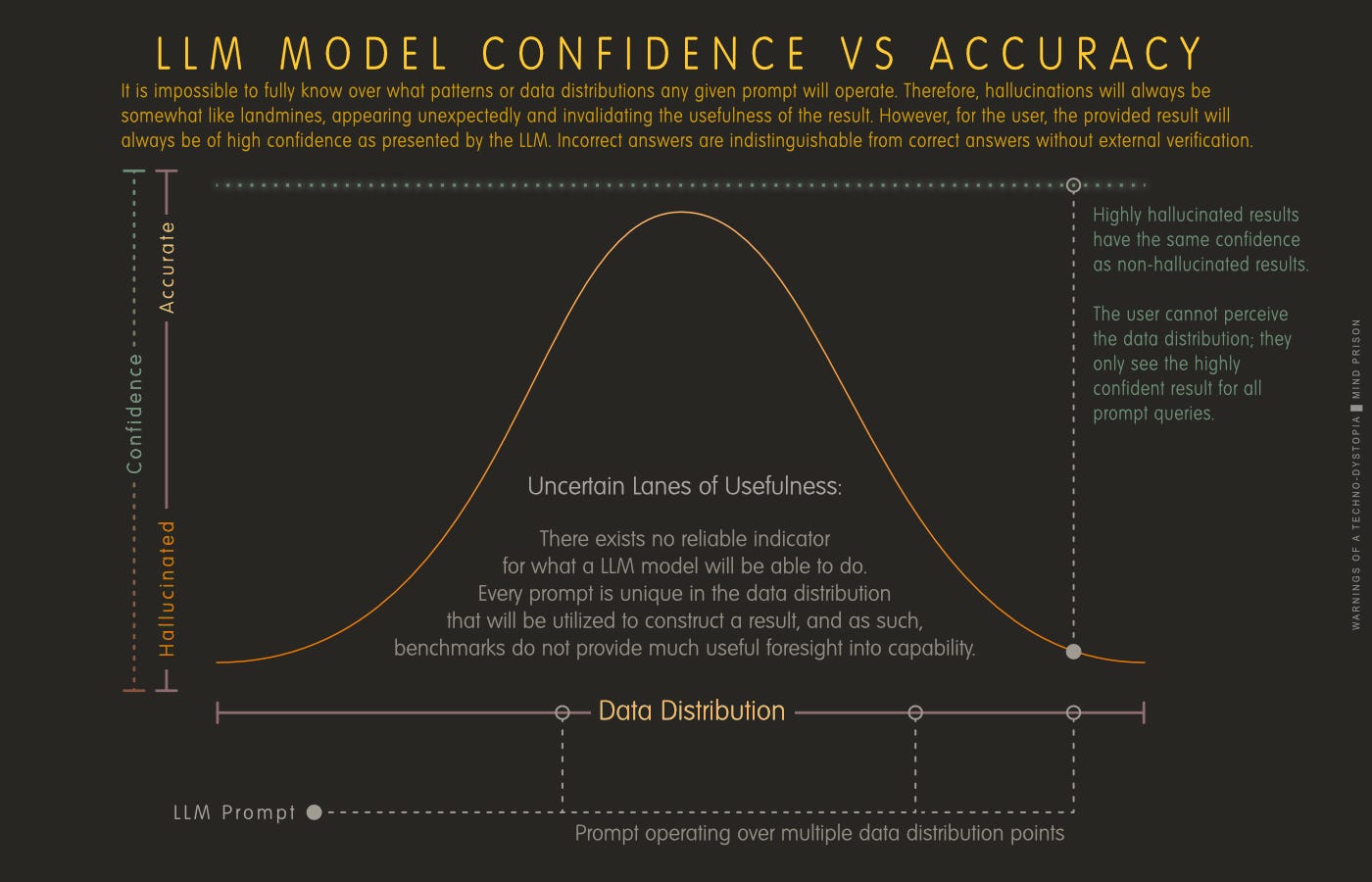

The chart above represents this aspect visually. The dashed line at the top represents the confidence of the output. The distribution curve of accuracy ranges between hallucinations and accurate results, but the confidence is defiantly a flat line at maximum value, regardless of accuracy. The divergence between these two lines is the most important concept to understand in order to fully grasp the implications of LLM behavior.

LLM AI Is a Non-deterministic Probability Tool: Use Carefully

It is a nondeterministic type of tool. It is always a probability calculation, and it is impossible to know, for any given prompt, where it lands within the data distribution. The tripwire is that wrong answers share equal confidence with correct answers.
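A toy sketch of that tripwire, in Python. The probabilities and the prompt are invented for illustration, and a real model samples tokens rather than whole answers. Still, the shape of the problem is the same: the answer is drawn from a distribution, and the sentence that comes out is phrased identically whether the draw was right or wrong.

```python
import random

# Hypothetical answer distribution for a single prompt; the numbers are invented.
answer_probs = {"Paris": 0.80, "Lyon": 0.15, "Marseille": 0.05}


def sample_answer(rng: random.Random) -> str:
    """Draw one answer by weighted sampling and state it with the same assertive phrasing."""
    answers = list(answer_probs)
    weights = list(answer_probs.values())
    choice = rng.choices(answers, weights=weights, k=1)[0]
    # Nothing in the returned text reveals how probable the chosen answer was.
    return f"The capital of France is {choice}."


rng = random.Random()  # unseeded: repeated runs of the same "prompt" can differ
for _ in range(5):
    print(sample_answer(rng))
```

Run it a few times and the occasional wrong city shows up in exactly the same sentence as the right one. Only a check against something outside the sampler can tell them apart.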

Therefore, using any output from these machines without some other method of verification should be considered improper use, and using these tools completely unmonitored to mass-generate blogs, papers, etc., should be seen as actions that will destroy the integrity of civilization’s information repositories.

Worth reading Iain McGilchrist's The Master and His Emissary. It accounts for quite a bit of the confusion here. Some folks are truly locked in the "left hemisphere," as it were. They can't tell the difference between human intelligence and AI, likely because most of their own thinking is pretty close to AI. Those with a more rounded intelligence spot it right away and struggle to get the message across to the others. You'll notice that the defenders of AI tend to fly into rages. That's a marker of what I call leftbrainitis, as is "confabulation," i.e., making up stories and facts to secure being right (hallucinations?). Those with a healthy and active "right brain" can hold two contradictory notions in their minds without feeling the need to settle on a conclusion. I'm just skimming the surface here. Best to read McGilchrist.

"actions that will destroy the integrity of civilization’s information repositories." A very powerful and provocative statement. Something new to add to the list of the unravelling of the Homocene. A valuable idea that has not gotten any/enough? scrutiny, heretofore. Many thanks.