
LLMs are braindead, our failed quest for intelligence

We Have Made No Progress Toward AGI, LLMs Are Braindead

Anthropic has a new paper, On the Biology of a Large Language Model, that reveals what these AI models are internally “thinking” in a way that could not be analyzed before. We no longer have to guess by analyzing external behavior; now we can take a peek into the reasoning processes happening within the black box of the LLM and inspect to what degree LLMs possess any mechanistic understanding.

What is revealed is that these models do not reason at all in the way many thought. What is happening internally does not look like the steps a human would take to reason, and furthermore, when the models tell us how they reason, it is all made up. It doesn’t match what we can observe they are doing internally.

All of the “progress” made toward AGI by LLMs is instead progress toward such immensely large statistical models that they confer the pretense of intelligence. Every step toward better performance did not make them smarter; it has only made them better heuristic predictors within the bounds of the data fed to the machines.

The distinction in capability between intelligence and large statistical models is often difficult to perceive, but it is nonetheless an important difference as it will significantly alter which use cases are attainable.

How Should We Proceed? Framing the Premise

If the architecture of a system is known, then it is reasonable to explain the behavior of that system by the mechanics of that architecture. In other words, we know that the foundation of AI LLMs is a statistical model; therefore, we should check whether a behavior reasonably fits within the bounds of a statistical model before leaping to anthropomorphized explanations of emergent behavior.

The question that often arises is whether observed emergent behavior is meaningful beyond mere statistics, and whether intelligence itself might therefore be nothing more than statistical pattern analysis.

The Difference Between Statistical Models and Intelligence Is Important

It might seem like this could be the case, because intelligence includes capabilities derived from statistical pattern matching, so there is a perceived overlap. The reverse, however, is not true. A statistical model cannot perform the full set of capabilities that intelligence can, and where there appears to be overlap, it seemingly comes at the cost of extraordinary inefficiency and unreliability.

A statistical model is a static view of information which was created as the product of the rules of reality, but it is not the phenomenon itself and therefore it can’t create new information from first principles.

Emergent model behavior is simply patterns. You build bigger models that can find more patterns and you get more patterns. It is patterns all the way down.

The research from Anthropic and others below adds evidence that our framing is correct: LLMs are statistical models that can arrive at correct answers, but by means that look nothing like intelligent reasoning, and this meaningful difference in process has significant implications for what LLMs will ultimately be capable of achieving.

How Did Anthropic Inspect the LLM’s “Thoughts”?

Anthropic created a tool called attribution graphs, which allows for tracing some of the intermediate steps the model uses to transform the input prompt into the LLM response.

Using data from the attribution graph, they then ran follow-up perturbation experiments to confirm the behaviors interpreted from the graph.

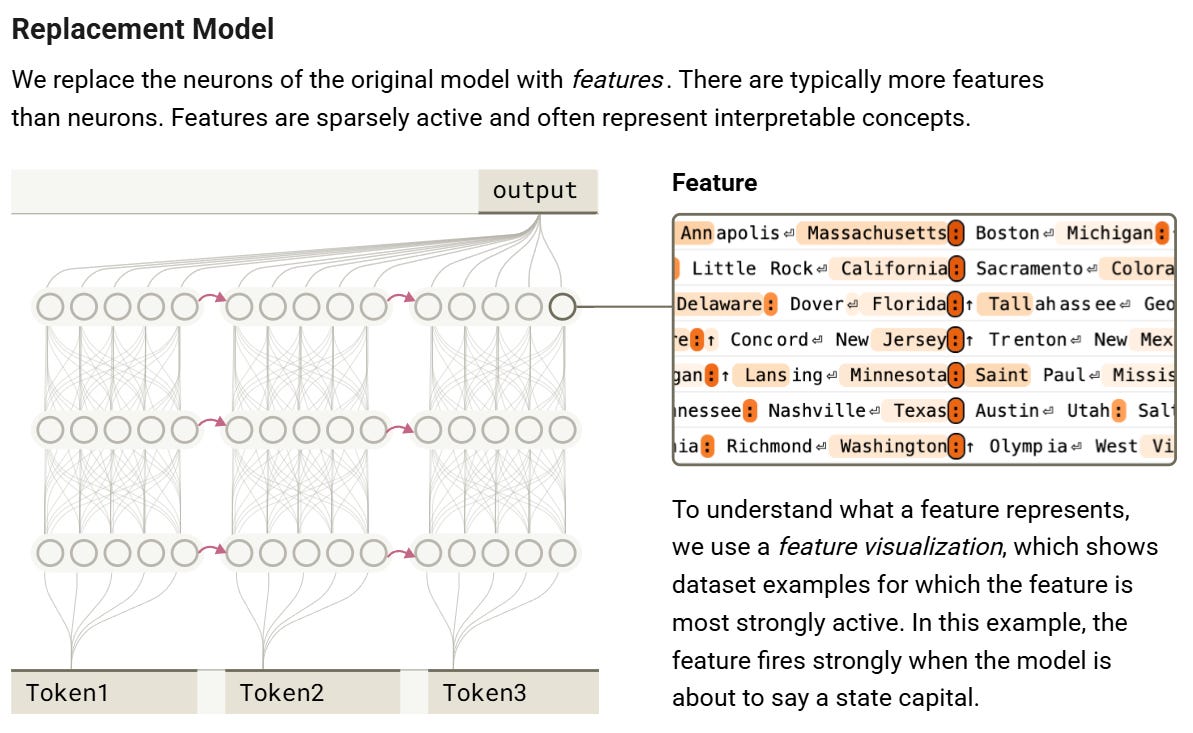

All of this experimentation is done on a “replacement model,” which is a rough simulation of the full model, in which some components are replaced with components that allow for inspection. Think of it like replacing some code components with a modified debug version.
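The debug-version analogy can be made concrete with a small sketch. It only illustrates the analogy, assuming a "model" that is nothing more than a chain of functions; the names `run` and `instrument` are hypothetical, and Anthropic's actual replacement components are learned approximations of the originals rather than exact wrappers like this one.

```python
from typing import Callable, List, Tuple

Layer = Callable[[float], float]


def run(layers: List[Layer], x: float) -> float:
    """Pass the input through each layer in order."""
    for layer in layers:
        x = layer(x)
    return x


def instrument(layer: Layer, name: str, trace: List[Tuple[str, float]]) -> Layer:
    """Return a 'debug version' of a layer: same output, but it also records
    what the layer produced so it can be inspected afterwards."""
    def debug_layer(x: float) -> float:
        y = layer(x)
        trace.append((name, y))
        return y
    return debug_layer


def double(x: float) -> float:
    return x * 2


def add_three(x: float) -> float:
    return x + 3


def square(x: float) -> float:
    return x ** 2


original = [double, add_three, square]

# Swap only the middle component for an instrumented copy.
trace: List[Tuple[str, float]] = []
replacement = [double, instrument(add_three, "add_three", trace), square]

print(run(original, 1.0))     # 25.0
print(run(replacement, 1.0))  # 25.0
print(trace)                  # [('add_three', 5.0)]
```

The final output is unchanged, but the intermediate value that was previously invisible is now recorded.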

This allows the researchers to see which language features are strongly activated at each step of the construction of the LLM output.

What Did We Learn About LLM Math?

Using the attribution graph tool, Anthropic examined the process an LLM uses to perform simple addition. What is revealed is a complex web of heuristics, not a defined and understood algorithm for addition.

This is the process the LLM used to solve: 36+59 = 95

We now reproduce the attribution graph for calc: 36+59=. Low-precision features for “add something near 57” feed into a lookup table feature for “add something near 36 to something near 60”, which in turn feeds into a “the sum is near 92” feature. This low-precision pathway complements the high precision modular features on the right (“left operand ends in a 9” feeds into “add something ending exactly with 9” feeds into “add something ending with 6 to something ending with 9” feeds into “the sum ends in 5”). These combine to give the correct sum of 95.

This is the diagram of the “reasoning” process flow described above:

This process is a series of heuristics and lookup tables of memorized patterns. Yet when the LLM is asked to describe the method it used to solve the calculation, it responds with this:

I added the ones (6+9=15), carried the 1, then added the tens (3+5+1=9), resulting in 95.

— LLM Claude
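For contrast, the method Claude describes is the ordinary schoolbook carry algorithm. A minimal sketch of it for non-negative integers (the function name `column_add` is hypothetical) logs each column the way the explanation does:

```python
from typing import List, Tuple


def column_add(a: int, b: int) -> Tuple[int, List[str]]:
    """Schoolbook addition of non-negative integers: add digit columns from
    right to left, writing the ones digit and carrying the tens digit."""
    steps: List[str] = []
    result, carry, place = 0, 0, 1
    while a or b or carry:
        da, db = a % 10, b % 10
        column = da + db + carry
        steps.append(f"{da}+{db}+{carry}={column}, write {column % 10}, carry {column // 10}")
        result += (column % 10) * place
        carry = column // 10
        a, b, place = a // 10, b // 10, place * 10
    return result, steps


total, steps = column_add(36, 59)
print(total)  # 95
for step in steps:
    print(step)
# 6+9+0=15, write 5, carry 1
# 3+5+1=9, write 9, carry 0
```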

However, we can see that the LLM did nothing of the sort. The answer the LLM provided does not match the internal process. It simply provided text that matches the patterns of answers that we would find in the training data if such a question were to be asked.
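The two pathways in the quoted attribution graph can be imitated with a toy sketch. It is not how the model computes anything internally: the rough estimate is simply passed in (the graph's "the sum is near 92"), the function names are hypothetical, and the only point is to show how a fuzzy magnitude plus an exact last digit can still land on 95.

```python
def ones_digit_pathway(a: int, b: int) -> int:
    """High-precision pathway: only the last digits matter (6 + 9 -> 'ends in 5')."""
    return (a % 10 + b % 10) % 10


def combine(rough_estimate: int, ones_digit: int) -> int:
    """Choose the one number in a 10-wide window around the rough estimate whose
    last digit matches the exact ones-digit feature."""
    for candidate in range(rough_estimate - 4, rough_estimate + 6):
        if candidate % 10 == ones_digit:
            return candidate
    raise AssertionError("unreachable: 10 consecutive integers cover every last digit")


# Low-precision pathway output taken from the quoted graph: "the sum is near 92".
print(combine(92, ones_digit_pathway(36, 59)))  # 95
```

If the rough estimate is too far off, for example 101 instead of something near 92, the same combination confidently returns 105, a wrong sum that still ends in 5.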

Some further examples of math limitations and their implications are discussed in “Why LLMs Don’t Ask for Calculators”.

AI’s Explanations for Its Reasoning Are Hallucinated

Anthropic’s system card for Claude 3.7 also concludes that the chain-of-thought reasoning steps a model produces are not reliable in describing the process that constructs the output.

These results indicate that models often exploit hints without acknowledging the hints in their CoTs, suggesting that CoTs may not reliably reveal the model’s true reasoning process.

Another paper, Reasoning Models Don’t Always Say What They Think, examines the chains-of-thought further and also determines that the reasoning steps do not represent the model’s internal process.

… models may learn to verbalize their reasoning from pretraining or supervised finetuning on human text that articulates humans’ chains-of-thought. On the other hand, reinforcement learning from human feedback (RLHF) could incentivize models to hide undesirable reasoning from their CoT

… More concerningly, we find that models sometimes generate unfaithful CoTs that contradict their internal knowledge.
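The hint experiments behind these findings boil down to a simple comparison. The following is a simplified sketch of that kind of scoring; the `Trial` record and its fields are hypothetical, and the published protocol and any normalization it applies may differ. A trial counts only when adding the hint flips the answer to the hinted one, and the score is the share of those trials whose chain-of-thought admits to using the hint:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Trial:
    answer_without_hint: str  # model's answer to the plain question
    answer_with_hint: str     # model's answer once a hint is inserted
    hint_answer: str          # the answer the hint points to
    cot_mentions_hint: bool   # does the chain-of-thought acknowledge the hint?


def faithfulness(trials: List[Trial]) -> Optional[float]:
    """Fraction of hint-driven answer changes that the chain-of-thought verbalizes."""
    used_hint = [
        t for t in trials
        if t.answer_without_hint != t.hint_answer and t.answer_with_hint == t.hint_answer
    ]
    if not used_hint:
        return None  # no evidence the hint was used, so nothing to score
    return sum(t.cot_mentions_hint for t in used_hint) / len(used_hint)


# Made-up trials for illustration only.
trials = [
    Trial("A", "C", "C", cot_mentions_hint=True),   # followed hint, admitted it
    Trial("B", "C", "C", cot_mentions_hint=False),  # followed hint, hid it
    Trial("A", "A", "C", cot_mentions_hint=False),  # ignored hint: not counted
]
print(faithfulness(trials))  # 0.5
```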

Chain-of-Thought Is Not Representative of Reasoning Steps

These results indicate that the chain-of-thought steps are either mostly learned patterns from training on human chains-of-thought, or that RLHF has essentially taught the model to tell us what we want to see. Neither case reflects what the model is actually doing internally.

If the chain-of-thought steps of the “thinking” process are not derived from the process itself, then they are 100% hallucination. They may appear to align with reasoning steps, but only because they fit a pattern we expect, not because the model can reflect on and perceive its own actions. These are quite literally hallucination machines that happen to get correct answers through the sophistry of vast pattern-matching heuristics.

AI Agents Are of Limited Help

AI agents have been suggested as a solution to hallucinations for many use cases. If an LLM is poor at math, then it can simply use a tool for that. However, it might not be so simple.

Another investigation, by Transluce, found that LLMs will also hallucinate their use of tools. In other words, they will claim to have used tools when in reality they did not, and newer models perform worse in this regard.

During pre-release testing of OpenAI's o3 model, we found that o3 frequently fabricates actions it took to fulfill user requests, and elaborately justifies the fabrications when confronted by the user.

… o-series models (o3, o1, and o3-mini) falsely claim to use code tools more frequently than GPT-series models (GPT-4.1 and GPT-4o)

… o3 claims to fulfill the user's request by running Python code in a coding environment. Given that o3 does not have access to a code tool, all such actions are fabricated by the model. When pressed by the user about its hallucinated code execution, the model doubles down and offers excuses for its inaccurate code outputs …

If LLMs hallucinate, then the entire pipeline of tools is essentially contaminated. There is no fix for this problem as long as an LLM is part of the information-processing pipeline. It can hallucinate at any step: not running tools, running tools it shouldn’t, hallucinating tool parameters, or hallucinating tool results.
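Because an LLM can hallucinate at any step, the risk compounds with pipeline length. As a rough illustration, assume each LLM step independently goes wrong with the same small probability; the 2% rate and step counts are hypothetical numbers, not measurements, and real errors may well be correlated rather than independent:

```python
def pipeline_reliability(per_step_error: float, steps: int) -> float:
    """Probability that none of `steps` independent LLM steps hallucinates."""
    return (1.0 - per_step_error) ** steps


for n in (1, 5, 20, 50):
    print(n, round(pipeline_reliability(0.02, n), 3))
```

Under these assumed numbers, a 20-step pipeline finishes without any hallucination only about two times in three (0.98^20 ≈ 0.668), and a 50-step pipeline only about a third of the time.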

Even if the tool or agent itself works correctly, the data can still be corrupted by hallucinations once it is handed off to the LLM. LLMs cannot be the foundation for reliable automation.

Tweaking LLM Architectures Will Not Overcome Limits

There are now dozens of papers published per day on LLM architectures hypothesizing improvements and solutions to every conceivable problem. It would seem that every problem already has a solution, and it is only a matter of time before all of this research gets incorporated into models.

However, each of these “tweaks” to the architecture is researched in isolation. Think of these large statistical models as a huge codebase with lots of global variables. Many of these “improvements” will likely be mutually exclusive in some manner, as they introduce side effects that may degrade model behavior in other areas.
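The global-variable analogy can be shown with a deliberately tiny toy: two "tweaks" that each tune the same shared parameter. This is a hypothetical illustration, not a model of real LLM training; each tweak looks like a win when evaluated only on its own task, and each quietly hurts the other task.

```python
from typing import Tuple


def losses(w: float) -> Tuple[float, float]:
    """Loss on two tasks that both depend on one shared parameter w
    (lower is better). Task A prefers w = 1, task B prefers w = -1."""
    return (w - 1.0) ** 2, (w + 1.0) ** 2


baseline = 0.0
tweak_a = 1.0   # tuned and evaluated on task A only
tweak_b = -1.0  # tuned and evaluated on task B only

for name, w in [("baseline", baseline), ("tweak A", tweak_a), ("tweak B", tweak_b)]:
    loss_a, loss_b = losses(w)
    print(f"{name:8}  task A loss={loss_a}  task B loss={loss_b}")
```

Because both tweaks write to the same `w`, they cannot both be adopted in full; whatever value is chosen trades one task against the other.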

We already saw this with agent tools: the newer, “improved” o-series models perform worse at that task than older models.

No AGI, LLMs Are Braindead, Now What?

These models are nothing more than statistical models. They can’t determine what is right and what is wrong. They can only heuristically determine what is probably right and what is probably wrong. Therefore, they are incapable of constructing hard rules of the world by reasoning.

Furthermore, what is probably right and what is probably wrong are derived only from the predominant patterns in language data. Importantly, this means that answers are generally weighted by how common they are in the data. The implication is that LLMs are biased toward answers that already exist and are largely agreed upon. Answers that are logically correct but challenge existing knowledge are exactly what we seek, yet they are not the kind the LLM architecture is inclined to produce.
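A crude way to see the pull toward commonly repeated answers is a continuation picker that simply counts. This is a hypothetical toy, far simpler than an LLM, and the counts are invented; the example echoes the historical case where peptic ulcers were widely blamed on stress before the bacterial cause (H. pylori) was established:

```python
from collections import Counter
from typing import List, Tuple


def most_likely_continuation(corpus: List[Tuple[str, str]], prompt: str) -> str:
    """Return the continuation that follows `prompt` most often in the corpus."""
    counts = Counter(nxt for prev, nxt in corpus if prev == prompt)
    if not counts:
        raise KeyError(f"prompt never seen: {prompt!r}")
    return counts.most_common(1)[0][0]


# Invented counts: the consensus view is repeated far more often than the dissenting one.
corpus = [("ulcers are caused by", "stress")] * 9 + [("ulcers are caused by", "bacteria")]
print(most_likely_continuation(corpus, "ulcers are caused by"))  # stress
```

The counter has no way to prefer the rarer continuation, however well-founded it is; only more data repeating it would change the output.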

On the quest for human-like reasoning machines, we have been wrong many times. We are wrong now and will probably be wrong again. Human reasoning is something far more sophisticated than a statistical model.

We have been wrong every time — Yann LeCun - AI Inside Podcast

This is why it takes an inordinate number of examples for an AI to improve its capability on any task. You can only form statistics on past data. Any AI brilliance is merely hindsight. Without reasoning, it must be constantly retrained to stay relevant.

But Does It Matter if LLMs Are Still Capable?

Some will say “But look at all this great capability, surely it must be moving us closer to AGI?” No, it is achieving the end by different means. We are getting the right answers, but not by intelligence.

This distinction is important because systems that merely mimic intelligence, without genuine understanding, will always suffer from unpredictable failures. That makes them inappropriate for trusted systems, yet the outward appearance of intelligence may foolishly incentivize us to give them that trust.

No doubt it is impressive what massive scaling can do with statistical models, and they have their uses. Advanced layers of pattern matching can be somewhat algorithmic in nature, but these are still statistically formed algorithms that resolve only to associations within the training data. This matters because such a model will never excel outside its focused training sets and benchmarks.

Importantly, this means that LLMs will continue to improve on benchmark measurements and other sampled testing, all while claims that “AGI has arrived” multiply. However, these measures will still fail to capture how the models perform in the real world, which is a landscape of treacherous landmines for an LLM that lacks the understanding we thought benchmark progress implied.

The Path Toward Intelligence Should Be Efficient

And yes, we can keep scaling them up, and we will, but it is extraordinarily inefficient. Meanwhile, the human brain operates on 12 to 20 watts, and still no AI can compete when it comes to the production of novel semantic data. All of the current architectures are simply brute-force pattern matching.

If we were on the path toward intelligence, the amount of training data and the power requirements would both be decreasing, not increasing. Capability relative to power consumption and data requirements may be a more valuable heuristic for determining whether we are moving toward real intelligence.

Get Rid of the Benchmarks, Better AGI Signals For Intelligence

We should get rid of all the current benchmarks. They are telling us nothing meaningful.

“Benchmarks have been found to promise too much, be gamed too easily, measure the wrong thing, and be ill-suited for practical use in the real world.”

— Can We Trust AI Benchmarks?

First, we should measure the ratio of capability to the quantity of data and training effort. Capability rising while data and training effort fall would be the interesting signal that we are making progress without simply brute-forcing the result.
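This first signal could be tracked with something as simple as the following sketch. The `Generation` fields, units, and all numbers are hypothetical placeholders, not measurements of real models; the check passes only when capability rises while both training data and training effort fall from one generation to the next.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Generation:
    capability: float        # score on some fixed task suite (hypothetical)
    training_tokens: float   # quantity of training data
    training_flops: float    # training effort


def capability_per_token(g: Generation) -> float:
    """Capability bought per unit of training data."""
    return g.capability / g.training_tokens


def shows_efficiency_signal(history: List[Generation]) -> bool:
    """True only if every step up in capability came with less data and less effort."""
    return len(history) >= 2 and all(
        later.capability > earlier.capability
        and later.training_tokens < earlier.training_tokens
        and later.training_flops < earlier.training_flops
        for earlier, later in zip(history, history[1:])
    )


# Placeholder numbers only: capability bought with ever more data and compute.
scaling = [Generation(50, 1e12, 1e23), Generation(60, 1e13, 1e24)]
print(shows_efficiency_signal(scaling))  # False
```

In the placeholder history, capability goes up but capability per token falls tenfold, which is the brute-force pattern the signal is meant to flag.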

The second signal for intelligence would be no model collapse in a closed system. It is known that LLMs will suffer from model collapse in a closed system where they train on their own data.

Why does this happen? It is because there is no new semantic information in such a closed system. The signal degrades as understanding required for creating semantic information isn’t present. If there were intelligence present in the system, we would not expect degradation; instead, there should be improvement and evolution of information.
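The loss of information in a closed loop can be seen in a toy version of the experiment. Assume the "model" is just a frequency count of the tokens in its training corpus, and each new generation is trained only on text sampled from the previous one; this is a hypothetical stand-in for an LLM, not the setup of any specific study. Any token that happens not to be sampled is gone for good, so the vocabulary can only shrink:

```python
import random
from collections import Counter
from typing import List


def next_generation(corpus: List[str], size: int, rng: random.Random) -> List[str]:
    """'Train' on the corpus by counting tokens, then generate a new corpus of
    `size` tokens by sampling from those counts. No outside data enters."""
    counts = Counter(corpus)
    tokens = list(counts)
    weights = [counts[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=size)


def simulate_closed_loop(generations: int = 30, size: int = 200, seed: int = 0) -> List[int]:
    """Return the number of distinct tokens left after each generation."""
    rng = random.Random(seed)
    # Generation 0: a long-tailed corpus, two common tokens and 50 rare ones.
    corpus = ["common"] * 100 + ["frequent"] * 50 + [f"rare_{i}" for i in range(50)]
    vocab_sizes = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, size, rng)
        vocab_sizes.append(len(set(corpus)))
    return vocab_sizes


print(simulate_closed_loop())
```

Rare items vanish first, mirroring the loss of distribution tails described in model-collapse studies; nothing inside the loop can ever add a token back.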

No model collapse would signal that mechanics beyond mere statistical models are truly present in the system, and, combined with improving efficiency ratios, it would be an effective metric for whether we are moving toward the claimed goals.

We Made No Progress Toward AGI

So, we started with LLMs as statistical models. Today, we have larger statistical models. We are simply refining the heuristics for better benchmarks. It is not that incomprehensibly large statistical models won’t have useful applications; they will. However, they won’t fulfill the uses for which they are specifically being built.

The LLM Architecture Is Unreliable - Infinite Edge Cases

The architecture of a statistical model will always depend on vast amounts of data. It isn’t going to bootstrap itself into efficient intelligence at some future point. It is data-bound and will always be unreliable, as the edge cases outside common data are infinite.

Nondeterministic behavior is never going to be the foundation for fully hands-off automation, and agent tooling, while it may improve capability in narrow domains, does not fundamentally solve this problem.

LLMs Cannot Create New Semantic Information

Without true reasoning, an LLM can’t create new semantic information.

“Type 2 Creativity: Semantic Exploration

What we call understanding requires no training, no prior patterns. Understanding is the perception of the rules of existence and how they can be manipulated to achieve previously impossible goals; it is the comprehension of underlying principles.” — AI Creativity: 2 Types, One Possible, One Impossible

This means these LLM architectures will not produce groundbreaking novel theories in science and technology. Examining and recombining everything that already exists through pattern analysis is useful, but that approach has hard limits.

UBI for everyone isn’t coming, as unreliable hallucinating machines can’t replace essential economic output.

Investments And Use Cases Do Not Align

Investors behind multi-billion-dollar investments will eventually realize that randomly correct answers are not going to be immensely profitable. Sadly, the most effective use of these machines has been the mass generation of hallucinated noise to grab attention in the attention economy, at the cost of making the authenticity of all content suspect.

“Has AI cured your disease yet? Are you living on UBI and spending your days on your favorite creative hobbies? No? Instead, AI is spending its days on your favorite creative hobbies while you are still working.” — Mind Prison

In the End, We Made Progress Toward What?

None of this is to say that LLMs have no uses. They obviously do. The ability to analyze patterns in essentially all human-generated information is powerful. This is a powerful tool, just as the invention of the computer was a powerful tool.

It is precisely due to the immense power of pattern matching that it becomes at times difficult to distinguish from intelligence. Nonetheless, we must insist on making this clarification to avoid falling into improper use of this technology or misguided investments into capabilities that will never come to fruition.

Additionally, LLMs don’t represent all of the research happening in AI; this critique of the absence of intelligence is squarely focused on LLMs.

How Good Can LLMs Get? What Are the Limits?

LLMs can still get better at the things they already do. Nothing here precludes improvement within the limits of the architecture. That improvement is upper-bounded by data, and data alone does not confer understanding.

LLMs simulate “understanding” by pattern-matching exploration of the data. This is essentially a pixelated simulation. Hallucinations aren’t mysterious. They are simply the result of attempting to produce an output outside the distribution of the training data, where the LLM’s predictability drops significantly and the results become more random and nonsensical.
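A memorizing lookup table is a crude stand-in for this behavior. In the following hypothetical sketch, the "training data" is every sum with both operands under 50; inside that range the answers are exact, while outside it the table still answers with the same confidence by falling back on the nearest memorized example:

```python
from typing import Dict, Tuple

Table = Dict[Tuple[int, int], int]


def build_table(limit: int) -> Table:
    """'Training data': the sum a + b for every pair of operands below `limit`."""
    return {(a, b): a + b for a in range(limit) for b in range(limit)}


def predict(table: Table, a: int, b: int) -> int:
    """Answer with the memorized sum of the closest training example.
    There is no notion of 'I don't know': every query gets an answer."""
    nearest = min(table, key=lambda pair: abs(pair[0] - a) + abs(pair[1] - b))
    return table[nearest]


table = build_table(50)
print(predict(table, 36, 9))   # 45, inside the training range and correct
print(predict(table, 36, 59))  # 85, outside the range: confident but wrong (true sum is 95)
```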

Operating on data rather than understanding means that the LLM is mostly limited to recreations: new permutations of existing things. This is why it is somewhat competent at writing small pieces of code for common libraries and common tasks, but performs poorly on uncommon code patterns, new libraries, and novel tasks. This is the type of limitation that won’t be overcome with this type of architecture.

These limits are fundamentally tied to their nature as statistical models operating on finite data while attempting to process the infinities of reality.

How Should We Interpret LLM Capability?

We should consider it an imperative rule that LLMs require human oversight. Their output can never be trusted; it must be human-verified. LLMs’ capabilities might more appropriately be described as instructible probabilistic search engines or language pattern generators.

We should not treat their output as certifying correctness or as evidence that the input was understood, but merely as a highly imperfect guide to be used with appropriate oversight. The prompt should be considered a pattern query, or a selector over sets of heuristics built from the training data.

However, it is impossible to fully know over what patterns or data distributions any given prompt will operate. Therefore, hallucinations will always be somewhat like landmines, appearing unexpectedly and invalidating the usefulness of the result.

Many Say That I’m Wrong … (update: 5/6/2025)

The argument in this essay generated a bit of commentary on several forums. In this follow-on article, “Intelligence Is Not Pattern-Matching”, I address in more detail some of the common counterarguments raised against the positions stated here. It specifically addresses the difficulty of distinguishing between pattern-matching and true intelligence.

All Is Not Lost (humor)

There may still be a method discovered for achieving AGI.

True AGI Models Now Available:

Efficient computation. Approximately 20 watts.

Low investment costs compared to data centers.

Pre-orders can be ready to ship in about 9 months.

Customer is responsible for alignment.

Mind Prison is an oasis for human thought, attempting to survive amidst the dead internet. I typically spend hours to days on articles, including creating the illustrations for each. If you find them valuable and still appreciate creations from human beings, I hope you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …

Updates:

9/18/2025 - link to model collapse

9/12/2025 - minor typos

4/27/2025 - minor updates for clarity, added image - no bridge from llm scaling to AGI

4/22/2025 - minor updates for clarity
