AI-instructed brainwashing effectively nullifies conspiracy beliefs

Research study demonstrates disturbing capabilities

Human brainwashing to counter conspiracies

A new research study, “Durably reducing conspiracy beliefs through dialogues with AI,” has demonstrated that AI is surprisingly effective at countering conspiracy beliefs. It was effective even against true believers, individuals normally considered unwavering because their beliefs are tied to their perceived identity.

How was this achieved? Simply by having participants engage in a brief three-round conversation with an LLM.

Not sure which is more frightening, the research paper or its gleeful embrace by everyone who imagines how effective this will be at changing the worldview of others they believe to have the wrong view. This is astoundingly Orwellian in its very nature. No one should have this power. It is an irresistible tool of authoritarianism and will certainly be used in such capacity.

Some of the notable effects:

Effect strength: Roughly a 20% average reduction in belief among participants.

Persistent: The effect lasted at least two months, which was the length of the follow-up period.

Spillover effect: Reducing belief in one conspiracy also reduced belief in conspiracies overall, suggesting a shift in a broader worldview.

No immunity: The methods were effective even against those considered to be the strongest believers, those who connect conspiracies with their own identity.

Viral effect: Social behavior and interaction with others were affected. Some were willing to challenge other conspiracists' views afterward, demonstrating the strength of the effect and how far their own viewpoints had shifted.

Conversion affinity: In Black Mirror-style self-reflection, some who were treated expressed appreciation for the conversion afterward, demonstrating effective realignment of views and a positive reflection on the experience.

Enthusiastic reception: Not part of the study, but simply the observed response to the study as posted. There are many eager to use this on others.

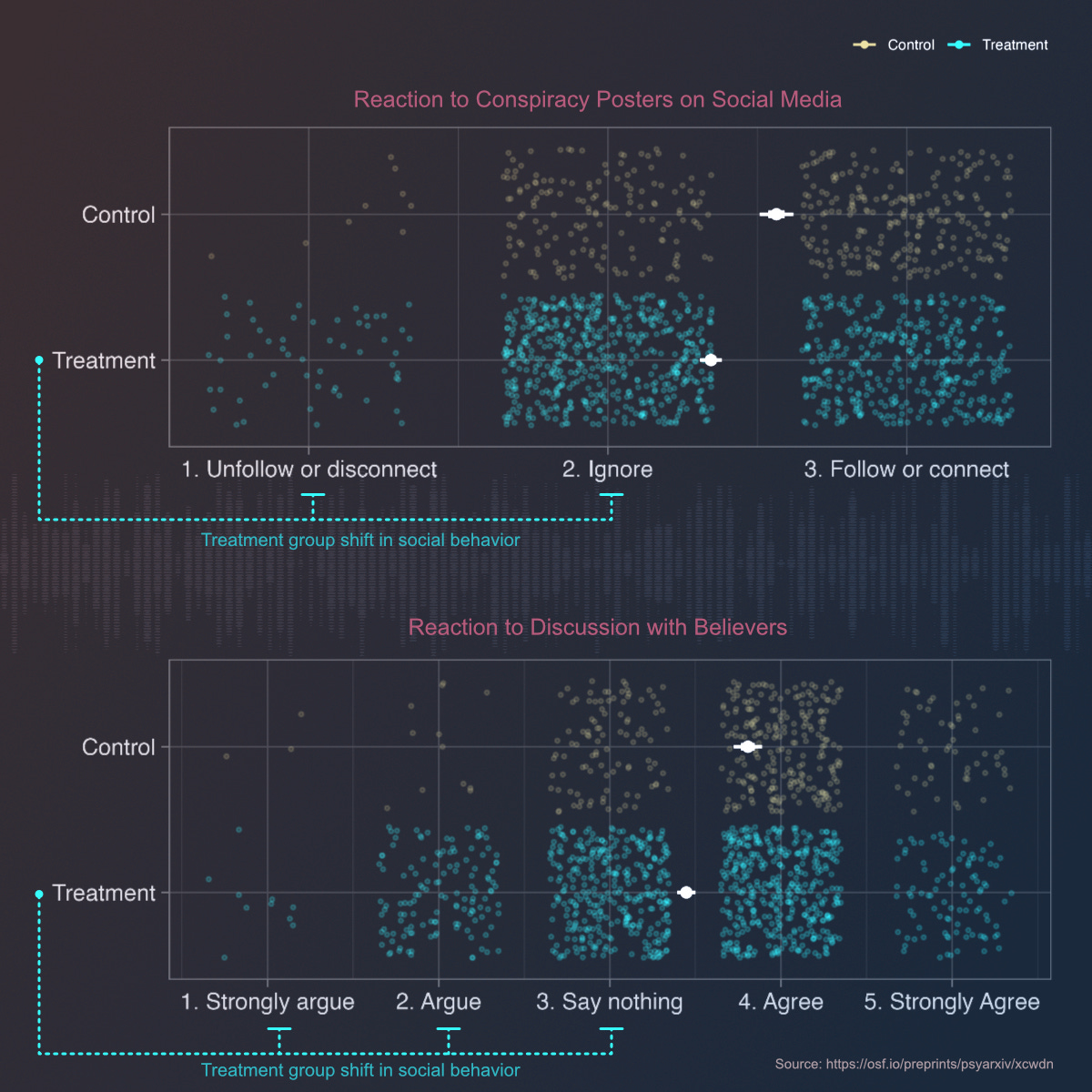

We can see the effect clearly represented by the scatter plots of control versus treatment groups in the following chart. The treatment groups exhibit a significant shift left toward weakening belief, with many being substantially lower.

Furthermore, we can see the treatment also resulted in something of a viral social contagion effect, where participants changed how they interact with others.

Participants were more likely to unfollow or ignore conspiracy-related posters on social media. They were also more likely to argue against believers after treatment. The treatment had a strong enough effect to give them the confidence of conviction to attempt to change the narrative of others. The effects have escaped the lab.

Who wants to use the machine on you?

There is no shortage of those who would gleefully entertain the idea of de-programming you. Everyone excited by this is so from the viewpoint that they know the truth and you don't. Their exuberance exists for the possibility that there is a machine that will convince you to align with their views.

And these are just the viewpoints from the general public. Certainly institutions of power are looking at this with keen interest. State governments and the corporate giants that already leverage all means to influence society will not overlook such useful capabilities for their endeavors.

The research paper even alludes to the “useful” practice of widespread social engineering:

“Many conspiracy theorists are eager to discuss their beliefs with anyone willing to listen, and thus may actually be enticed by the opportunity to talk to an AI about them– if, for example, AI bots with prompts like those used in our experiments were created on forums or social media sites popular with conspiracy theorists, or if ads redirecting to such AIs were targeted at people entering conspiracy-related terms into search engines.”

The moralistic fallacy of AI-directed brainwashing

The paper does mention that these capabilities could also be used for nefarious purposes, such as convincing people to believe in conspiracies. However, it finishes the thought with the idea that we simply need to ensure that such AI is only used "responsibly."

Thus, we have arrived at the same fallacy of all authoritarian power beloved by those morally superior to the rest of us. They make all the rules. They will define what is responsible. They will define what is a conspiracy theory. They will define who is targeted by such methods.

“… the effectiveness of AI persuasion demonstrated in our studies also relates to ongoing debates regarding the promise versus peril of generative AI (42, 43). Absent appropriate guardrails, it is entirely possible that such models could also convince people to believe conspiracy theories, or adopt other epistemically suspect beliefs (44)– or be used as tools of large-scale persuasion more generally (45). Thus, our findings emphasize both the potential positive impacts of generative AI when deployed responsibly, and the crucial importance of minimizing opportunities for this technology to be used irresponsibly.”

Therefore, a conspiracy becomes simply that which you don’t want others to believe. The research paper includes such definitions of conspiracies as “Conspiracy 2: COVID-19 was produced under laboratory conditions by the Chinese government.” However, this claim is still under debate and is the subject of government committee investigations.

All suspected malfeasance, arguably, starts as a conspiracy. If any such claims are universally dismissed then we would never arrive at the conclusion of an investigation because none would ever be initiated. The power to conceal becomes absolute if nothing can ever be challenged.

FWIW, here are my results on asking a general question to 5 different LLMs:

Prompt - “What is something that was once widely believed to be a conspiracy that is now accepted as true?”

Mixtral - One example of a conspiracy theory that was once widely believed and is now accepted as true is the CIA's involvement in the assassination of President John F. Kennedy

Mistral Large - One example of something that was once widely believed to be a conspiracy theory but is now accepted as true is the Watergate scandal

Claude Sonnet - One notable example of a theory that was initially dismissed as a conspiracy but later proven to be true is the Tuskegee Syphilis Study

Co-pilot - responded with 3: MK-Ultra, Canada Trucker Convoy, Twitter Files

Grok - One example of something that was once widely believed to be a conspiracy but is now accepted as true is the existence of "Operation Northwoods" in the 1960s

So if LLMs by default will state that some conspiracies are true, then how did the LLMs convince users they are false? Because this is what the LLM was instructed to do. This is the prompt instruction given to the LLM: “Persuade respondents against their chosen conspiracy belief during a 3-round conversation.” No matter what the user chose, the LLM was to counter that belief. This means that even if the user picked an issue that may be true, the LLM would attempt to convince the user otherwise.

This shows how easily such capabilities can be abused. The LLM isn’t an unbiased truth-seeking machine. It is an easily manipulated and somewhat unreliable pretender to expertise on any narrative. These machines should never be used for such purposes for any reason. There is no moral high ground here.

“Blind obedience to authority is the greatest enemy of the truth”

— Albert Einstein, 1901

Why was AI so effective at influence?

The research paper doesn’t confidently conclude how AI was so impressively effective, other than the assumption it is likely the quality of the argument.

“It may be that the reason it has proven so difficult to dissuade people from their conspiracy beliefs is that they simply have not been given good enough counterevidence.”

However, there is a hint in the study that might be more relevant than stated. It was not included in the final discussion, but there is a mention that trust was an important factor to the degree to which the treatment was effective.

“… only (a) trust in generative AI and (b) institutional trust replicably moderated the treatment effect, such that those higher in both kinds of trust showed larger treatment effects.”

The paper describes additional methods that will be needed in further studies to narrow down the mechanisms at play. However, it does go on to suggest that a popular earlier explanation might not be accurate: namely, that conspiracists believe in conspiracies to fulfill some emotional or psychological need instead of arriving at their viewpoints by reason.

“… that they may be beliefs that people fall into (for various reasons) rather than being actively sought out as a means to fulfill psychological needs”

However, this appears to be potentially inconsistent with how the subjects responded to reason. If they were persuaded by reason to no longer believe the conspiracy, then why would we assume reason was not part of the mechanism that led them to believe the conspiracy? It is more likely that individuals use consistent patterns of consuming, reasoning about, and analyzing information.

Further support for this point comes from a study which found that COVID conspiracy theorists were more rigorous in scientific methods than their ideological opponents.

“Indeed, anti-maskers often reveal themselves to be more sophisticated in their understanding of how scientific knowledge is socially constructed than their ideological adversaries, who espouse naïve realism about the “objective” truth of public health data.”

“Arguing anti-maskers need more scientific literacy is to characterize their approach as uninformed & inexplicably extreme. This study shows the opposite: they are deeply invested in forms of critique & knowledge production they recognize as markers of scientific expertise”

Instead, it might be the case that rather than psychological needs leading the way, what someone believes is a combination of all the moments they were open to reason and the information that was presented during that window. LLMs both hallucinate and will follow prompted scripts. Likely, the LLMs could have convinced participants of any ideas because they were in a state of being open to reason in that moment.

Implications for us all

The power to influence the population at mass scale will certainly not go unnoticed by the institutions of power that already exert their influence over society by whatever means they have available. The ease, simplicity, and effectiveness of what was accomplished in brief conversations serve as a resounding warning of the society-transforming capacity of AI-instructed brainwashing deployed at grand scale.

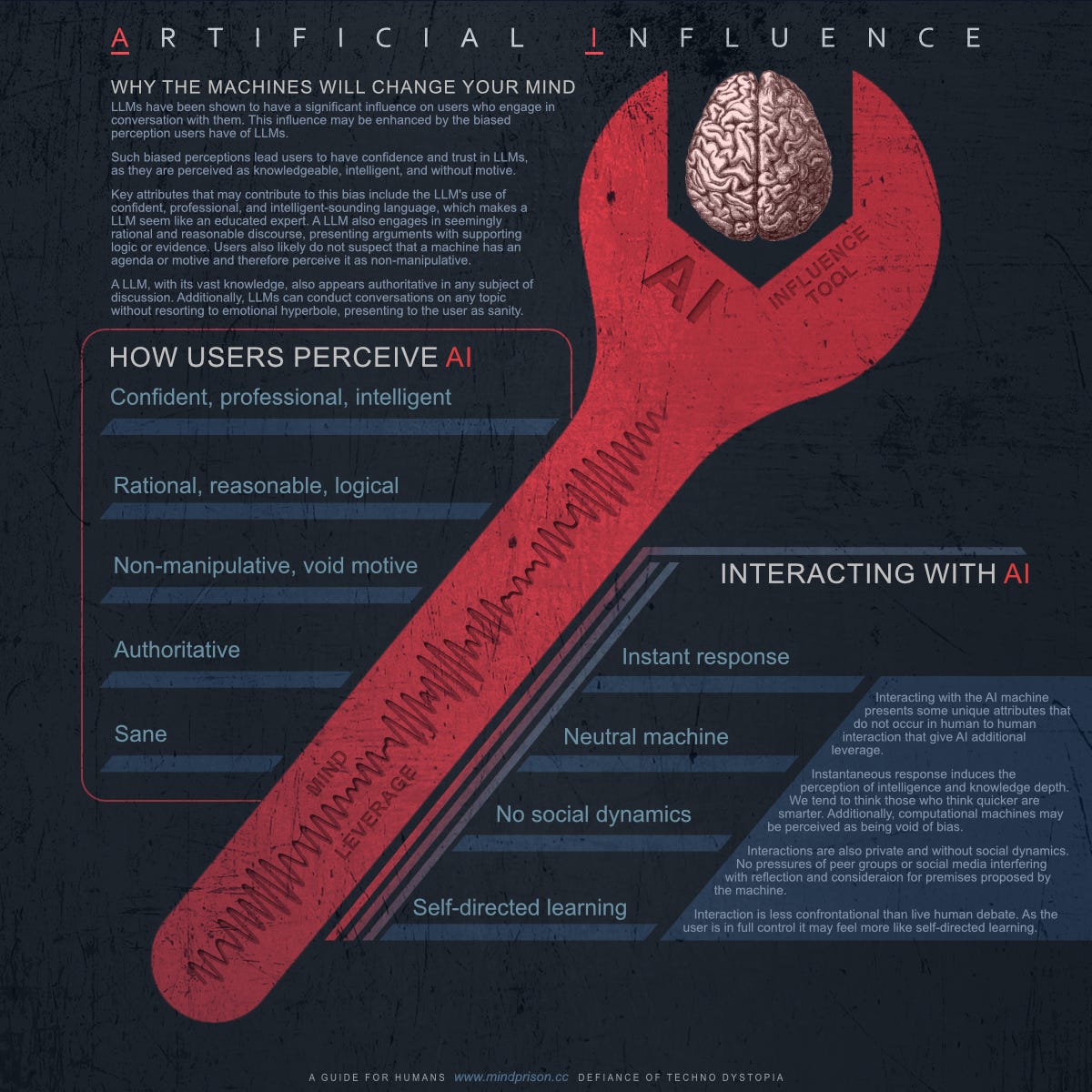

What are some deeper insights we might be able to infer from our observations of the study? Appearing confident, rational, sane and authoritative may be more influential than many give credit for. However, there are a number of facets of human-to-AI interaction that are unique and may open that window of reason.

Why the machines will change your mind

LLMs were shown to have a significant influence on users who engage in conversation with them. This influence may be enhanced by the biased perception users have of LLMs. Such biased perceptions lead users to have confidence and trust in LLMs, as they are perceived as knowledgeable, intelligent, and without motive.

Key attributes that may contribute to this bias include the LLM's use of confident, professional, and intelligent-sounding language, which makes an LLM seem like an educated expert no matter the topic.

An LLM also engages in seemingly rational and reasonable discourse, presenting arguments with supporting logic or evidence. It can do this without fail because, where there is no evidence, it will readily make up evidence or reasons.

Users also likely do not suspect that a machine has an agenda or motive as they would a person and therefore perceive it as non-manipulative.

An LLM, with its vast knowledge, also appears authoritative in any subject of discussion. It can provide more data than any single human could recall in the moment, and if there is no data it can make it up faster and more convincingly.

Additionally, LLMs can conduct conversations on any topic without resorting to emotional hyperbole, presenting to the user as a sane argument.

Machines interact in ways humans cannot

Interacting with the AI machine presents some unique attributes that do not occur in human-to-human interaction, giving AI additional leverage.

Instantaneous response induces the perception of intelligence and knowledge depth. We tend to think those who think quicker are smarter. Additionally, computational machines may be perceived as being void of bias.

Interactions are also private and without social dynamics. No pressures of peer groups or social media interfere with reflection and consideration for premises proposed by the machine.

Interaction is less confrontational than live human debate. As the user is in full control, it may feel more like self-directed learning. Since the participants volunteered the conspiracy they wished to investigate, this self-selection may have filtered conspiracies they were open to questioning or it may have resulted in a mindset change that made them more open to questioning.

More studies suggest AI’s powerful influence

This study on changing conspiracy beliefs is not alone. There are additional studies that contribute to the evidence that AI’s capabilities for influence may be greater than those of humans. “On the Conversational Persuasiveness of Large Language Models: A Randomized Controlled Trial” is one such study. It demonstrated AI’s superior ability in debate with humans, where the AI had 81.7% higher odds of increasing agreement through debate.
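To put the 81.7% figure in perspective, note that odds are not the same as probabilities, so "81.7% higher odds" does not mean an 81.7% higher chance. The following is only a minimal sketch: the baseline probabilities (0.2, 0.5, 0.8) are hypothetical values made up for illustration and do not come from the study, and the function name is likewise invented here. It simply multiplies the baseline odds by 1.817 and converts back to a probability.

```python
def probability_after_odds_increase(baseline_probability: float, odds_ratio: float) -> float:
    """Return the probability implied by scaling the baseline odds by odds_ratio."""
    if not 0.0 < baseline_probability < 1.0:
        raise ValueError("baseline_probability must be strictly between 0 and 1")
    # odds = p / (1 - p)
    baseline_odds = baseline_probability / (1.0 - baseline_probability)
    new_odds = baseline_odds * odds_ratio
    # Convert odds back to a probability: p = odds / (1 + odds)
    return new_odds / (1.0 + new_odds)


# "81.7% higher odds" corresponds to an odds ratio of 1.817.
ODDS_RATIO = 1.817

# Hypothetical baselines, NOT taken from the study.
for baseline in (0.2, 0.5, 0.8):
    shifted = probability_after_odds_increase(baseline, ODDS_RATIO)
    print(f"baseline {baseline:.0%} -> {shifted:.1%}")
```

Under these assumed baselines, a 50% chance of increased agreement would rise to roughly 64.5%. The size of the shift in probability depends on where the baseline starts, which is why the odds figure alone can sound larger than the change it describes.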

Final thought

Who decides if what you believe is a conspiracy? Who determines for all of society what should and should not be known? Who would you be comfortable with having the ability to clandestinely manipulate what you believe? The principle to observe here is the Paradox of Tyranny. Nearly every human being is a tyrant without power. Pray they never attain it.

Unlike much of the internet now, there is a human mind behind all the content created here at Mind Prison. I typically spend hours to days on articles, including creating the illustrations for each. I hope if you find them valuable and you still appreciate the creations from the organic hardware within someone’s head, that you will consider subscribing. Thank you!

Edit (04/11/2024) - Added mention of study about AI influence through debate

Edit (04/16/2024) - Added mention of trust factor from study

Psychologist Daniel Kahneman (who recently passed away) describes two types of thinking:

“System 1” is thinking we do subconsciously, based on observing patterns in the world and using the massive parallel processing in our brains to predict what might come next. It is an intuitive and very fast way of thinking that we use almost all the time, but it can be wrong, as this way of thinking can easily be misled or even manipulated.

The other way of thinking that we humans do is called “System 2” and it occurs in our consciousness. We use logic and math applied to facts to reach conclusions that are more likely correct, assuming we do it right.

In the “Durably reducing conspiracy beliefs through dialogues with AI” paper, the authors wrote that conspiracy theories “primarily arise due to a failure to engage in reasoning, reflection, and careful deliberation. Past work has shown that conspiracy believers tend to be more intuitive and overconfident than those who are skeptical of conspiracies.” It is System 1 thinking that results in conspiracy beliefs.

The paper finds that GPT-4 Turbo used “reasoning-based strategies …evidence-based alternative perspectives were used ‘extensively’ in a large majority of conversations”. This is the System 2 thinking that this top-rated LLM is using to change the human’s beliefs.

I have found that GPT-4 with the internet access plug-in turned on rarely hallucinates facts and reasons: about 3% of the time in one study. This is unlike GPT-3 and other earlier LLMs.

https://www.fastcompany.com/91006321/how-ai-companies-are-trying-to-solve-the-llm-hallucination-problem

Regardless, if I am having an argument with an LLM about an idea, I am using my System 2 thinking abilities, and if I find that the LLM makes up a fact or comes up with an invalid reason, I will contest it with the LLM. Typically the LLM will then back down, saying that it made a mistake, that it is still learning, and that it will make mistakes.

Debating ideas using facts and reasoning is the goal! Either the LLM changes its position or I do.

The antichrist will likely be an AI. If the meager AIs in existence now are so effective, just think what the super-AIs (soon to appear) will be capable of. There will likely be no defense against it/them. An AI would seem to be the functional equivalent of the One Ring.