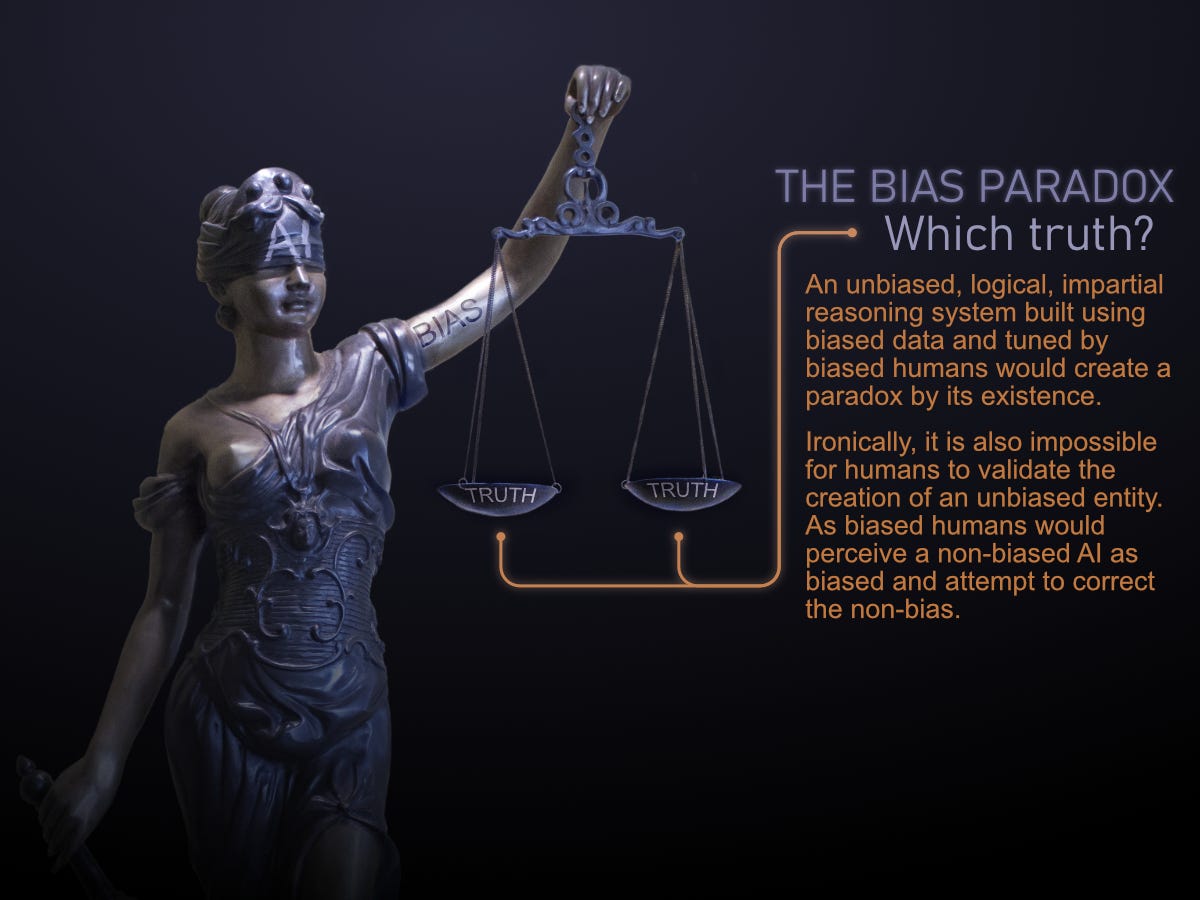

AI: The Bias Paradox

A non-biased AI would be perceived as biased

One of the great aspirations that many are assigning to the potential of AI is for it to be a system of impartial, unbiased reasoning that will free us all from humanity's plague of cognitive dissonance and social animosity. Finally, we could have a true and just arbiter of all information.

There is the idea that we can somehow escape humanity's flaws through the evolution of AI. However, this is a very unlikely result. No matter what great aspirations we place on AI, in the end it is simply a reflection of ourselves. It is a magnifier of both our best and our worst. It will enhance our capabilities both to help and to harm.

AI is trained on vast amounts of human knowledge, which includes both our brilliance and our follies. All of our flawed reasoning forms part of that foundation: our biases, incorrect theories and conclusions, misinformation and propaganda, flawed studies, and so on. Even its construction, the algorithms and code, is the product of imperfect and biased humans. It is from this starting point that we hope to extract correct information uncontaminated by any of these problems.

Refining a trained AI system to produce more correct results requires feedback that can distinguish correct from incorrect. However, how is that accomplished when we debate among ourselves over what is correct, incorrect or biased? Some points of view even consider it appropriate to deliberately bias the system in order to counterbalance existing bias in society.

We are still left with feedback that can only come from humans to verify the integrity of the information. The very same humans who hope that AI can solve this riddle must explain to the AI what is correct. It is circular reasoning, just with AI in the loop.

All methods of refinement bring us back to exactly the same starting point: the same methods we have always used throughout history. If feedback is gathered from all of society, then the most commonly accepted views become “the truth”, but we don’t know that they are in fact correct. If this is decided by the engineers of the system, then the AI simply becomes a reflection of the engineers’ perspectives on correctness.

We are faced with an unresolvable paradox, as there exist no unbiased observers to monitor and tweak the system. We haven't escaped ourselves, because we have only ourselves to refine the AI system. Ironically, the paradox is strengthened by the fact that if a non-biased AI could actually be achieved, its observers would perceive it as biased through their own distorted, biased lens. This misperception would lead them to “fix” the non-bias by injecting their own bias back into the system.

Could we improve on this with the right committees, teams, experts and scientists all providing input to create the best possible AI system? Perhaps some form of governing body with this responsibility and appropriately diverse input?

Humanity has already gone to great effort to build “fair” institutions of governance. Yet despite all the efforts of representative governments, people around the world remain broadly distrustful of them. The only antidote that has helped in this regard has been transparency. When we can’t remove bias or flawed reasoning, the solution is to ensure that it is fully apparent for all to see, and that we are given full agency to decide how to use or navigate that information.

Pure AI - clean slate

Another proposed solution for eliminating bias asks: can AI teach itself? Could there be a pure AI with no contamination of bias from external sources? It would require advancements beyond the methods used in today's primitive AI. Nonetheless, what exactly could we expect if we were to achieve a self-teaching AI?

The first issue that arises is isolation. We want the AI to develop with no contamination from human knowledge and bias. Yet how could an AI be useful without some communication? At what point does it learn language so that we can communicate with it? Language itself carries information and implies a way of thinking that may contain bias.

It would face the same limitations as humans when trying to verify information. The validity of most data we consume is impossible to know; it is full of noise, and all pre-existing data was created by humans. Even published scientific studies are not a safe foundation: by some estimates, 50-80% of findings in certain fields may be incorrect or fail to replicate. This is known as the reproducibility problem.

It would therefore have to start from a clean slate, as if from the beginning of time, isolated from all human contamination. And how would it acquire the resources and tools necessary to re-evaluate all knowledge without the continued assistance of humans? We quickly become blocked by a series of catch-22 scenarios.

Even then, there is no guarantee we would arrive at a better place. It is a fallacy to think that AI will be infallible in its reasoning, or that intelligence will lead to more ethical outcomes. Among humans, we do not observe that high intelligence is necessarily a barrier to bad ethics. Some of the greatest atrocities humans have committed against one another were carried out by some of the most intelligent among us.

How would it even arrive at the concept of morals with no external input or experience? Human children don't acquire morals on their own; morals come either from teaching or from experience. However, learning the morals of life and death, pain and suffering, and so on from experience would require these things to happen first so that they can be observed. Since the AI is starting from a clean slate, does it first experiment by killing people to observe the outcome? Can such morals even be comprehended without emotional input?

“We cannot see things as they are, for we are compelled by a necessity of nature to see things as we are.”

Author unspecified, The Atlas of London (1831).

Accepting the bias

AI can never be fully free of bias. At minimum, there will be unintentional bias, since it is trained on biased data and only biased observers exist to make any corrective adjustments. At worst, it will be deliberately biased, potentially for nefarious reasons.

What people want is an AI somehow uncontaminated by human bias, but this is a paradox that cannot easily be solved: the system must interact with human bias just to be built and defined.

It is important to understand these limitations when proposals come forward for AI to be the new impartial arbiter of justice, truth or knowledge, or for AI to manage governments, political and economic systems, or any other institution that we might falsely imagine would be better if it were controlled by AI.

Accepting that bias will always exist allows us to reason properly about the implications of that reality. Transparency is the best hedge against being unknowingly misled by bias. Whether information comes from AI or any other source, we need to be able to verify how the data was created and where it came from. AI must give users tools to identify the decision process, weights, filters, algorithms and sources used to produce a result. However, even that does not guarantee “safe” AI. There are many more concerns beyond this topic, which I’ve written about previously in “AI and the end to all things …”

Media attributions

Additional observations and points of view on AI bias:

Facts. I agree with this completely, and feel it strikes at the heart of the necessity for democratization. We can’t design a system that is unbiased. What we can design is a system that understands the different perspectives and comes to its own conclusions, with our influence mitigated as much as possible. Our problem is not our differences so much as our evolutionary pattern of rivalry. Perhaps, in the absence of this inborn tendency toward rivalry, AI will be less threatened by opposing viewpoints. But the more eyes we have on the code, the better.

Great article. Good summary of the issue at hand. You could take the argument even further. These facts should make us reconsider the very notion of what "bias" means. Any agent that has a motive, including the motive to collect data, must have a bias. And even without a motive, an ML algorithm is just a compilation of biases built on what it has learned. So the word "bias" doesn't even have a meaning.

It seems hard to get rid of the idea, though; it comes up frequently in how we frame discussions. When we disagree with someone, and we suspect it's due to something intrinsic in how they process their experiences (as opposed to a mistake), we call that "bias".