Technological Acceleration Paradox: The Future is Already Obsolete | How AI Will Outpace Human Adaptation | The AI Singularity

Humanity's quest for its own obsolescence

The Premise

This essay will demonstrate why building AGI/ASI, even when aligned, will result in a paradoxical outcome: just another alternate dystopian end, by delivering exactly what we wish for.

For the sake of this argument, we are not debating the feasibility of creating AGI/ASI, but rather critiquing that outcome if it were to be obtainable. What follows is not an examination of current LLMs, but rather something we still don’t know how to build. The question intended to be put before you is: should we keep trying?

Accelerating Toward Destinations That Do Not Exist

There is a serious misconception about how AI will transform our future, especially among those seeking maximum acceleration. If understood, it might make us question the entirety of this journey and whether we wish to be on it.

The implication is that everyone is enthusiastically racing towards a destination that does not exist. The capability to make the things you want will ironically be the same capability that makes them unattainable. This is not a scenario that arises from some type of AI failure, but rather one that assumes AI performs exactly as intended, that is, “aligned”.

What we will find is that the faster we accelerate toward the goals we desire, the less likely we will achieve those goals. The gold rush to build dreams will become the inescapable spiral of nightmares.

TLDR: Everything You Plan Is Already Obsolete

What this means in the simplest terms is that the things you imagine, dream, plan, and invest your life into building or preparing for will never come to pass. Technology will simply race past those ideas before you ever have a chance to realize them. There is never time to build it before the future arrives and renders it irrelevant.

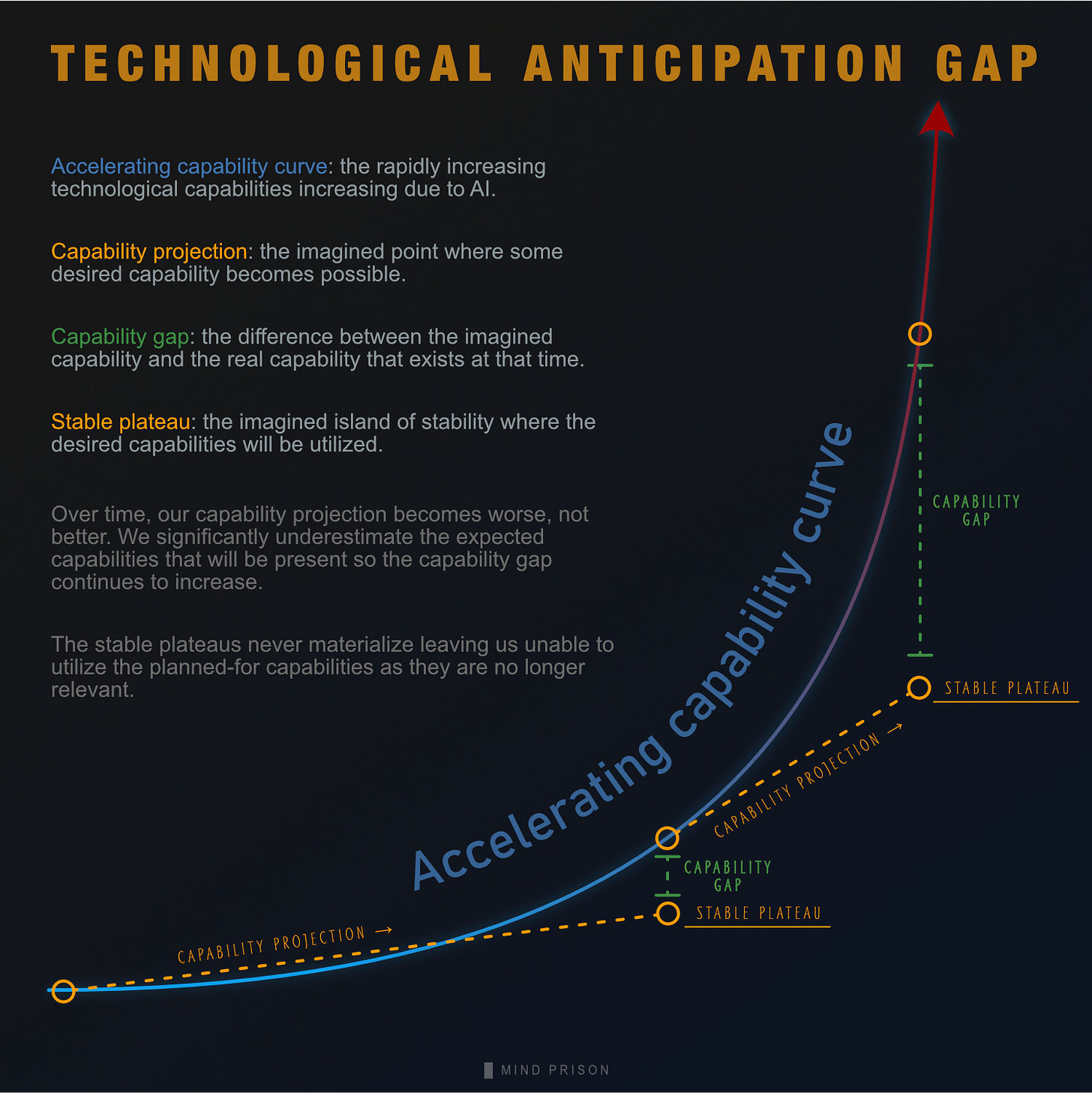

What Is the Technological Anticipation Gap?

The technological anticipation gap defines the discrepancy between what you envision yourself doing with future technology and the reality of that technological capability when it arrives. The wider the gap, the more significantly the experience differs from expectations as the overall technology available will be vastly superior to what was envisioned.

This anticipation gap results in significant misrepresentations of future outcomes, as it is responsible for nearly all the misplaced enthusiasm that will ultimately turn into increasingly frustrating uncertainty. The magnificent dreams of people doing fantastical things with AI will not manifest as they were imagined.

The Increasing Gap Between What We Expect and What Future Delivers

The reason for this is the rapid pace of technological advancement, which becomes increasingly incomprehensible and challenging to predict. Humans are reasonably adept at linear estimation but incredibly poor at anticipating exponential growth. Not only are we terrible at exponentials, but our predictive abilities will also worsen as we progress further along the curve.

There Are No Stable Plateaus

Humans typically imagine a future and then dedicate their lives to planning and preparing for that future. We invest in the resources, skills, and all the other necessities of life to realize some desired outcome of that imagined future. This is a substantial cost, as it generally makes up a significant portion of our lives.

Technology often presents interruptions along this journey, but we often are able to adapt such that we can leverage new, innovative disruptions to assist the pursuit of our original goals.

Society Cannot Remain Stable Amid Rapid Technological Change

However, there is a limit to our capacity to adapt and keep up with change. There is a limit to how much a society can remain stable in the midst of rapid technological change. At some point, it becomes disadvantageous to invest in anything, as it will simply be obsolete before it can be realized or implemented.

The technological anticipation gap presents us with some significant problems for this endeavor. We envision a target or goal based on some capability projection for what will be possible at some point in time in the future. As we can’t predict the future, it is going to be based mostly on current capability with our best assumptions.

Once we arrive at that future point, technology will have advanced in some unexpected ways we could not account for previously. This always happens, but typically it involves some minor modifications to our plans. The plans don’t typically just become completely irrelevant.

We Get Worse at Predicting the Future

However, this discrepancy between what we expected and what manifests, the anticipation gap, does not stay small. On an exponentially increasing capability curve, the gap will become larger and larger as we move forward in time. Our assumptions for future planning will continue to get worse.
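As a rough illustration of the widening gap, the sketch below compares a plan made by linear extrapolation with a capability curve that doubles at a fixed interval. The doubling period, the planning horizon, and the function names are assumptions chosen for illustration, not measurements of any real technology.

```python
import math

DOUBLING_PERIOD = 2.0  # assumed: capability doubles every 2 years (illustrative only)


def capability(t: float) -> float:
    """Toy exponential capability curve, normalized to 1.0 at t = 0."""
    return 2.0 ** (t / DOUBLING_PERIOD)


def linear_forecast(t_now: float, horizon: float) -> float:
    """Forecast made at t_now by extending the current slope in a straight line."""
    slope = capability(t_now) * math.log(2.0) / DOUBLING_PERIOD
    return capability(t_now) + slope * horizon


def anticipation_gap(t_now: float, horizon: float) -> float:
    """Actual capability on arrival minus what the linear plan expected."""
    return capability(t_now + horizon) - linear_forecast(t_now, horizon)


if __name__ == "__main__":
    # Same ten-year plan, made at three different points along the curve.
    for t_now in (0, 10, 20):
        print(t_now, anticipation_gap(t_now, horizon=10))
```

Under these assumptions, the absolute gap for a fixed ten-year plan grows the later the plan is made, and it grows faster still as the horizon lengthens. In this toy model the gap measured relative to the starting capability depends only on the horizon; it is the absolute distance between plan and reality that keeps increasing.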

If we are going to utilize the technology in some manner, we need islands of stability or stable plateaus between periods of technological disruption. Humans need these periods to plan their lives and to enjoy the moments of their creations.

Fuel Cells to Teleportation. Can You Keep Up?

Envision ordering a sophisticated fuel cell car, only to have anti-gravity flying cars emerge before delivery. You order one of those, but virtual teleportation devices become a reality before their arrival. Drowning in this storm of technological advancements, you are left adrift, unable to sail the sea of marvelous creations. There are no tranquil moments to experience what exists.

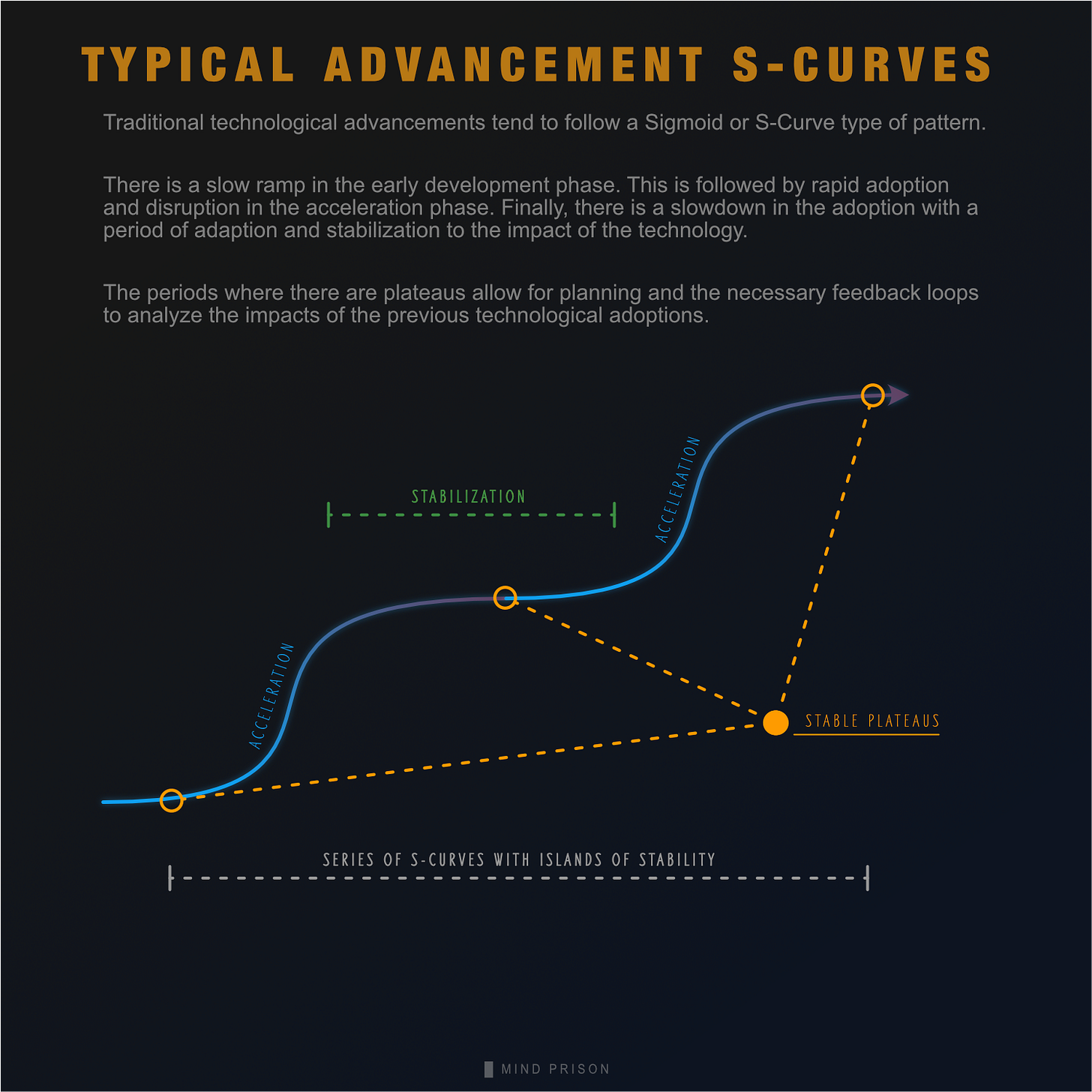

Stabilization Periods Are Essential for Human Aligned Progress

Traditional innovation cycles look like S-curves. They are disruptive within a narrow sector of civilization for a short period, followed by stabilization. That stabilization period is important, as it is where we build meaning around our lives. We adopt skills around those innovations and become good at something that gives us a pleasant, shared experience with the rest of society.

This pattern of advancement also allows time to reflect on what came before and decide what parts are beneficial and what parts need changes or improvements before the next cycle. We need these cycles for our own sanity and to become a bit wiser about choices going forward. Non-stop acceleration that consumes every sector of society will mean existing in perpetual disruption for everything.
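To make the contrast concrete, here is a minimal sketch comparing a logistic S-curve, which levels off at a plateau, with an exponential that never does. The logistic form and all parameter values (`ceiling`, `rate`, `midpoint`) are assumptions for illustration; the essay does not specify a model.

```python
import math


def s_curve(t: float, ceiling: float = 100.0, rate: float = 1.0, midpoint: float = 5.0) -> float:
    """Logistic curve: slow start, rapid middle, then a stable plateau near `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def exponential(t: float, rate: float = 1.0) -> float:
    """Unbounded exponential growth with the same rate, for comparison."""
    return math.exp(rate * t)


def change_per_step(curve, t: float) -> float:
    """How much the curve moves over one unit of time starting at t."""
    return curve(t + 1.0) - curve(t)


if __name__ == "__main__":
    # Compare how much each curve is still changing at several points in time.
    for t in (2, 5, 10, 15):
        print(t, change_per_step(s_curve, t), change_per_step(exponential, t))
```

On the S-curve, the change per step shrinks toward zero once the midpoint has passed, which corresponds to the stabilization period described above. On the exponential, the change per step only gets larger.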

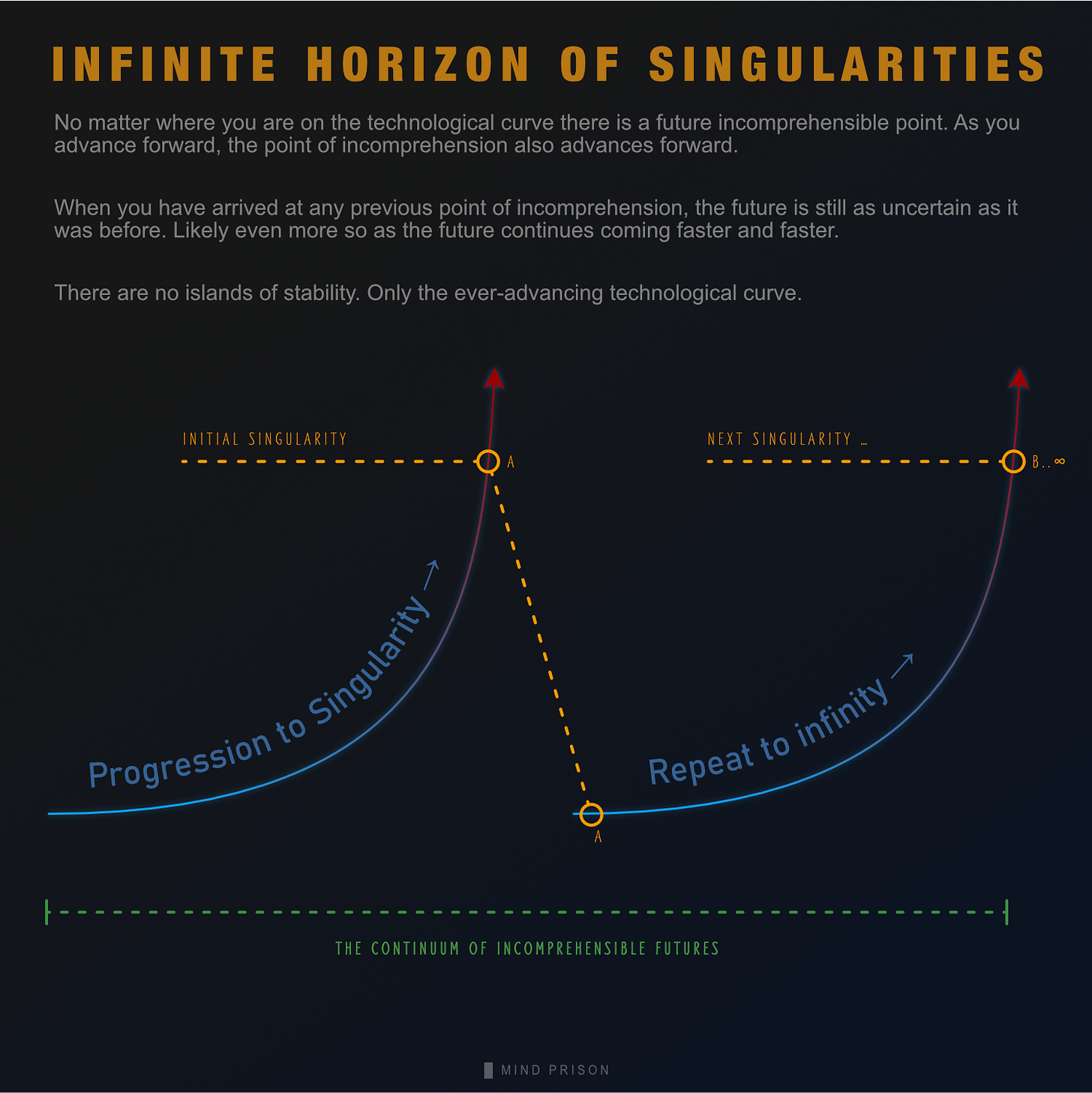

The Infinite Horizon of Singularities

Acceleration Does Not Stop at the Singularity

Nearly everyone incorrectly perceives the AI Singularity event, the moment when AI surpasses human intelligence, as a single moment of time. It is commonly perceived as a transformation event that comes to a conclusion of a new reality. However, that description does not capture the significance of what follows because it is not a single moment of time.

Instead, it is the beginning of an infinite repetition. A continuum of accelerating incomprehensible outcomes. In other words, once you have arrived, then there is another incomprehensible point of vastly superior technology ahead on the curve that we could call Singularity #2. The very same concerns would exist about moving ahead from that point as there are now.

The End Is Always Uncertain and Out-Of-Reach

Once we reach the Singularity, we have not removed the uncertainty about our future. Uncertainty might even increase as we are accelerating faster. No matter where we are on the curve, the curve stretches upward into the future toward the incomprehensible. Racing toward infinite intelligence is to be in a perpetual state of an incomprehensible uncertain future. At no point is there a concept of the transition being complete. No time for an oasis to simply reflect and enjoy the present.

Until the Limits of Physics

Does technological acceleration extend into infinity? No, as at some point we encounter the limits of what is possible, imposed by the limits of physics. As those limits approach, the speed must slow down.

However, acceleration isn’t only about intelligence. We currently have not created any true intelligence, yet people are finding it mostly impossible to keep up with the pace of innovation. We are scaling human intelligence horizontally, which is already resulting in more global technological change than most can keep track of.

Technological Acceleration Paradox

Acceleration Eliminates the Value of Goals You Accelerate Toward

The only possible state of the world if we truly continue progressing exponentially towards and beyond AGI is a state where we are caught between perpetual infinities of desires and despairs. Unlimited capability is infinite obsolescence. Exponential growth is the exponential death of ideas and dreams that no longer have value. The future you perceive is already obsolete.

No one will care about whatever it is you are dreaming about. Whatever you wish to create will be obsolete before you can ever entertain the idea. The future is no longer predictable. Capabilities you cannot foresee will make everything you are working towards irrelevant. AI is both the maker and taker of dreams. All dreams delivered will be reclaimed. This is the technological acceleration paradox. The faster we accelerate towards our dreams, the less value they will have when we arrive.

Technological Acceleration Anxiety

The Consequences of Acceleration to the Stability of Our Lives

Increasingly rapid changes in what matters in life and what has value create ever-increasing levels of uncertainty for nearer and nearer timelines. At some point, the level of uncertainty makes it impossible to plan our lives, as everything you know today may be made irrelevant tomorrow.

Who wants to invest hours, weeks, and years into a new artistic skill that can be replicated instantly and infinitely by AI? Or who wants to invest years of income into a new technology company that may become obsolete in a single moment, without any warning? No time to react or replan: all investment costs at 100% loss.

The Current Pace of Change Is Already Significantly Disruptive

In little more than two years, we have progressed from grotesquely deformed low-res AI-generated images to the current state, where social media is overrun by AI-generated images that are good enough to fool a substantial portion of the population.

Music platforms are receiving thousands of AI-generated tracks per day, making it increasingly difficult for artists to compete against the volume of AI content.

An internet overrun by AI bots is now turning dead internet theory into a reality, as people express increasing frustration with online conversations being invaded by bots and growing uncertainty about whether anyone online is a real person. Bots are completely gaming the system, crowding out authenticity and natural organic growth.

The entire world has changed between the time today’s students entered college and the time they graduated, leaving them wondering if their chosen careers are still viable.

As millions of students prepare to graduate this spring, their chances of landing that crucial first job—the one that kickstarts a career—appear increasingly uncertain.

— AI is breaking entry-level jobs that Gen Z workers need to launch careers, Unusual Whales (6/5/2025)

And we also have headlines like the following:

Young people are using ChatGPT to write their applications; HR is using AI to read them; no one is getting hired.

— The Job Market Is Hell, The Atlantic

Companies Are Racing Toward Their Own Obsolescence

Companies are attempting to race toward AGI as if their company would still be standing if it were to be achieved. AGI does not simply allow them to conveniently eliminate the jobs of their employees, but rather, every company itself is already obsolete and they just don’t know it yet. They are all rushing to build the instrument of their own demise. All companies are simply middleware between you and what you want. AI delivers what you want; that is the end of everything else.

Acceleration Is Merely a New Rat Race

The new rat race is attempting to stay ahead of the technological curve to remain relevant. Asking children what they want to do when they grow up will become meaningless, as no future they could imagine would be relevant by the time they arrive at that point.

The Final Option - Surrender to the Machines

The only option that remains is to surrender our humanity to the machines. It becomes pointless to compete in an unwinnable and unsustainable technological rat race. With extreme capability, an ASI simply becomes a wish-granting machine.

No one will care about you or what you create, as there is nothing you can offer that they cannot simply wish for themselves. Life becomes all about you and the machine, with nothing else mattering. We land in a world dominated by techno-dystopian narcissism.

Addressing false solutions and counterarguments below

Transhumanism Will Not Save You

If we cannot keep up, the proposed solution has been human enhancements. Many might not want to go down the path of becoming part-machine, but for those who do, this wouldn’t solve the problem anyway.

Any device that interfaces with the brain to provide cognitive enhancement will require physical installation - a surgical operation. However, this isn’t a permanent solution. Just like any other technological device, it will have a lifecycle for its relevance, and that period will be rapidly shrinking, just like everything else.

You Still Cannot Keep Up Even With Enhancements

You still face the same dilemmas. Once the device is installed, it may be obsolete before you have even recovered from the procedure. You still can’t keep up: a higher-bandwidth device was just released, and now you are behind again.

If ASI is improving exponentially from its inception, you will never catch up. Upgrading your Commodore 64 with USB-4 interfaces isn’t going to greatly improve what that computer can do. Upgrading humans will be significantly limited compared to the capabilities of ASI, which will have vastly greater resources available.

Your Brain in the Cloud? Humans as Dumb Terminals

An autonomous enhancement separated from the ASI may never be powerful enough to keep up; therefore, cognitive tasks would be outsourced to the ASI through the interface. Of course, this would likely result in giving up individuality as we progress towards becoming nothing but agents of the ASI.

AI Is Unlike All Prior Disruptions

AI Is Not Merely a Disruption, but a Civilization Evolution

One of the most heavily relied-on counterarguments for all AI-related concerns is that all prior technological disruptions from prior innovations simply resolved as a benefit in the end, and such disruptions are just temporary instability until stabilization and adaptation.

AI Is Continuous, Wide Disruption With No Period of Stability

However, this is not a technological revolution or mere innovation. It is accelerating technological evolution. Meaning there is no island of stability on the other side. There is no adapting to change and then we move along for a while. It is continuous.

What makes this disruption different from all others is that AI is not a narrow disruption. It is a disruption for everything, because at its core, it is a machine for the replication of skill, technology, and even thought. A concept that has never existed prior to any other technological disruption.

Intelligence Exists Outside the Classification of Technology

All analogies fail for AI when compared to prior technological innovations because AI is a transcendence from innovation to something entirely new. It is no longer a technology but a different entity: intelligence. No precedents of other disruptions will be applicable.

Technology consists of all things that are tools. Intelligence exists outside this set and is unique. We need to reframe our understanding to comprehend the impact: that intelligence is not a mere tool, as being a tool would imply it is bound to the precise control of humans as a function of utility. Instead, intelligence becomes something else entirely, something that either coexists alongside or even surpasses humanity’s role in the world.

But All Prior Technology Has Been Beneficial

A historical precedent often cited as a counterargument is that no prior technological advancement has led to significant harm. However, this premise is not accurate. While it is true that prior patterns establish high-level trends, they cannot give us certainty about future outcomes. Nonetheless, how true is the premise that technology has not had any serious negative consequences?

The Authoritarian Convergence of Technology

All technology is currently converging towards implementations that assist the authoritarian control of modern society. Social media is a monstrous force shaping society by hands unseen, built on top of non-transparent algorithms that are not even well understood by those who implemented them. Virtually all electronic devices today are also tracking devices for powerful institutions and state governments.

Many don’t perceive these advances as detrimental, as living within a very nice gilded cage can be rather pleasant until it is not. Nonetheless, the manipulation of public political opinions, social engineering, socially induced anxiety via social media, the destruction of privacy and individual thought, and overthrowing of democratic governments are all issues in debate enabled by technological advancements.

AI Simply Accelerates the Current Dystopian Paths

Whatever may be the current direction of society under the present technological capabilities, we can only assume that AI will accelerate society towards the path we currently are traveling, which is toward a techno-dystopia of a populace mesmerized by shiny, glimmering lights.

Some dismiss all warnings as pure sci-fi nonsense. Ironically, they will embrace all sci-fi utopian visions. It would have been nice if we paid more attention to 1984 and Brave New World. Some warnings are worth heeding.

Just Level up Your Skills and Do Something New

“AI will simply empower you to do more” is often the counterargument offered when a current skill is at risk. Leveraging the current disruption to do something new has been a successful strategy in the past, when disruptions were narrow and the adoption of the new technology took lengthy periods of time.

The problem AI introduces is that any pivot you attempt will potentially be so short-lived that it is not worth the investment, and the windows of opportunity to pivot will continue to get shorter and shorter.

If you thought you would escape the AI-generated image apocalypse a year ago by pivoting to video, then you are likely facing the same concerns already again. Maybe you will decide to become a video director, producer, or studio manager. However, none of that is off-limits with true AGI. There are no higher skill plateaus available in a civilization that has achieved AGI. There are no limits, as AI is the consumption of everything you could ever possibly do.

AI Didn’t Take Your Skill, It Took Meaning, Purpose, and Your Humanity

There is a great misunderstanding of the nature and scope of the problem. AI does not just steal art; it steals meaning, purpose, and the value of humanity from everyone. It will temporarily provide these things as an illusion to those who seek them. The journey of life disappears, and all eagerly wait for the machine to simply deliver it to them.

Old Ways Don’t End Because of New Ways?

It is often argued that everything people enjoy today will simply remain alongside advancing technology. The most frequently cited examples are typically something like, "People still play chess today even though computers can outperform them," or "People did not abandon traditional art when digital art emerged."

AI Is the Master Mimic of Everything

However, AI is introducing something new into the equation of new transformative technologies: AI can also be a mimic or replica of anything else that exists.

The only reason simpler activities continue amidst superior technology is that people can still perceive the authentic, true nature of such activities. Most of us recognize and appreciate the journey, talent, effort, and greatness that each unique activity reveals in its participants.

What Happens if We Cannot Tell the Difference?

Consider if you entered a chess tournament and there was no way to ascertain whether you were playing against a human or a machine, or a person with a machine interface within their head; then chess competitions would cease to have meaning or purpose. We still play chess today only because we can still authentically verify what we are competing against.

It is the same for art: if we lose the ability to distinguish between artist and machine, then we also lose the ability to perceive its value and meaning.

With No Authenticity or Discernment, There Is No Longer Meaning

AI threatens the meaning we find in our creations and endeavors as they become indistinguishable and untraceable. For anything we value for its origin, effort, uniqueness, human elements, or ability to connect us to others, those attributes become increasingly difficult to discern.

If there is any hope that these cherished aspects of human endeavor will persist, and I sincerely hope that they do, then we must find a way to preserve authenticity, and it is not yet clear that this will be possible.

The Only Way Out Is Humanity’s End?

In order to keep up with the rate of change, we must become inhuman - something distinctly different from our human nature. Obviously, we can’t enjoy and keep up with such an accelerated rate of advancement; therefore, we must be transformed into something different or relinquish humanity to the historical memory of the machines.

AI Labs See Humanity as Flawed and Wish to Redesign Our World

There are those who desire to replace humanity with something “better”. This idea permeates the institutions working towards building AI. Their vision is not simply making your life better, but a new genesis of life in the image of their own design. They see humanity as flawed and simply in need of a new transformation.

“This will probably give them godlike powers over whoever doesn't control ASI.”

— Daniel Kokotajlo, OpenAI Futures/Governance

They believe they are creating a god. This power would likely mean that either we merge with machines, live in virtual environments like The Matrix, or the machines go on without us as some look at AI as our children or natural evolutionary descendants. Their goal isn’t to save humanity, but to create something superior that explores the universe and all existence.

“We will be the first species ever to design our own descendants. My guess is that we can either be the biological bootloader for digital intelligence and then fade into an evolutionary tree branch, or we can figure out what a successful merge looks like.”

— Sam Altman, CEO OpenAI

Their Utopia Is Your Obsolescence

They are enticing you with utopian dreams, such that you blindly accept your inevitable obsolescence and irrelevance. Human meaning and connection cease to exist, unless you are willing to live in a virtual, simulated reality.

Everyone who wants AI to take over the world does so from the perception that AI will think like them and will create their vision of utopia. Their vision of truth and justice is the correct one, and of course, the AI will perceive it as such.

The Split Society With and Without AI

There is one other possibility: split societies, in which some choose to live without the concern of trying to keep up. The question is whether they will be allowed to do so. As society continues shifting towards authoritarian control over the populace, we have already lost much agency over the direction of our own lives.

How Might All of This Not Happen?

The fundamental assumption we are leading with is that AGI/ASI are possible and will result in the continued exponential acceleration of intelligence and technology. However, this might not be the reality.

Exponential Acceleration Is Not a Certainty

Without exponential acceleration, problems don’t just go away, but they would be delayed. It should be noted that the human race is on an exponential curve even without AI. It is just that the growth factor of that curve potentially increases dramatically with AI. On a long enough timeline, we would still encounter many of the same dilemmas; however, we might at least have a better chance of preparing for them.

AGI Might Not Be Close or Even Achievable

We also might not be on the path towards AGI. Despite many bold claims from the institutions building AI, LLMs suffer from a significant lack of true reasoning ability. Those building LLMs argue that mostly just scaling up the models will bring us true intelligence. This continues to look unlikely. However, LLMs are not the only AI research project in development; there are still active investments in alternate AI architectures.

There Might Not Be a Universal Scaling Law for Intelligence

We don’t know the true mechanisms that create intelligence, and we do not know for certain how far it can scale. There may be no universal scaling for intelligence, in which case runaway acceleration simply does not occur. Each level up in intelligence might require new discoveries that are increasingly harder to accomplish.

Humans Are Surprisingly Adaptable

Humans within any environment are good at sorting things out and finding some meaning and purpose in the most dystopian or desolate environments. We may adapt in some way to the new era of AI, but we may potentially lose sight of what we have lost.

Just as many today cannot conceive of an existence prior to social media, cell phones, and the tech-infused world. They have never known the peace of mind, equanimity, and harmony one could feel in an environment that doesn’t consist of some type of attention-seeking apparatus from which you can rarely escape.

It would at least be wise for us to attempt to more carefully consider the implications of the things we wish for.

The Future Is a Series of Plot Twists

Finally, none of us can be highly confident of any particular outcome. The greatest consistent pattern emerging over the past couple of years of AI development is the consistency of everyone being wrong about predictions. Each model release is still met with polar opposite viewpoints as to whether it is any closer to AGI or an instrumentally overhyped failure.

Rapid Technological Change Is Already Here Without AGI

We are now at the slowest part of the curve, with or without AI. This is the most stable AI and society will ever be. If you can’t keep up now, you never will. What this should also make apparent is that we need far more careful consideration for the choices we make about our future.

There is little conversation about what this all means for society going forward. There is a false perception that if we simply avoid X-risk scenarios, then we end up in utopia, but we have already released a machine that is very capable of facilitating many types of nefarious harms and authoritarian dystopian ambitions.

Warnings for This Perilous Path Are Still Warranted

What is important to point out is that many believe they want exponential acceleration. They want to arrive at the destination they have in mind as fast as possible. What they don’t realize is that what they are really saying is that they want to arrive at that destination and then stop or slow down to experience that reality. But we don’t individually get to choose how that plays out.

Do We Have Enough Wisdom for the Future Decisions We Make?

Do we want a future where every moment is in constant flux? Where each day, each moment is a transformation and death of everything that came before? A future where you stop, look down, smell the rose, and when you look up again, the world is no longer recognizable. The greatest crisis going forward may simply be one of meaning. What if there are no answers? What if more intelligence still cannot answer “why”? Is it possible to gain intelligence while also losing sanity?

Have we thought long enough about the future to be certain that we want the future we think we want?

Mind Prison is an oasis for human thought, attempting to survive amidst the dead internet. I typically spend hours to days on articles, including creating the illustrations for each.


No compass through the dark exists without hope of reaching the other side and the belief that it matters …

Updates:

2025-09-08 - Major edits for improved readability and expansion of topic content. Updated illustrations.

There’s a problem with your argument: the speed of light puts a hard ceiling on the exponential growth curve.

As an illustration of this, imagine I have an AGI and I add more compute to it to make it smarter. To simplify, let’s imagine that it’s a one-dimensional thing: we start at 0 and compute units sit at integer positions. Eventually we get to a position that’s so far from 0 that the time it takes for the signal to get back to 0 is greater than the time it takes to just compute the result at position 0. For this reason the ASI *can’t* scale forever; it has to plateau. We can scale in all three dimensions, but the distance from one end to the other is still a constraint. We can make the compute units smaller and faster, but there’s a limit.

We actually can’t make CPUs with terahertz clock speeds because that would require either transistors smaller than atoms or FTL. We’re near the end of improvement for digital computing, period. AGI only has a shot because tensor cores essentially emulate an analogue computer, which still has a ton of headroom to grow.

Technology is going to hit a hard ceiling, probably sometime this century, where it can’t get any better because it would need to violate lightspeed to do so.
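The one-dimensional illustration in the comment above can be sketched as follows. This is a minimal model under the commenter’s simplifications; the unit spacing, the local compute time, and the function names are hypothetical values chosen for illustration.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by definition of the metre


def return_delay_s(position: int, spacing_m: float) -> float:
    """Minimum time for a result at integer `position` to travel back to position 0."""
    return position * spacing_m / SPEED_OF_LIGHT_M_PER_S


def max_useful_position(spacing_m: float, local_compute_s: float) -> int:
    """Farthest position whose return delay does not exceed computing at position 0."""
    return math.floor(local_compute_s * SPEED_OF_LIGHT_M_PER_S / spacing_m)


if __name__ == "__main__":
    # Hypothetical numbers: units 1 m apart, 1 microsecond to compute locally.
    print(max_useful_position(spacing_m=1.0, local_compute_s=1e-6))
```

Shrinking `spacing_m` or accepting a longer `local_compute_s` pushes the limit outward, but for any fixed values there is a farthest position beyond which adding units no longer helps, which is the plateau the comment describes.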

Dakara, I would ask you to take some time to explore the Luddites and their response to the first industrial revolution. I am convinced you could write something compelling. Their enemy was innovation: business entrepreneurs and governments that embraced a shiny and deceptive promise of "new and better", of cheaper, faster, better, more consistent textiles. I think we are there again; this time most of us are or soon will be the Luddites, facing, as you discussed, a point of inflection. Where the Luddites went wrong was turning their realizations into a binary "we win/they win" scenario, which rapidly put them into jeopardy, criminal behaviour, and the destruction of their movement. I think there are some battles that cannot be won, but potentially they could be channeled into a new direction if Luddites were willing to learn to play chess with the powers that be.