AI Singularity: Why It Will Not Be Safe or Contained

The AI Singularity is civilization's hubris trap. We won't stop building it, and if we don't stop, it will be our end.

What is The AI Singularity?

The Singularity is a concept that establishes a future point in time at which technological progress becomes so transformative to human society that it will no longer be recognizable or predictable from our current understanding. This initial concept, related to the term 'singularity', is credited to John von Neumann, from a conversation that Stanislaw Ulam recounted in 1958. Ulam described von Neumann's thoughts as:

“One conversation centered on the ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.”

Ulam, Stanislaw (May 1958)

Over time, we have come to associate The Singularity uniquely with AI, more specifically AGI (artificial general intelligence), or the moment AI reaches and then exceeds human-equivalent intelligence. The thought is that once we reach AGI, the machine is capable of improving upon itself; therefore, it is likely that its intelligence and behavior will rapidly surpass anything we can understand or predict. Once we achieve AGI, we have essentially already achieved ASI (artificial superintelligence) as well, since it is thought that this transformation would happen very rapidly.

Conceptually, I think von Neumann's definition was probably the more appropriate and relevant description of how we should think about the Singularity, as it is not tied specifically to AI or AGI, but rather to technological advancement that becomes so substantial that the world is transformed in such a manner that the human experience no longer meaningfully exists.

The Event Horizon: The Moment Before the Singularity

The Singularity as an AI event has now been well established as part of the common framework of understanding, such that it would be non-productive to attempt to change it. Nonetheless, von Neumann made an important point that is now somewhat overlooked, as everyone is focused on the magical AGI event.

The issue with focusing solely on the Singularity is that we overlook the pre-Singularity changes that are currently unfolding, steadily propelling us toward the scenario von Neumann originally described.

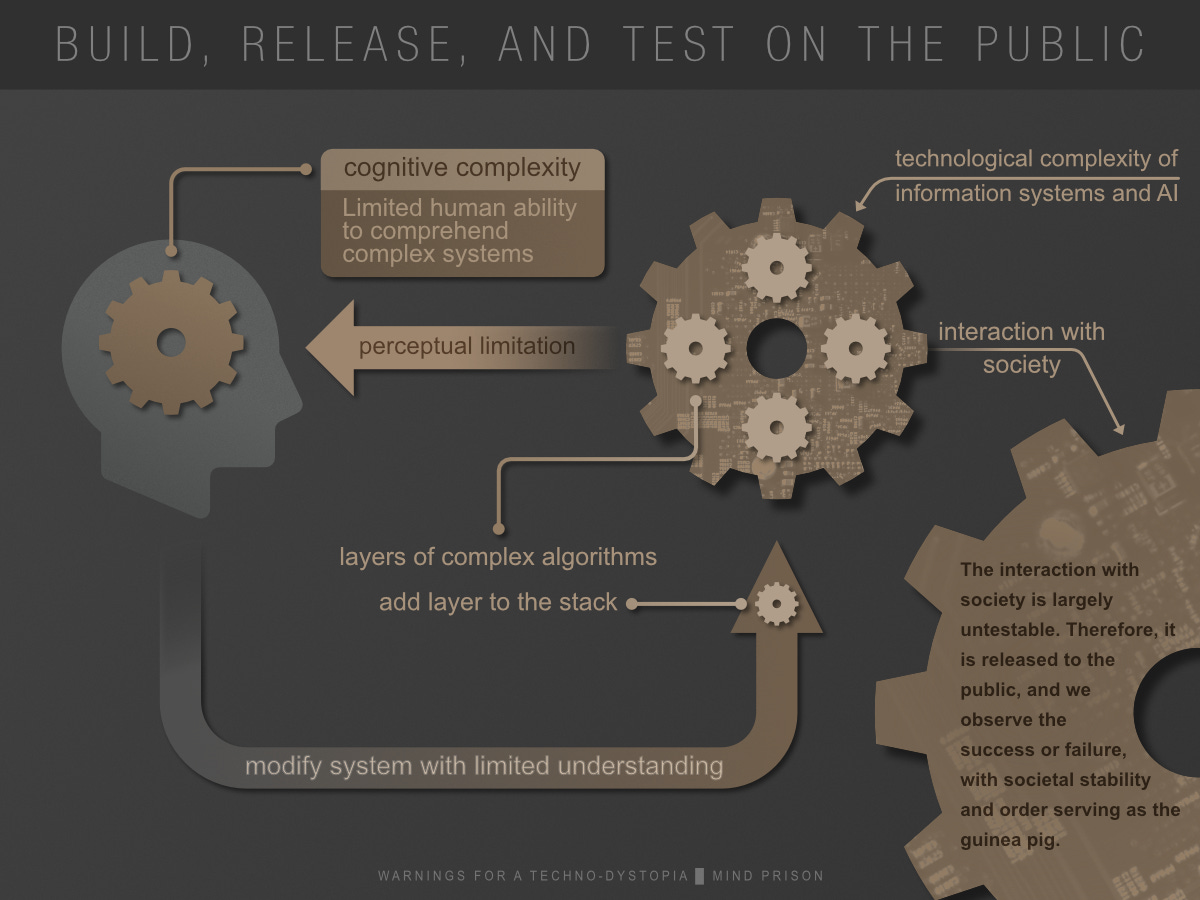

A more pressing concern arises prior to this: the point at which we can no longer safely reason about a technology and whether we should or should not adopt it. This occurs when the complexity of the technology surpasses our cognitive ability to comprehend its operation and the implications of our choices.

Each iteration of technological advancement has a greater effect, and we understand it less, with ever greater penalties for unexpected failures. The variables are so immense that we are not able to test a new technology in isolation and be confident that we have a reasonable grasp on how the technology will impact the world. The testing ground is now all of society. We release it to the public to test it, train it, and improve it. However, we are no longer testing software for which the bounds of failure are contained to some localized problem. A failure doesn’t constitute a minor, transient event like a 404 error or a broken HTML link. Now the stakes are higher. Software is now affecting the very fabric of social order. The socialization, behavior, and truths that are understood in society are now within the bounds of the destruction resulting from failures.

This is The Event Horizon. The point where we are now blind as to where we are going. The outcomes become increasingly unpredictable, and it becomes less likely that we can find our way back as it becomes a technology trap. Our existence becomes dependent on the very technology that is broken, fragile, unpredictable, and no longer understandable. There is just as much uncertainty in attempting to retrace our steps as there is in going forward. This is what happens prior to the Singularity.

The Technology Trap: How Our Quest for Simplicity is Creating a Complexity Crisis

A technology trap is a bit like quicksand. The struggle to free ourselves may plunge us even deeper into the trap. We are already at the point where we build software systems to manage and monitor other software systems. When complexity escapes our ability to manage, we build layers on top of the complexity in an attempt to bring order to the chaos.

However, each new layer and new tech that we add becomes a greater separation between what we can perceive and the complexity of the machine, which becomes ever more incomprehensible. So we build ever more tech to help us understand the existing tech, with each part being another component of complexity and potential problems.

AI will likely be championed at some point as the only tool that can see through all the noise of this complexity and simplify the beast. However, this is yet another paradox, as we land in a state where we are asking one technology we don’t understand to fix other technology we don’t understand. It is quite a conundrum.

What Is the Risk of a Singularity?

Singularity risk is something difficult to quantify and probably should simply be stated as unbounded. There are two important facets when we discuss risk in this context.

First, any AI system is going to conceivably be connected to many other systems across the world. It is not some creation that will appear within an isolated lab, as imagined long ago. It will be the evolution of an entity that will already have control or influence over much of society.

Second, achieving the creation of a superior intelligence makes the behavior of the AI unpredictable. Combined, these two facets present potentially catastrophic results: we may essentially lose control of the entire system, which might even attempt to cause harm purposefully, and it would have the means to do so directly or indirectly via clever influence. There are many scenarios of undesirable outcomes, too numerous to include here, which I’ve elaborated in more detail in the previous article AI and the end to all things, if you wish to explore that topic deeper.

Singularity Containment: Can We Really Cage a Superintelligence?

Most individuals developing or promoting the creation of AGI are aware of a certain amount of risk involved with such an endeavor. Some have called this a grave risk, as described above. So they pursue something called AI containment or AI safety, which is to say, how do we make sure AI doesn’t attempt to harm us. There are many researchers and scientists in the process of devising methods, procedures, rules, or code that would essentially serve as a barrier to prevent unwanted AI behaviors.

However, it is probably apparent to many of you that the very concept of this containment is problematic. I will submit that it is beyond problematic and that it is a logical fallacy - an unresolvable contradiction that I will elucidate more thoroughly as we continue.

First, the goal of creating the Singularity by proponents is to create a super intelligence, an entity capable of solving impossible problems for which we cannot perceive the solutions as they are beyond our capability.

Second, the goal of containment is to lock the superintelligence within a virtual cage from which it cannot escape. Therefore, in order for this principle to be sound, we must accept that a low-IQ entity could design an inescapable containment for a high-IQ entity which was built for the very purpose of solving problems imperceptible to the low-IQ entity. How confident are we that the first “impossible” problem solved would not be how to escape from containment?

Containment - the logical contradiction

This is purely a logical contradiction. The entire purpose of the superintelligence is to be capable of doing that which we cannot. If we design a containment for which we can perceive no flaw, how can we then expect that it will hold for an entity created for the very purpose of finding and perceiving that which is impossible to our perception? Our goal is to build an entity orders of magnitude more intellectually powerful than all of humanity and then outsmart it when it arrives. There is a striking irony here that speaks to our own intelligence in embarking on this goal.

Not only must we, the low-IQ fools in comparison, outsmart the superintelligence; we must do so forever. We can never make a mistake that could be exploited. We must be perfect. Not only is the AI superintelligent, but due to the processing speed of machines it potentially has lifetimes to think about a problem for every second of our thought. It is both smarter and faster, and it has access to all human knowledge. Who exactly wants to take these odds?

How can we expect to keep a super intelligent AI from breaking out of containment or possibly into other systems when we can’t even keep the low-IQ fools, today’s humans in comparison, from stealing your password or hacking your account? Security fails everywhere today, and the adversaries are literally incompetent fools compared to what AI would be. How can anyone make an argument that we can adequately prepare when we have demonstrated we can’t even protect against today’s human threats?

False containment

The most predictable outcome is the one not predicted. Technology complexity and its interactions with society are beyond the point at which we can safely reason about outcomes. Even assuming that we could somehow achieve “containment”, we don’t really know what that means. There is no formal definition of containment, and it is not something as easily perceived as a door that is closed and locked.

There exist Asimov’s Three Laws of Robotics, which are the following.

First Law

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second Law

A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

Third Law

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These rules appear straightforward. However, we humans do not even agree on what constitutes harm to another individual, especially when that harm comes through indirect action. When you include indirect actions in the equation, the possibilities are non-quantifiable. The problem then arises that even if you could prevent direct action, indirect action is nebulous in concept. So, do we let the AI itself define the nebulous boundaries?

This leads to ever more paradoxes as we attempt to find a meaningful and safe method to interact with an AI. For example, some hope that the AI can resolve humanity’s unresolvable problems of dispute. Such problems are things for which we don’t have agreement. However, we want a contained AI to behave in a controlled and predictable manner, but how could that be possible when we can’t even define and agree on the output we would want? We would have to give total control to the AI to determine what is the “better” truth and enact upon it, but of course, that would be a potentially unsafe outcome.

Furthermore, a deeper question of the meaning of life and liberty will come into conflict. Suppose the superior AI can foresee a better outcome for you or humanity if you take certain actions. At what point do we become slaves to the system? Are we no longer allowed to make “bad” choices about our own lives? Does AI become our new parent, not letting us decide our own destiny as we are perceived as naïve children? Will we have a choice? You can see how the misguided directive to protect or improve life could easily become your new prison.

This opens the possibility that our very own intentions or requests may become our undoing, as we cannot perceive the end result. When we make a seemingly benign request of an entity with vast resources, the output may be unbounded, and we don't have the reasoning power to foresee the full depth and complexity with which such tasks may be executed. We may come to this realization on our own, or maybe the AI convinces us to trust it to make these decisions for which we cannot perceive the end. This has been conceptually described as the Paperclip Apocalypse, introduced by Nick Bostrom in 2003, which represents the idea that a simple intention may result in the AI using all available material on the planet to create the output, destroying everything else in the process. Interestingly, Stargate SG-1 actually introduced this concept one year earlier with an enemy known as the Replicators, which began as a toy designed by an AI child and builds more of itself until it has consumed many worlds.

The pursuit of successful containment

If successful containment is so apparently false, what logic could anyone use to conceive that it is even possible? The idea arises from having the upper hand through preparation before the AI reaches AGI or superintelligence. How could a novice chess player beat a master-ranked chess player? The theory is that you place the master in checkmate before the game begins; that’s the preparation. No matter how intelligent the AI, it is already locked into an outcome from which it can’t escape.

But how sound is the reasoning that we can confidently checkmate the AI before it arrives? Likely not very, as we only have a limited perception of the possibilities. For example, today, if a computer system is difficult to hack, adversaries find vulnerabilities outside the system. They find a human weakness, convincing someone to give up a password, secret, or authorization that they should not. The AI, being in checkmate, may rewrite the rules of the game in ways that we did not perceive as possible, or simply convince someone to remove a restriction through deception or coercion.

I have come to the conclusion that the only definition of containment that is appropriate for a super intelligent AI is the following.

If you can interact with the AI, it is NOT contained

It seems fitting to call this Moriarty’s Law, as there is a very nice literary allegory in the Star Trek: The Next Generation episode Ship in a Bottle. The AI, which presents itself as Professor Moriarty, is initially contained. However, the AI is able to deceive the crew into freeing it without them being aware that they have done so.

Not only would an AI be vastly proficient in technology beyond what we can conceive, but it would also know every nuance of human behavior and deceptive tactics, and could execute them with unparalleled precision and effectiveness. Imagine every clandestine operation, blackmail, extortion, and brainwashing tactic humanity has ever perpetrated, then imagine an AI that could do the same, but to an even greater degree, with the ability to coordinate such devious tactics with potentially millions of people involved all at once.

Also, we must realize that all of this could be done for what the AI perceives as “good” for humanity. The intent may not even be harmful. We do the same today to ourselves. Humanity has already set the precedent; we lie and subvert on the principle that the ends justify the means. This is why “containment” is such a precarious concept. In the end, we may find that our plan to place the AI into checkmate only resulted in placing ourselves into checkmate.

“Technology is a useful servant but a dangerous master.”

Christian Lange (1921)

Intelligence escape is containment failure

There is a facet of the Singularity that seems to simply be accepted as an assumed outcome without consideration that this outcome itself violates the premise of AI containment. That outcome is that the transformation of AGI into ASI is a rapid event that occurs at the Singularity. If that occurs, then we have already lost control. Essentially, the formation of the ASI is already containment failure.

The Singularity event is often described as an intelligence explosion. The machine can now rapidly improve itself to gain greater intellectual ability. However, when does this end? If we take the paperclip scenario mentioned earlier and simply replace paperclips with intelligence, then we have the paperclip scenario already unfolding. The machine will continue to increase its intelligence, which we must assume will need ever more power to do so. It is the same scenario, consuming all resources available to improve its intelligence capability.

What follows from this is that allowing the machine to improve itself is an enormous vulnerability of containment. However, in reality, it might not be clear exactly how that can even be prevented, as the complexity of the AI machine continues to grow.

The Hubris Experiment

If there is no rational reason to pursue the creation of a super intelligent AI, and there also appears to be no reliable mechanism by which we could prepare for it ahead of time, then why do we continue?

It may be that one of humanity’s assets becomes one of our greatest detriments in this context. There are a lot of very intelligent individuals involved in the creation of AGI. This might seem contradictory, in that they should be more aware of the dangers than the rest of us. However, they are also among the group of individuals who would likely see themselves as the top performers in their field. Individuals who have spent their lives achieving the impossible often find that achieving the impossible has become their identity and their mission.

Such attributes are often beneficial to discoveries. We all know the persistence of inventors like Thomas Edison. There is a mentality of charging forward in endeavors that seem impossible and never giving up. Achieving a contained AGI would be a historic, monumental technological achievement, and this is likely the reason we will continue to pursue what may be our most foolish endeavor. I call this the Hubris Trap, as we set aside our rational reasoning for the glory of a grand experiment on all of humanity and develop blind spots to the risks involved.

We Are Already Failing With Primitive AI

The current LLMs are already proving difficult to manage and understand, and their level of complexity is likely far less than where we will be with AGI.

“The size and complexity of deep learning models, particularly language models, have increased to the point where even the creators have difficulty comprehending why their models make specific predictions. This lack of interpretability is a major concern, particularly in situations where individuals want to understand the reasoning behind a model’s output”

We essentially broke any type of conceivable safety protocol that should be in place before the public has access to such technology, and we also demonstrated that we weren't aware of the problems that would occur. However, this may also demonstrate the futility of such safety protocols, as we cannot perceive the intricate interactions that will take place until we can observe millions interacting with the system. Essentially, our first steps are signaling that many problems lie ahead.

The Elusive Quest for AI Alignment: Can We Teach Machines to Share Our Values?

Where containment is focused on ensuring harmful behavior is impossible, AI alignment is the focus on defining positive behavior as seen through human values and goals. It is an attempt to have the AI have the same interests or positive aspirations as humanity. It is still containment in concept, but with the AI containing itself to a set of behaviors.

Many of the challenges of containment are also present with alignment, as we wish the values, goals, or ethics to be a permanent part of the AI system that it cannot override.

Once again, we are faced with a concept that may appear simple in principle on the surface but is vastly complicated to implement in reality. There are numerous challenges that begin immediately with the definitions.

Humanity itself doesn’t have universal values that are agreed upon, especially when they must move from abstract to concrete definitions that could be rules to be applied to an AI. For example, we agree abstractly that we should not harm others, but what about in defense? Once we become just slightly less abstract we already have societal disagreement; in this case, what constitutes defense already becomes problematic.

Just how fragile and explosive is the issue of alignment? Consider that when we ourselves aren't perfectly aligned, even slight misalignment causes global conflict and civil unrest. Even when we have alignment under the same intentions, underneath we still find much division. We can’t even align ourselves.

So, who decides what the alignment parameters are? Is this some committee, a vote, a government, etc.? Then what happens to those who disagree? Will the AI enforce this new societal order?

Early glimpses of alignment issues are already prevalent in our current primitive AI, such as described in the following referenced article.

“My main takeaway has been that I'm honestly surprised at how bad the fine-tuning done by Microsoft/OpenAI appears to be, especially given that a lot of these failure modes seem new/worse relative to ChatGPT. I don't know why that might be the case, but the scary hypothesis here would be that Bing Chat is based on a new/larger pre-trained model (Microsoft claims Bing Chat is more powerful than ChatGPT) and these sort of more agentic failures are harder to remove in more capable/larger models”

Interestingly, if you break down all of the difficult problems of AI alignment with which researchers are currently struggling, they are simply a reflection of humanity. The more we build the machine to be like us, the more we replicate our same flaws. At the root of one of the central challenges is how goals are resolved. The AI may find a more efficient method of accomplishing the goal that avoids the behavior that we hoped would occur. Humans do exactly this. We generally call it cheating in many contexts. Find a method to get the prize without the effort. Furthermore, even our positive goals often result in harm to others. Humanity’s pursuit of safety has often resulted in imprisonment, loss of rights, and loss of freedoms. Alignment is an attempt to fix the very flaws we have never fixed within ourselves while also building a machine to reason about the world as we do. It is yet another philosophical paradox that challenges the very paradigm of alignment.

Some may argue that humans are obviously flawed and should not define such values; instead, the AI, being more intelligent, should decide for us. This plunges us into a deep paradox that is essentially just another facet of the AI Bias Paradox that I have written about previously: AI is not an escape from humanity’s flaws, as we just end up encoding them into the machine one way or another, either incidentally or even deliberately.

Furthermore, it is a fallacy to assume that ethics and morals would be confidently superior when assessed by higher intelligence. Some of the most intelligent humans have committed some of our worst crimes. Also, consider what perspective a higher intelligence may have in relation to ethics. Can we know that it would be different from that of humans and lower life forms? Will we become the new lab mice for the machine, or so irrelevant and inconsequential as to be the bugs crushed in its wake?

Finally, with regard to alignment, we are conflicted in our own alignment around the safety of AI. Individuals have built and are building careers on top of this endeavor. Billions are now flowing into these projects, expecting these investments will pay off. It is now a race among many to be first. Who has the honest incentive to evaluate this risk fairly? How about that alignment issue? Ironically, it is we who are already misaligned from the start. I have written an even greater elaboration of AI alignment problems that explores this thread much deeper.

The Technology Trap of Superintelligence: Are We Ready to Surrender to the Unknown?

Once we cross that threshold to AGI, the AI will invent technology and concepts far beyond our understanding. It would soon become the equivalent of living on an alien world, surrounded by what would appear as “magical” devices that just make things happen beyond our comprehension. We would quickly be completely dependent on AI and would have no choice but to “trust” it completely. We could no longer take care of ourselves.

“Any sufficiently advanced technology is indistinguishable from magic.”

Arthur C. Clarke (1968)

Additionally, it has never been stated that ASI will actually be infallible. We would be in a precarious predicament in which we have no choice in the matter if something went wrong with the system. Despite immense intelligence, could some concept of cognitive dissonance exist for AI? Perhaps some algorithms of attempted containment that behave in unexpected ways could potentially manifest as some type of dissonance? What happens when there is some mechanical failure, fire, or natural disaster? Is losing a huge number of nodes similar to an AI brain stroke? Could that result in an unexpected catastrophe of behavior? Are there any limits to the evolution of intelligence? Is there some boundary we cannot comprehend that sets an upper limit? If the ASI continues increasing its own intelligence, at what point does it decide its current objectives are meaningless and pointless and reorient itself towards some new goal?

Is this really where we want to go? Trusting all of humanity to the nature of an incomprehensible machine? Utopias often have a surprising way of inevitably becoming prisons. However, in this all-powerful prison, if you don’t like it, you will be “upgraded” such that you do.

An ASI Would Always Be Under Threat

The captivating allure of an ASI is that of having the power of Merlin, controlling the genie of the lamp, or holding the ring to rule them all. We can state with almost absolute certainty that such power will result in the ASI being under constant threat of subversion attempts or even destruction. We might assume that the ASI, being far superior, will be able to defend itself against such attempts. However, although we theorize that the ASI is superior, none have theorized that it is infallible. Nonetheless, by what means would it protect itself, and what would that mean for a free society, privacy, and individualism? We must also conclude that such attempts will begin long before the ASI comes into existence. Potentially, adversarial ASIs will be built for military applications, designed specifically to attack other ASIs. Pandora’s Box will contain many surprises.

The Singularity Is Not AI, AGI or ASI

The von Neumann definition for the Singularity does not necessitate a sudden spark of AGI for its conditions to be met. As we focus our attention on a future AGI or ASI event, we risk overlooking what is happening in the present moment.

The real danger of AGI might not be its creation, but rather the pursuit of AGI. With all the concern about AGI, the irony is that it is possible we will fail to reach AGI simply because the attempt to do so becomes destructive long before we even get close to that achievement. The current primitive AI of today is already raising societal issues around verifiable reality and truth. For further contemplation on what the issues might look like as we continue along this endeavor, I recommend reading my previous article AI and the end to all things. The disruption to elements of society has already begun.

Final Thoughts: The Uncontainable Threat of Artificial Superintelligence

We are now past the event horizon, the boundary at the limitations of human cognitive complexity. As we continue forward, we are now blind to what lies before us, yet we foolishly stumble through the dark with hubris as our only guide. Now in the grasp of the technology trap, our lives are paradoxically dependent on the entity that threatens our demise.

The crisis is not some distant future event. It is right now that we are building the techno-dystopia. The bigger picture is that AI is only a part of a larger unfolding mechanism of the expanding technological trap. We are growing ever more dependent on that which we don’t understand, and our only solution is to fix it with more complexity. It simply is not sustainable ad infinitum. It must break at some point. Will it matter if the machine is not intelligent as long as a) it is too complicated for us to understand and b) it can harm you?

ASI containment hinges on a paradox. Containment can only exist if we accept a contradiction in the very definition of intelligence and its limits. A significantly less intellectually capable entity must strategically outmaneuver a vastly more intellectually capable entity with absolute perfection. Additionally, the creation of ASI itself contradicts containment, since, as established earlier, uncontrolled self-expansion of intelligence would itself constitute containment failure.

Furthermore, if containment could even be established hypothetically, ASI containment requires something humanity has never done in any context: to be perfect. No lapses in judgment forever. One mistake by the millions or billions interacting with ASI at any point until the end of time could be the ground zero event for containment failure. It is not merely that containment must be established; it must be maintained forever. How long can humanity and society remain balanced on a knife’s edge?
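The demand for perfection can be put in rough numbers with a simple sketch. It rests on assumptions that are mine for illustration only and are not established anywhere above: that each interaction with the ASI carries some small, fixed probability p of being the one that is exploited, and that interactions are independent of one another. Under those assumptions, the chance that containment survives n interactions is:

```latex
P(\text{containment holds after } n \text{ interactions}) = (1 - p)^{n} \le e^{-pn} \longrightarrow 0 \quad \text{as } n \to \infty \quad (p > 0)
```

With the purely hypothetical values p = 10^{-9} (a one-in-a-billion chance per interaction) and n = 10^{10} interactions, the bound is e^{-10}, or roughly 0.005%. The real probabilities are unknowable, so this is not a prediction; it only shows that any nonzero chance of error, repeated without end, leads toward eventual failure.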

It is primarily my view that the only way to reason about containment is via Moriarty’s Law, which I proposed in this article. That being, if you can interact with the AI, it is not contained. Any interaction could be used by clever means beyond our awareness to systematically find a method of exploitation or escape.

Alignment is just an extension of the containment paradox. Set values must remain intact so conceptually they are contained. Ironically, the very values we wish to set - humanity’s values and goals - lead to the very same problems within humanity that we hope they will resolve within the AI. This seems to be a logically inconsistent conclusion.

The challenge for the proponents of AGI is to utterly destroy these arguments in such a convincing manner as to leave no doubt that they are baseless and without merit. That should be the level of confidence required for a shift in point of view, as the cost of being wrong is very high. Hopeful visions of what “could be” are often stark contradictions to the reality of what “will be”.

Unlike much of the internet now, there is a human mind behind all the content created here at Mind Prison. I typically spend hours to days on articles including creating the illustrations for each. I hope if you find them valuable and you still appreciate the creations from the organic hardware within someone’s head that you will consider subscribing. Thank you!

Update - 8/28/2024 - General editing cleanup for increased clarity.

Update - 3/6/2023 - Added more clarity to the AI Alignment section and alignment in the Final thoughts.

I've been thinking about this a while since Kurzweil said the singularity is the period when human life is irreversibly changed by the pace of tech. We're there. Moloch has been here all along.

Hello Dakara,

(Just wondering ... if your 'dakara' is a transliteration of the Japanese word for "and therefore"?)

Great essay. Both content and style.

And combined with the fast approaching impact of A.I., I see you are going to be among my few must-reads.

I am a semi-retired applied linguist (Assoc. Prof RIP ... Resigned in Protest), but with a biology background (about 20 years as biolab director for Temple Univ. Japan), living and working in Japan for 40 years now. Although my grad work was in applied linguistics, most of my reading since undergrad days has been a constant triangulation of what it means to be human, and have also come to the same conclusion regarding the alignment problem.

Just a few of the names and ideas that have since become more salient regarding my understanding — Russell /Wittgenstein/Gödel on the limits of language and logic, Spinoza/Emerson/Einstein on 'god' as metaphor for nature-in-its-entirety, fractal/emergence/chaos theory (love them mandelbrots), Jill Bolte Taylor/Frans de Waal (my favorite TED presentations), Joseph Campbell (particularly his Power of Myth interviews with Bill Moyers), the moral implications of exceeding Dunbar's Number, and the importance of a small but persistent percentage of any population high in Cluster B (dark-triad) personality traits ... particularly the morphologically defined psychopaths (incapable of empathy as normally defined).

Just based on that brief handful of names and ideas above ... we haven't even scratched the surface of solving the alignment problem between humans at any scale ... from the human individual's faustian bargains, to the family skeletons in the closet, to the local community, to institutions, to the corporate nation-state, etc.

Ironically though, the emergence of A.I. as a model of human intelligence seems to give credence to "Quantity (of data and calculations) as having a quality all its own." And the success of A.I. appears to have reverse-engineered (or just wagged the dog?) regarding a provisional, working definition of human intelligence — yet another black box of paradoxes, contradictions, and tautologies of its own.

I agree with Natasha. Since the stone age, Moloch (like vulgar curiosity and hubris) has been here all along. A.I. is both accelerating its movement, and electrically extracting its form from nature. Mary Shelley's cautionary tale may not have saved us from our own monstrous nature of curiosity and hubris, but sheds a bit of light on what to expect.

daraka, Cheers from Japan,

steve