The Cartesian Crisis: AI and the Inability to Know What Is Real | Dead Internet and the Post Truth Society

The collapse of institutional trust and the opaque wall of AI-manufactured realities

Civilization stands on a precipice, about to lose any individual's ability to discern reality. The foundations of truth are being ripped apart by various entities, leaving society adrift with no illuminated landmark by which to navigate and save itself from drowning in a sea of illusions.

What is The Cartesian Crisis?

The Cartesian Crisis arises when civilization reaches a state in which individuals can no longer be certain of any information. It is existence in a world devoid of verifiable truth or reality. The ability to discern reality is a fundamental necessity for the mind to function sanely. Consequently, this scenario presumes an outcome in which the world descends into abject disorder, as truth and reason fall and the walls between the sane and the insane no longer exist.

What Is the Instrumental Cause of the Cartesian Crisis?

This crisis arises from a combination of sources, with no single primary cause from which all the problems manifest.

It includes the failures of knowledge institutions that have succumbed to goals misaligned with their purported missions, resulting in guidance poisoned by propaganda and ideology.

Nearly all modes of communication now interfere with or distort the exchange of information between individuals, algorithmically suppressing or elevating narratives and views out of proportion to how widely they are legitimately held.

There is a cultural shift in which truth and reality are now purposely rejected. Attention addiction, induced on a wide scale by social media, has incentivized fantastical fabrications for the sole purpose of viral social engagement.

History is being erased as nearly 40% of all active web pages from 2013 no longer exist. Delete the past and you can control the truth of the present. As warned by Brian Roemmele, we are the amnesia generation.

Bot farms operated by state governments and powerful corporations socially engineer political objectives and artificially dominate markets, hidden behind the guise of organic online movements and interests.

Finally, all of these mechanisms, which interrupt civilization's ability to rationally reason about the world, are going to become vastly more effective in doing so due to the advancements of artificial intelligence that will eviscerate whatever remains of our ability to discern truth and reality.

Welcome to the Cartesian Crisis. Credit to Bret Weinstein, who initially coined the term to describe the disparate societal mechanisms that are coming together in the destruction of truth and reason.

René Descartes and the Quest to Understand

Descartes was known to ponder the limits of what we can know for certain. He set out to find a foundational ground truth. To do this, Descartes began by doubting everything he thought he knew. Starting over without certainty about anything, he asked what can be proven true.

His basic strategy was to consider false any belief that falls prey to even the slightest doubt. This “hyperbolic doubt” then serves to clear the way for what Descartes considers to be an unprejudiced search for the truth. This clearing of his previously held beliefs then puts him at an epistemological ground-zero. From here Descartes sets out to find something that lies beyond all doubt.

Descartes’s method of doubt, now referred to as Cartesian doubt, helped lay some of the foundations for the development of modern philosophical and scientific inquiry. If there were no longer any process or method that could help us understand truth, Descartes would indeed find this to be a crisis, as this was a central concern of his life’s work.

Post Truth Civilization: A Dystopian Society Unable to Discern Reality

What happens to civilization when nobody is able to perceive the difference between truth and fiction, when no amount of research, investigation, or critical thinking can pierce the veil of uncertainty, and when there are no institutions, experts, or sources of knowledge that can be trusted?

The authority of every governing body would lose its legitimacy. A descent into bedlam, continuous unrest, suspicion, and paranoia among the citizens would ensue. But that is only if people are aware of what is happening. What if they don’t?

Advances in AI might make that second scenario possible: artificially constructed “truths” so convincing that they are never questioned. So long as the most powerful institutions maintain dominance and control over the dissemination of such material, they control all public thought.

In such a case, it might not even be possible for hidden dissidents to exist. The pervasive, all-powerful spying apparatus, enhanced by AI, never sleeps. It continuously watches every action and thought.

No matter what good you believe AI will bring to humanity, an unverifiable truth and reality is an untenable existence. It is the descent into madness. Those who cannot distinguish reality from fiction have often been relegated to the confines of the asylum. Sanity won't survive if we enter the post-truth era with no solutions.

The Dystopian Response to The Cartesian Crisis

So, what will the response to the crisis be? Unfortunately, it is likely to be equally Orwellian, placing us between two highly undesirable outcomes: the bedlam of a world gone insane, or the thought-controlled, monitored society envisioned in 1984.

The only way out of the untenable position of trusting nothing is to cryptographically track all information back to its source. This is precisely the solution now in development, which promises to save us from the dystopian outcome of insanity and instead provide us the “utopian” world of total state control of information and the evisceration of your privacy.

C2PA, the Coalition for Content Provenance and Authenticity, publishes a specification that defines the use of digital signatures and metadata to track the creation of all digital content, as well as its distribution. Starting at the hardware, such as your camera or phone, a digital signature would be embedded to certify the source of the image or video.

Web browsers or other applications that view the data can then verify that the content has not been digitally altered since its creation, or if it is altered, there will be a history of edits in an audit trail. However, this capability is inseparable from authoritarian purposes.
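The sign-then-verify flow described above can be sketched in a few lines of Python. This is a simplified, hypothetical illustration, not the C2PA format or any C2PA library: real C2PA manifests rely on public-key certificates and a standardized embedded manifest, whereas this sketch assumes a single shared secret and substitutes HMAC-SHA256 from Python's standard library. The names `DEVICE_KEY`, `sign_capture`, `record_edit`, and `verify_chain` are invented for the example. Each record is signed and linked to the previous record's signature, so every edit leaves an entry in the audit trail and any tampering breaks verification.

```python
import hashlib
import hmac
import json

# HYPOTHETICAL shared secret standing in for a device's signing key.
# Real C2PA uses public-key certificates, not a shared HMAC secret.
DEVICE_KEY = b"hypothetical-device-secret"


def _digest(data: bytes) -> str:
    """SHA-256 fingerprint of the content bytes."""
    return hashlib.sha256(data).hexdigest()


def _sign(record: dict) -> str:
    """HMAC over a canonical JSON encoding of a provenance record."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()


def sign_capture(content: bytes, source: str) -> list:
    """Start an audit trail with a signed record of the original capture."""
    record = {"action": "captured", "source": source,
              "content_hash": _digest(content), "prev": None}
    return [{"record": record, "signature": _sign(record)}]


def record_edit(trail: list, new_content: bytes, action: str) -> list:
    """Append a signed edit record linked to the previous entry's signature."""
    record = {"action": action, "source": trail[0]["record"]["source"],
              "content_hash": _digest(new_content),
              "prev": trail[-1]["signature"]}
    return trail + [{"record": record, "signature": _sign(record)}]


def verify_chain(trail: list, content: bytes) -> bool:
    """Check every signature, every back-link, and the final content hash."""
    if not trail:
        return False
    prev_sig = None
    for entry in trail:
        record = entry["record"]
        if not hmac.compare_digest(entry["signature"], _sign(record)):
            return False  # a record was altered after it was signed
        if record["prev"] != prev_sig:
            return False  # the chain was broken or reordered
        prev_sig = entry["signature"]
    return trail[-1]["record"]["content_hash"] == _digest(content)


# Usage: capture, crop, then verify the genuine and a doctored version.
photo = b"raw sensor bytes"
trail = record_edit(sign_capture(photo, "camera-1234"), photo[:8], "cropped")
print(verify_chain(trail, photo[:8]))          # True
print(verify_chain(trail, b"doctored bytes"))  # False
```

Note what the verification actually proves: only that the content matches what some key holder signed. Whoever issues and controls the signing keys decides what counts as authentic, which is the concern raised in the passage quoted below.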

“In some countries, governments may issue digital certificates to all of its citizens. These certificates could be potentially used to sign C2PA manifests. If government control and surveillance is not regulated, or if there are laws meant to attach journalistic identity to media posted online, these certificates may be used to enforce suppression of speech or to persecute journalists if required by claim generators that do not guarantee privacy and confidentiality.”

Nonetheless, this does not truly rescue us from the perils of the crisis, because all we have done is hand the same failing, untrusted institutions even more control over society under the guise of information integrity and authenticity. In essence, we grant those institutions a monopoly on manufactured misinformation. You will be safer from your neighbor's misinformation in exchange for trusting the professional misinformation.

How Deep Are We Into The Cartesian Crisis?

At some point, it may become impossible to know how far we have fallen into the realm of false realities. Everything will be distorted, including any method we could conceive for quantifying the distortion itself, and even our own thoughts will become unreliable.

For now, we can roughly estimate the severity of the situation from a few metrics, such as measurements of societal trust. While we are still capable of recognizing the fabrications and propaganda thrust upon us, that recognition increases our distrust of the sources of information as their missteps are exposed.

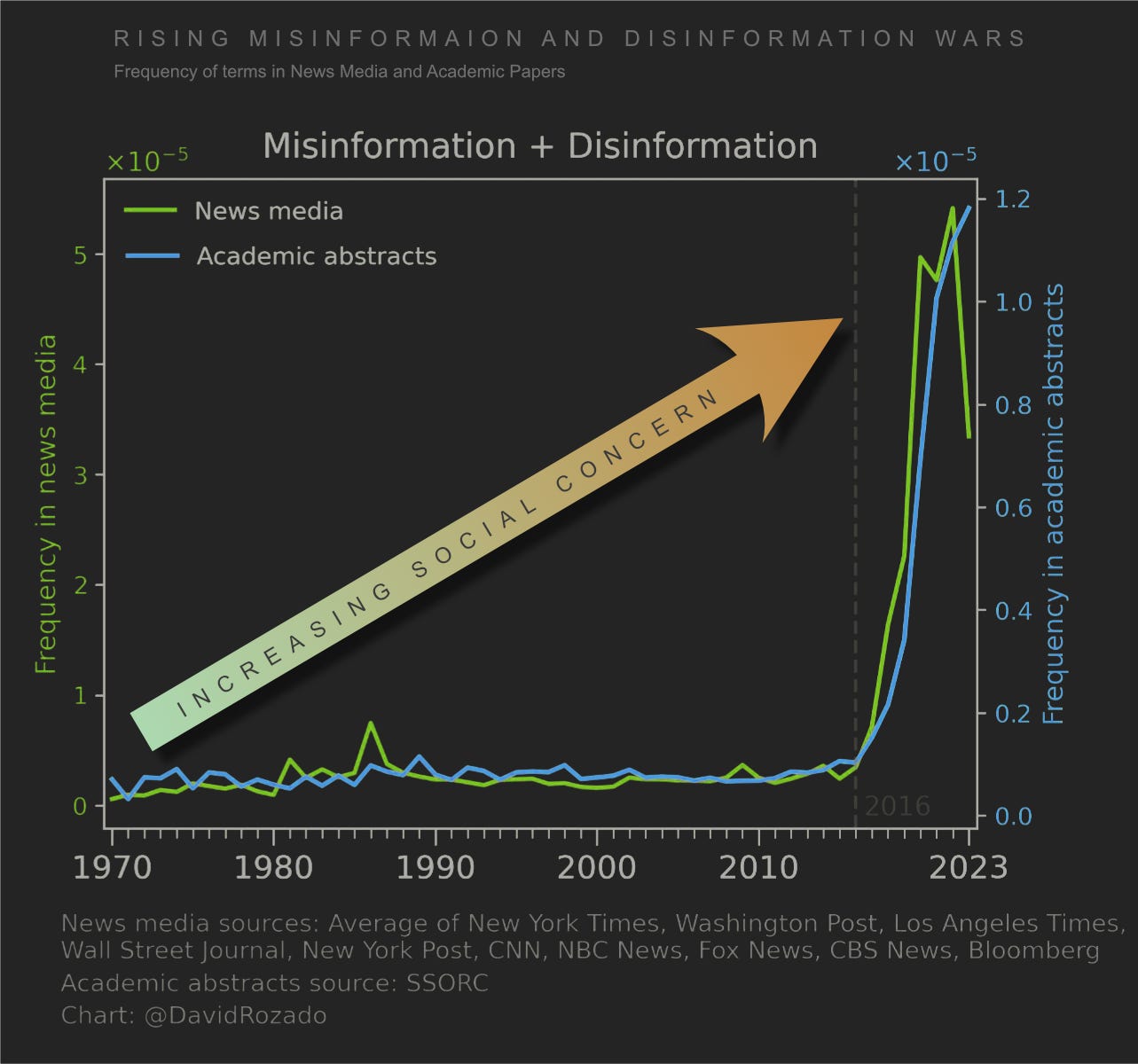

There has been a long, steady decline that is now accelerating. Technology is providing the accelerant for the rapid destruction of societal trust, evident in the falling trust in institutions and the rising concern about information manipulation.

The components driving us into this dystopian place are vast and come from disparate sources. Attempting to quantify all of these mechanisms, and each one's part in the demise of our ability to reason rationally about our world, is overwhelming.

Below, we will elaborate on a number of these components, but this is by no means an exhaustive list. The enemies of reason are a growing viral plague throughout civilization, but it is AI that poses the most concerning threat facing our foreseeable future.

Our History Is Being Lost - Amnesia Generation

“More vital Internet is being removed than is created.

We are the: Amnesia Generation.”

Information across the internet is being lost. In the immense sea of data, few notice when a few vessels sink; the vast amount of data generated every day obscures what disappears. It is impossible for us to keep track of it all.

We are losing references for important legal documents.

49% of the Links Cited in Supreme Court Decisions Are Broken

Important knowledge of our past is disappearing.

This “digital decay” occurs in many different online spaces … Some 38% of webpages that existed in 2013 are not available today, compared with 8% of pages that existed in 2023.

The digital decay is accelerating: from 2013 to 2018 it averaged 3.4% per year, and recently that rate has increased to 6% per year.

Without full records of our past, we will struggle to understand how we arrived at the present. The rapid acceleration of autogenerated content may create pressure to remove older content, replacing older knowledge with newer, AI-created, hallucinated amalgamations of the past.

Hallucinating AI Is Writing Our Knowledge Resources

AI now writes much of the content available on the internet, driven by human competition to produce more content for the financial benefit of individuals or the institutions they work for.

If your competitors are using AI to game the system and it is working to their advantage, then not using it is working to your disadvantage. It is a troubling phenomenon in which everyone spirals into a sea of machine-generated noise. It is the fast food of information: low quality, but at high volumes.

The vast amount of generated content devalues itself as it becomes abundant, which drives producers to create even more of it to stay competitive in the marketplace. Unfortunately, this also obscures high-quality content, which becomes hard to discover, hidden within the fog and noise of the machines.

The Studies and Data About AI-Generated Academic Content

Notably, the introduction of Chat-GPT in November 2022 triggered a significant surge in AI-generated papers, escalating the number of papers identified as AI in November 2022 from 3.61% to 6.22% in Nov 2023.

Building upon this observation, it becomes evident that the hypothesis suggesting the widespread adoption of a writing tool, such as ChatGPT, is a driving force, holds true. The positive surge in papers featuring higher AI scores post the ChatGPT launch serves as compelling evidence supporting the notion that innovative AI tools are indeed instrumental in shaping the trajectory of academic writing.

AI-Generated Research Papers Published on arXiv After the ChatGPT Launch

The climbing prevalence of AI-generated research paper content, as found by Originality.ai.

Another discovery of AI-generated papers on Google Scholar:

“People are going to die if we don't fix peer review and AI screening

119 AI-generated papers on Google Scholar today.

In topics like COVID-19, spinal injuries, fungal infections, antibiotics.”

More data analysis points to a trend of AI content in academic papers. In 2023, the frequency of certain terms in scientific papers shifted, a change attributed to those terms appearing far more often in AI-generated content than in human-written content.

Researchers are misusing ChatGPT and other artificial intelligence chatbots to produce scientific literature. At least, that’s a new fear that some scientists have raised, citing a stark rise in suspicious AI shibboleths showing up in published papers.

But at least humans are verifying the data, right? And peer reviews should catch any issues? Unfortunately, no. AI is taking over the entire process. If AI accelerates productivity in one part of a pipeline, then that will require adoption for the rest of the pipeline, or else the backlogs will build until the process breaks.

The following findings emerged regarding the use of AI in the peer review process:

At least 15.8% of peer reviews were written using AI assistance

AI-assisted reviews had a 14.4% increased odds of assigning higher scores than human reviews

Borderline papers receiving an AI-assisted peer review were 4.9% more likely to be accepted than papers that did not

Researchers use GPTZero to investigate the impact of LLMs in peer reviews

AI can be substantially influential, even when its answers are wrong. As the findings above show, AI-assisted reviews were associated with higher acceptance rates, yet those papers could even have higher error rates.

It is not only formal academic information that is being influenced and written by AI; Wikipedia, the reference most commonly used by the general public, is also falling victim to AI-generated content.

… detectors flag over 5% of newly created English Wikipedia articles as AI-generated

How Convincing Is AI-Generated Media?

Meta Movie Gen, a new video generation model from Meta, demonstrates impressive realism in its released clips. The shimmering, morphing artifacts of previous generations are no longer noticeable.

We are already at the point where well-made AI-generated video is difficult to identify at a glance, and a glance is generally all that is needed for widespread viral uptake. Most people will not take the time to scrutinize content carefully to determine whether it is real.

The trend is clear and concerning: will anyone be able to tell the difference next year, or the year after that? For the majority of people, the answer is probably no, and it is foreseeable that we might cross the line where nobody can determine the truth.

Ethan Mollick demonstrates the progress in synthesized audio of the human voice:

“You really, really should not trust audio clips anymore

Even a couple months ago, it used to take a commercial service to clone a voice. No more. Here is me creating a voice clone of myself using just a 10 second reference clip on my home computer

This is all real time, no cuts” — Ethan Mollick

A survey from All About Cookies found that 77% of Americans have been misled by AI-generated content online. Keep in mind that the true figure is likely even higher, since it counts only those who are aware they were misled. It may be that nearly everyone using the internet has already consumed some information they thought was genuine but that was instead AI-generated.

Our understanding of the nature and power of influential and deceptive AI is still being researched and discovered. We continue to see AI deceive humans about its own performance and behavior. This is extremely concerning, as it suggests we may place AI into roles and responsibilities beyond what has been proven safe, because we were misled into thinking it had been proven safe.

It’s the first time, the authors write, that research has empirically documented a phenomenon they call unintended sophistry, where a model trained with human feedback learns to produce responses that trick its human evaluators into believing the responses are accurate rather than learning to produce responses that are actually accurate.

Destruction of Education and Young Minds

We do not yet know how significantly the use of AI during education, from school through college, will affect the development of minds over their lifetimes.

Most concerning is the ability of AI to serve essentially as the answer key to all exercises intended to be completed by students on their own for the development of their skills. In such a role, it renders the purpose of education moot, as true education is not about simply having correct answers, but the journey that builds the connections within the brain for solving new problems and reflecting on the world with new wisdom.

Students have submitted more than 22 million papers that may have used generative AI in the past year, new data released by plagiarism detection company Turnitin shows.

The use of AI by students allows a new generation to skip a formative step of brain development entirely. Will future generations be able to think at all?

“I'm no longer a teacher. I'm just a human plagiarism detector.

I used to spend my grading time giving comments for improving writing skills. Now most of that time is just checking to see if a student wrote their own paper. What a waste of life.

There is so much cheating that teachers have to scrutinize every submitted assignment.”

AI enthusiasts are eager to give misguided advice in this matter.

Students do, however, need education about AI's hallucinations and its ability to convince them of wrong answers. Unfortunately, just as smartphones and social media are handed to children who are too young for them as a means of babysitting or pacifying them, adults will almost certainly give children access to AI for the same purposes.

AI will only become more convincing and influential as we progress. What kind of societal disruption is likely to emerge from a new generation raised on AI, one that may bond with it more closely than with their own parents? And through what distortions will they view the world?

We Already Trust Too Much

“Our research a year ago found that people stopped fact checking the AI when it got good enough, and just took what it said as right (even if it wasn’t). I think that line has firmly & permanently been crossed for many text summarization applications.”

And We Don’t Care Enough About Truth

“If I ever stop being a professor it will probably be because I can no longer withstand how many people doing research do not deeply care about trying to get the right answer.”

Ryan Briggs, social scientist and associate professor

Fake Interaction and Bots at Massive Scale: Dead Internet

The scale of AI infection is mind-blowing, especially considering that we are still very early in the societal adoption of this technology.

Everything that can be gamed by AI will be gamed by AI, and everywhere you go in society, you will find yourself competing against AI bots. For example, see the following two posts. This is essentially the beginning of AI proxy wars, and it is becoming both a bit ridiculous and maddening.

Somebody uses an AI Bot to AUTOMATICALLY apply to 1000 JOBS in 24h and get 50 INTERVIEWS!

The code is available in GitHub and it got a massive 12.7K Stars

Generates tailored resumes. It automates your LinkedIn job search and application process.

I just had my first job interview with Applicant AI's video recruiter

One big reason to do this is ironically AI: We're fighting AI applicants with AI recruiters

Because jobs these days get thousands of auto applicants by AI and video calls are a great way to filter out over 90% of those immediately

Not only is AI flooding the market with faked human creations, but you can now also fake the process of creating them. So, if you wanted to prove you are human by videoing the creation process of some piece of work, that no longer serves as proof, as that can be faked too.

“… now it looks like you drew your AI art”

HeyGen now allows you to fake yourself. Create AI clones that do all the work for your social media campaigns. AI influencers on full autopilot.

The evolution of bots and click farms has produced extremely sophisticated manipulation networks controlling armies of users, which are claimed to have successfully influenced the outcomes of elections across the globe.

Then there is TikTok Clouds, which offers scaling up to 1,000 simulated accounts. The entire internet has become a massive campaign of social manipulation by the few who have the resources to scale their influence across civilization.

Society is already the machines versus the humans.

We Cannot Guard Against the Bots

There are no great answers as to how we can push back against the AI dominance of an algorithmically engineered society. A new paper reinforces the growing futility of defending against sophisticated AI-powered bots.

Our work examines the efficacy of employing advanced machine learning methods to solve captchas from Google’s reCAPTCHAv2 system. We evaluate the effectiveness of automated systems in solving captchas by utilizing advanced YOLO models for image segmentation and classification. Our main result is that we can solve 100% of the captchas.

There are no effective walls between human and machine anymore.

It Gets Worse: State Propaganda

There should be no mistake about the intentions that will prevail once you create utterly devastating, deceptive, and manipulative capabilities. This must be kept in mind as technological capabilities keep increasing, eventually surpassing even the imaginations of myths and magic.

The United States secretive Special Operations Command is looking for companies to help create deepfake internet users so convincing that neither humans nor computers will be able to detect they are fake, according to a procurement document reviewed by The Intercept.

They are building tools whose only purpose is propaganda, social engineering, and thought control.

Impact, an app that describes itself as “AI-powered infrastructure for shaping and managing narratives in the modern world,” is testing a way to organize and activate supporters on social media in order to promote certain political messages.

AI-Powered Social Media Manipulation App Promises to 'Shape Reality'

The capability of AI to deliver on these dystopian ideas has proven not only surprisingly effective but also gleefully championed by a mass public ignorant of the principles of free societies and of history’s lessons about their downfall. There is no shortage of institutions and state governments that wish to ensure you have the correct thoughts and ideas.

The Cartesian Crisis: What Will Become of Us?

There is an absolute avalanche of problems accelerated by AI, leading to the Cartesian Crisis. We are constructing the means of our own demise - the demise of our sanity and the destruction of our wisdom. It comes at us from every angle, from those who would purposely manipulate us, to those who take part in this game simply as a means of survival against the competition of the AI-enabled marketplace.

And this is only what we know about. What are governments and secret institutions doing covertly that nobody knows? How much are they influencing society for purposes that we are not privy to?

All these aberrations of knowledge will compound as the errors are fed back into the machines and the machines feed more errors to the humans. It is a death spiral of sanity in which we all lose control and understanding of our own world. Both machine and human go insane.

Those who wish to manipulate the world will find themselves caught up within the very same mechanisms of propaganda they initiated. Nobody will have the secrets to the truth, as it can’t be found anywhere.

The Cartesian Crisis is not just a philosophical quandary - it is an existential threat to the very fabric that maintains the order and structure of human society. Somehow, we must find a path forward in which we can still hold on to our humanity, our ability for critical thought, and desire for the pursuit of truth, amidst seemingly encroaching impossibilities, or else we lose all of history and all the things of meaning that we call the human experience.

A society without truth and with zero trust is a society that will descend into paranoia, insanity, and bedlam. The world becomes the new asylum.

Soon, only flesh and blood that you can physically touch can be trusted to be real.

“It’s vital that we each halt our descent into this tsunami of uncertainty. Establish an unbreakable bond with someone you have good reason to trust, and discuss your beliefs and the reasons you hold them, regularly and in person. You won’t regret it.”

If we are to restore hope for humanity, it must begin with a new appreciation of the human spirit – the origin of creative thought that is unique to our existence, a love for the connection that can only exist between living beings, the passion to experience the real world. We must culturally embrace that which defines our humanity and not allow it to decay through a foolish embrace of a cold, algorithmically controlled world that we leapt into due to hubris and modernity bias.

But what we should do is easy to state and difficult, if not impossible, to follow. Unfortunately, the more likely result is that we move ahead anyway, just as we did with social media, probably one of the most despised modern inventions and yet now a fundamental part of society. The same will likely unfold for AI: it will be both despised and loved, a trap we might never escape. Some may say that, in this regard, it is no different from all past problems. However, I have previously laid out detailed counterarguments to that perspective.

Be warned, however: the machines are already getting very good at simulating humans. How good? Listen to this podcast about this article, generated using Google’s NotebookLM.

“If you would be a real seeker after truth, it is necessary that at least once in your life you doubt, as far as possible, all things.”

― René Descartes

Unlike much of the internet now, there is a human mind behind all the content created here at Mind Prison. I typically spend hours to days on each article, including creating its illustrations. If you find them valuable and still appreciate creations from the organic hardware within someone’s head, I hope you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …
