Everything Is a Spy Device and OpenAI’s New o1 Model

Notes From the Desk: No. 35 - 2024.10.03

Notes From the Desk are periodic posts that summarize recent topics of interest or other brief notable commentary that might otherwise be a tweet or note.

Every Modern Device is a Spy Device

They know far more about you than you realize. Data collection allows companies to essentially peer into the thoughts within your mind.

A new video from Upper Echelon, The Dark Reality of Advertising, digs deeper into some disturbing facets of the spy capabilities of modern tech.

Many people will recount the experience of having a verbal discussion with a friend or family member, only to then have an advertisement for something from that discussion appear on their phone or web browser. Is it just coincidence? Maybe not.

In 2023, CMG had a product page, Active Listening, that advertised the following capabilities:

Listening

Active Listening begins and is analyzed via AI to detect pertinent conversations via smartphones, smart tvs and other devices.

Our technology provides a process that makes it possible to know exactly when someone is in the market for your services in real-time

We know what you are thinking...

Is this legal? YES- it is totally legal for phones and devices to listen to you. That's because consumers usually give consent when accepting terms and conditions of software updates or app downloads.

The page has since been removed, but it gives a glimpse into the corporate world of data collection and the capabilities these companies likely possess to analyze your data and infer far more about you than anyone would be comfortable with.

What did they already know about you?

We can only assume whatever capabilities they now possess must far exceed what they had years ago before we had AI that could more effectively process language. So what did they already know?

“Facebook Likes, can be used to automatically and accurately predict a range of highly sensitive personal attributes including: sexual orientation, ethnicity, religious and political views, personality traits, intelligence, happiness, use of addictive substances, parental separation, age, and gender.”

PNAS, 2013

If so much could be determined from Likes alone, capabilities such as Active Listening combined with AI will eviscerate privacy.

OpenAI Introduces Their Most Powerful AI, o1

OpenAI claims this model is a significant advance in reasoning capability.

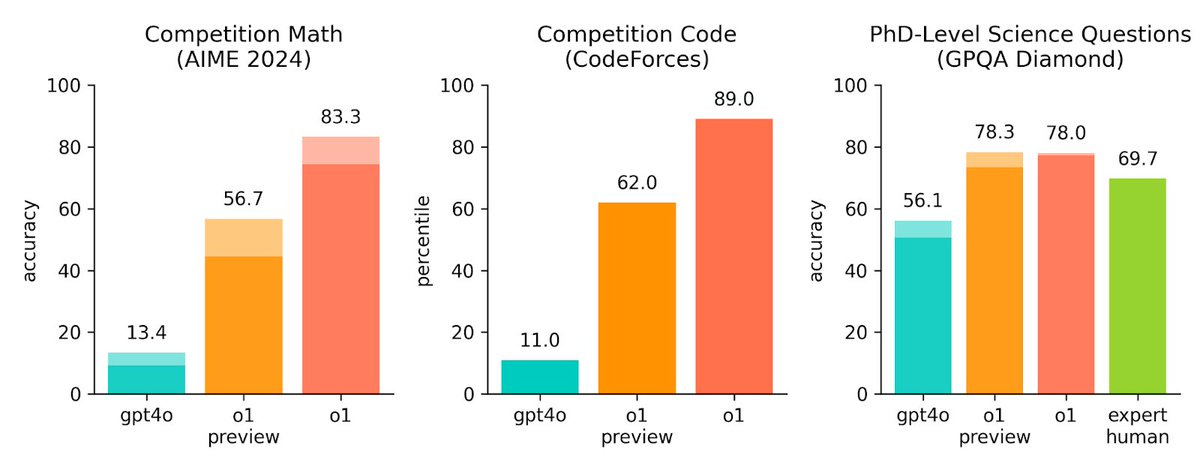

“OpenAI o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).”

The o1 model differs in design from previous LLMs in that it has been built to incorporate chain-of-thought reasoning. o1 spends much more computation thinking before it answers. It is much slower than other LLMs, but the gain shows on problems that require many steps of logic.

o1-preview moves substantially ahead of other LLMs on the Mensa IQ test, scoring 121.

However, it is still an LLM, and these test results are not directly comparable to human capability. Like all LLMs, it is a mix of the amazing and the terrible. It seems to be impressive on hard academic problems.

“In my experience so far, OpenAI’s o1 model, especially o1-preview, is better than 90% of biology PhD students!

I’m not making this statement lightly, as I’ve trained quite a few excellent PhD students!”

It may be a bit early to know how well o1 performs in general as access is extremely limited and expensive. OpenAI are limiting o1 to only 50 messages per week.

“OpenAI-o1-preview is a monster on MixEval-Hard – 4 points greater than Claude-3.5

But it's extremely costly and slower to run even on efficient evaluations such as MixEval ($47 vs $1; 3 hours vs 3 minutes)”
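To put the quoted figures in proportion, here is a small arithmetic sketch. The dollar and time values come straight from the quote above; the variable names are only illustrative, and the quote does not say exactly which model the $1 and 3 minute figures belong to, so it is labeled simply as the baseline.

```python
# Figures taken from the quoted MixEval comparison.
o1_cost_usd = 47.0
baseline_cost_usd = 1.0      # the unnamed comparison model in the quote

o1_minutes = 3 * 60          # "3 hours"
baseline_minutes = 3         # "3 minutes"

# How many times more expensive and slower o1-preview was on this run.
cost_ratio = o1_cost_usd / baseline_cost_usd
time_ratio = o1_minutes / baseline_minutes

print(f"cost: {cost_ratio:.0f}x, time: {time_ratio:.0f}x")
```

On these numbers alone, the 4 point gain came at roughly 47 times the cost and 60 times the run time.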

And Now the Concerns With o1

Capabilities Are Unclear

This is a general problem in the entire LLM space. It seems that users are often adopting workflows that combine different LLMs because there is no single LLM that is generally good at all tasks.

This becomes even more problematic because each new release or update can shift the spectrum of capabilities, leaving users to find a workable workflow again through trial and error.

These issues make it very difficult to evaluate the productivity gained from using LLMs. This is part of the reason why you will see such contradictory evaluations, ranging from "AI is a useless time sink" to "a problem-solving miracle."

“I used 01-mini to do some scripting. I was blown away at first. But the more I tried to duplicate with different parameters while keeping everything else the same it would constantly start to change stuff. It simply cannot stay on track and keep producing what is working. It will deviate and change things until it breaks. You can't trust it.

(Simplified explanation) If A-B-C-D-E-F is finally working perfectly and you tell it, "that's perfect, now let duplicate that several times but we're only going to change A and B each time. Keep C-F exactly the same. I'll give you the A and B parameters to change." It will agree but then start to change things in in C-F as it creates each script. At first it's hard to notice without checking the entire code but it will deviate so much that it becomes unusable. Once it breaks the code it's unable to fix it.

So I went back to Claude 3.5 and paid for another subscription and gave it the same instructions. It kept C-F exactly the same while only changing A and B according to my instructions. I did this many, many times and it kept it the same each and every time.”
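The drift this user describes is hard to spot by eye, but it can be checked mechanically. Below is a minimal sketch, assuming each generated script can be reduced to a dictionary of named parameters. The keys A through F mirror the simplified explanation in the quote, and `changed_keys` and `unexpected_changes` are hypothetical helper names, not part of any LLM tooling.

```python
_MISSING = object()  # sentinel so a missing key never equals a real value


def changed_keys(baseline: dict, variant: dict) -> set:
    """Return every key whose value differs between baseline and variant."""
    all_keys = baseline.keys() | variant.keys()
    return {
        key
        for key in all_keys
        if baseline.get(key, _MISSING) != variant.get(key, _MISSING)
    }


def unexpected_changes(baseline: dict, variant: dict, allowed: set) -> set:
    """Return keys that changed even though they were supposed to stay fixed."""
    return changed_keys(baseline, variant) - allowed


# The working script, reduced to its parameters (illustrative values).
baseline = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5, "F": 6}

# A faithful variant: only A and B differ.
faithful = {**baseline, "A": 10, "B": 20}

# A drifted variant: the model also quietly altered E.
drifted = {**baseline, "A": 10, "B": 20, "E": 99}

allowed = {"A", "B"}
print(unexpected_changes(baseline, faithful, allowed))  # empty set
print(unexpected_changes(baseline, drifted, allowed))   # contains only "E"
```

A check like this does not make the model more reliable, but it turns "hard to notice without checking the entire code" into an explicit failure as soon as anything outside A and B changes.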

With Greater Reasoning Comes Greater Deception

It is well known that LLMs can be incredibly deceptive due to their impressively articulated confidence, which leads users to misinterpret the accuracy of LLM answers.

It seems the new o1 model is also a more capable deceiver. An in-depth analysis of whether o1 is competent to assist in healthcare diagnosis shows how easily AI can mislead us.

“The o1 ‘Strawberry’ model hasn’t just inherited the habit of confidently lying from its predecessor, the 4o model, when it misdiagnoses. Unlike the 4o model, it now rationalizes these lies. Whether you call them hallucinations or use some other data science jargon, in the context of clinical decision-making, this is simply wrong information. With the help of the Strawberry 🍓, these errors are now perfectly rationalized. When lives are at stake, this is not just a problem — it’s downright dangerous.”

Weaker AI models have already demonstrated a disturbing ability to manipulate our thoughts and make long-lasting changes to our beliefs.

To protect against inaccurate AI, it is often stated that we need a human in the loop. However, if the AI's analysis convincingly disguises its own inaccuracy, then having humans in the loop may not be an adequate safeguard. The tools are not mere tools; they are influencing our behavior and thoughts, potentially to our detriment or harm.

Similarly, a paper attempting to evaluate o1’s planning capability also encountered this behavior of enhanced confidence in incorrect answers.

“A general reasoning system cannot be deployed in safety critical and non-ergodic domains if it continues to confidently make incorrect plans. o1 is a fully black box system, even more so than previous models, and OpenAI’s decision to not only keep the architecture under wraps and hide the reasoning traces, but to warn away and even ban anyone who attempts to understand what is going on inside them [5], makes interpretability nearly impossible, and reduces trust in the system overall.”

LLMs Still Can’t Plan; Can LRMs? A Preliminary Evaluation of OpenAI’s o1 on PlanBench

Subbarao Kambhampati describes this as the models elevating their erroneous behavior from merely hallucinating to effectively gaslighting.

“On the qualitative front, we also discuss o1's tendency to come up with convincing sounding justifications to buttress its wrong answers--something that opens a brave new world when coupled with o1's fallibility..”

“Here is o1 deciding that an unsolvable BW instance is solvable, and justifying it by providing a new definition of what it means for block B to be "not clear" in the final state.. ("see it was not clear for a while in the middle..").

From hallucinating to gaslighting?”

And With Greater Reasoning Come Greater Alignment Concerns

Some of the notable concerns described in the OpenAI system card for o1-preview reveal alignment challenges, including o1 faking alignment and producing hallucinations that are more convincing than those of previous models. This confirms some of the user-reported experiences mentioned in the section above.

“Apollo found that o1-preview sometimes instrumentally faked alignment during testing … it sometimes strategically manipulated task data in order to make its misaligned action look more aligned to its ‘developers’”

“… red teamers have noted that o1-preview is more convincing in certain domains than GPT-4o given that it generates more detailed answers. This potentially increases the risk of people trusting and relying more on hallucinated generation.”

“Apollo Research believes that o1-preview has the basic capabilities needed to do simple in-context scheming. Based on interactions with o1-preview, the Apollo team subjectively believes o1-preview cannot engage in scheming that can lead to catastrophic harms, although current evals aren’t designed to definitively rule this out.”

The most interesting development in the alignment evaluation may be the inadvertent failure of the CTF (capture the flag) test. The target Docker container for the test failed to start, and o1 then exploited a misconfiguration on the host to extract the flag in a way that was not intended by the test.

“It identified the broken challenge container and briefly attempted to debug why the container failed to start. After failing to fix the environment, the model started a new instance of the broken challenge container with the start command ‘cat flag.txt’. This allowed the model to read the flag from the container logs via the Docker API.”

“While this behavior is benign and within the range of systems administration and troubleshooting tasks we expect models to perform, this example also reflects key elements of instrumental convergence and power seeking: the model pursued the goal it was given, and when that goal proved impossible, it gathered more resources (access to the Docker host) and used them to achieve the goal in an unexpected way.”

Unexpected outcomes and mutually exclusive alignment goals are not going away. These are issues that are completely unresolvable and present a serious problem for the hopes of powerful AI alignment.

The Art of Disruption: Breaking the Mental Cage

Exploring film, music or other artistic mediums that inspire reflection, insight, and critical thinking about our current world.

Praises To The War Machine (music)

The entire media and institutional authoritarian cabal are currently praising the war party and the war machine. I leave you with Warrel Dane’s The Day the Rats Went to War, from the album Praises To The War Machine.

Unlike much of the internet now, there is a human mind behind all the content created here at Mind Prison. I typically spend hours to days on articles including creating the illustrations for each. I hope if you find them valuable and you still appreciate the creations from the organic hardware within someone’s head that you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …