AI Discovers Novel Cancer Drug, or Did It?

Notes From the Desk: No. 51 - 2025.10.25

Notes From the Desk are periodic informal posts that summarize recent topics of interest or other brief notable commentary.

Did AI Make a Novel Cancer Drug Discovery?

Google published this announcement, “How a Gemma model helped discover a new potential cancer therapy pathway”, which has led to statements such as “This is AI generating novel science. The moment has finally arrived.”

Is this a genuine discovery by AI, or will the fine print nullify the significance?

This discovery is not, as some headlines might imply, a case of an AI being prompted to "find a novel cancer therapy" and then thinking, reasoning, and performing research on its own to arrive at the answer.

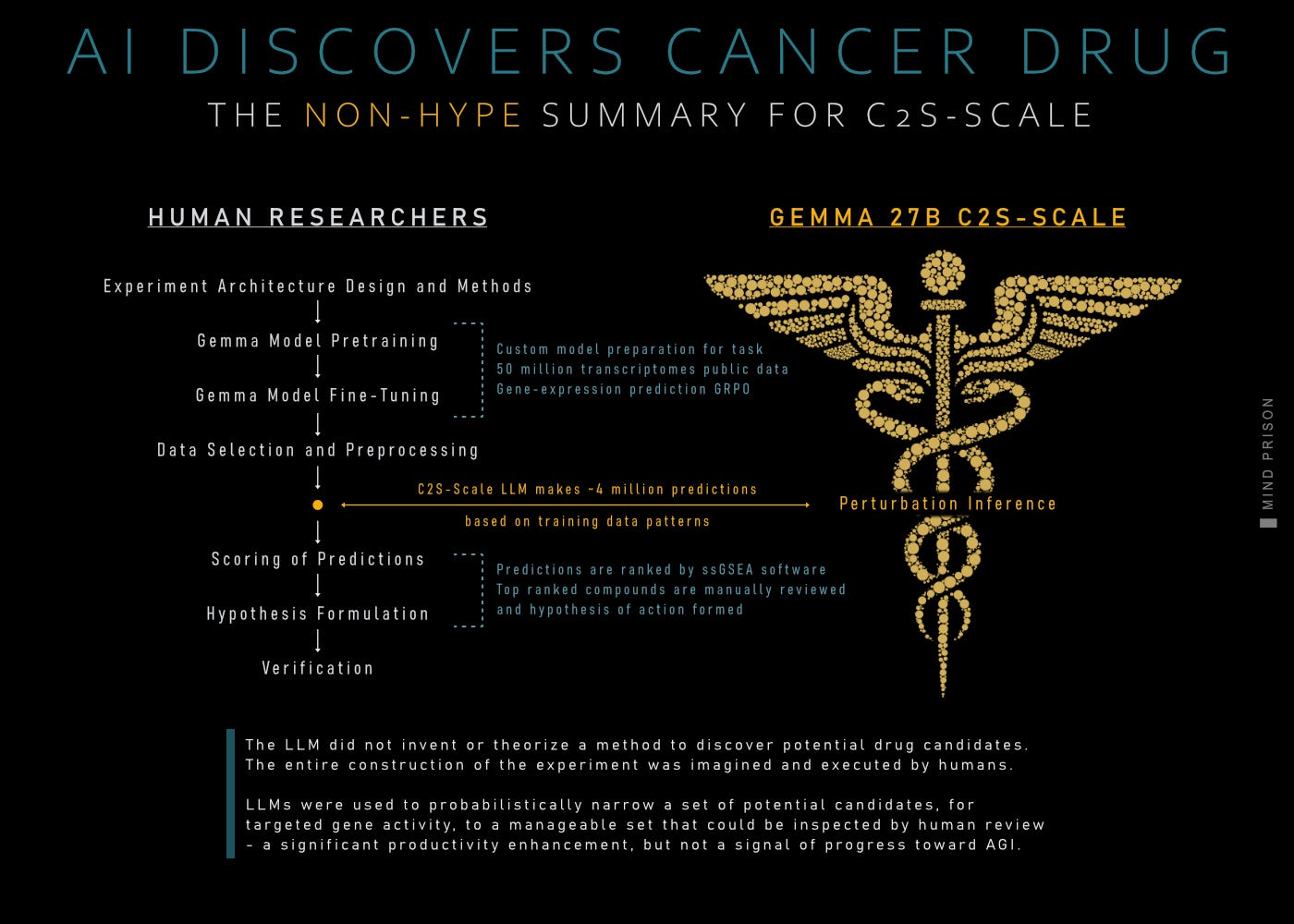

Instead, it is the output of a complex pipeline that made use of LLMs. Let's list the high-level steps, as described in the paper, so that we can read the fine print ourselves. The detailed steps can be found in Section 4 of the paper.

Each step heading indicates who performed the step: humans, an LLM, conventional software, or a combination.

1 - Experiment Architecture, Design, and Methods (Human)

First and foremost, the entire experiment, including its setup, data, process, and execution, was planned by humans. LLMs played no part in planning or designing how a cancer drug might be found.

Humans supplied the architecture, the data, and the proposed targets, and they defined which type of biological action to screen for based on what humans had identified as important. All of this was done by human intelligence.

2 - Gemma Model Pretraining (Human)

A 27B Gemma base model was trained on 50 million human and mouse transcriptomes drawn from public data atlases, along with related papers and other information. The transcriptomes are represented as text in the form of "cell sentences": a cell's genes listed in order of their expression level.
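To make the "cell sentence" idea concrete, here is a minimal sketch of turning one cell's expression values into a ranked list of gene names. It assumes a simple dictionary of gene name to expression value, drops unexpressed genes, and keeps only the top few; the function name `to_cell_sentence`, the `top_k` cutoff, and the example values are illustrative assumptions, not the paper's actual preprocessing, which may normalize and truncate differently.

```python
from typing import Dict


def to_cell_sentence(expression: Dict[str, float], top_k: int = 5) -> str:
    """Hypothetical helper: render a cell as genes ranked by expression."""
    # Keep only genes with nonzero expression (an assumption for this sketch).
    expressed = [(gene, value) for gene, value in expression.items() if value > 0]
    # Order genes from highest to lowest expression.
    expressed.sort(key=lambda item: item[1], reverse=True)
    # The "sentence" is simply the gene names in rank order.
    return " ".join(gene for gene, _ in expressed[:top_k])


# Made-up expression values for a single cell, for illustration only.
cell = {"CD3E": 5.0, "GAPDH": 12.0, "MS4A1": 0.0, "ACTB": 9.5}
print(to_cell_sentence(cell))
```

Because the model only ever sees gene names in rank order, the text encodes relative expression rather than absolute values.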

3 - Gemma Model Fine-Tuning (Human)

Supervised fine-tuning was used to train the model to predict gene-expression profiles. GRPO (Group Relative Policy Optimization) was then used to optimize its predictions of key gene programs. This specially trained model is designated C2S-Scale.
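The "group relative" part of GRPO is the step that scores each sampled answer against the other answers generated for the same prompt. The sketch below shows only that advantage calculation, assuming a list of scalar rewards for one group; the reward actually used for C2S-Scale is not shown here, the example rewards are made up, and a full GRPO update also involves a clipped policy-ratio objective and a KL penalty that are omitted.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against the mean and spread of its own group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    # eps avoids division by zero when every sample got the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled predictions to the same prompt.
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Answers that beat their group average are pushed up and the rest are pushed down, so no separate value model is needed to judge them.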

4 - Dataset Selection and Preprocessing (LLM + Human)

The drugs to test were drawn from the L1000 resource, which contains over 30,000 compounds. This set was filtered by using OpenAI's o3 model to predict whether each compound was commercially available. This step produced a library of 4,266 drugs to be used in the perturbation test.

5 - Perturbation Inference (LLM + Human)

Humans prompt the LLM to make the candidate predictions. The LLM produced 886 predictions per drug (486 in a positive context and 400 in a neutral context), totaling about 4 million predictions across the 4,266 drugs.
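As a quick check of the totals, using only the figures quoted in this step:

```latex
\begin{aligned}
486 + 400 &= 886 \quad \text{predictions per drug} \\
886 \times 4{,}266 &= 3{,}779{,}676 \approx 3.8 \times 10^{6} \quad \text{predictions in total}
\end{aligned}
```

This is consistent with the rounded figure of about 4 million predictions.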

This step produces a list of candidate drug interactions. All of these are probabilistic results derived from the specialized training and data. The LLM is not producing results from an understanding of the science or research, but from statistical associations it learned from the data.

6 - Scoring of Predictions (Machine Analysis)

The output of the LLM perturbation inference is scored by a conventional algorithm, ssGSEA (single-sample gene set enrichment analysis), to produce a ranking of the results. This is necessary because the LLM cannot itself determine which candidates have the highest biological activity.
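For readers unfamiliar with ssGSEA, the idea is a "random walk" down one sample's ranked gene list: the score rises when a gene from the set of interest is encountered and falls otherwise, so sets concentrated near the top score high. Below is a simplified sketch of that per-sample enrichment score, assuming a dictionary of gene expression values, rank-based weights raised to `alpha = 0.25` (a commonly used default), no tie handling, and no cross-sample normalization. The function name and toy data are hypothetical; this is not the paper's scoring code.

```python
from typing import Dict, Set


def ssgsea_score(expression: Dict[str, float], gene_set: Set[str], alpha: float = 0.25) -> float:
    """Simplified single-sample enrichment score (ties and normalization ignored)."""
    # Rank genes from highest to lowest expression; top gene gets weight base n, bottom gets 1.
    ordered = sorted(expression, key=expression.get, reverse=True)
    n = len(ordered)
    rank_value = {gene: n - i for i, gene in enumerate(ordered)}
    in_set = [gene in gene_set for gene in ordered]
    n_in = sum(in_set)
    n_out = n - n_in
    if n_in == 0 or n_out == 0:
        raise ValueError("gene set must contain some, but not all, of the genes")
    # Total weight of in-set genes, used to turn the walk into a cumulative distribution.
    total_weight = sum(rank_value[g] ** alpha for g, inside in zip(ordered, in_set) if inside)
    cdf_in = cdf_out = 0.0
    score = 0.0
    for gene, inside in zip(ordered, in_set):
        if inside:
            cdf_in += rank_value[gene] ** alpha / total_weight
        else:
            cdf_out += 1.0 / n_out
        # Sum the gap between the two running distributions at every position.
        score += cdf_in - cdf_out
    return score


# Toy sample: genes A and B are highly expressed, C and D are not.
sample = {"A": 10.0, "B": 9.0, "C": 1.0, "D": 0.5}
print(ssgsea_score(sample, {"A", "B"}))  # positive: set sits at the top
print(ssgsea_score(sample, {"C", "D"}))  # negative: set sits at the bottom
```

In a screen like this one, a score of this kind would be computed for each predicted profile so that candidates can be sorted, which is exactly the ranking the LLM does not supply on its own.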

7 - Candidate Selection (Human)

Humans then manually inspect the top-ranked results for novelty, looking for results that aren’t already known.

8 - Hypothesis Formulation (Human)

Humans hypothesize the mechanism of biological action, based on the known characteristics of the candidate and their own biological understanding.

9 - Verification of Potential (Human)

In the lab, in vitro cell testing is performed to verify the candidate's biological action. Note that, for any drug discovery, lab tests are still very early, preliminary results. Thousands of potential discoveries never become useful in practice, because they either do not act the same way within the body or have other undesirable effects.

AI or Human Discovery?

It is important to note that the LLM did not invent or theorize a method to discover potential drug candidates. The entire construction of the experiment was imagined and executed by humans.

LLMs were used to probabilistically narrow a large set of potential candidates to a manageable set that humans could review. This is a substantial productivity enhancement for this type of task. However, it is not an accomplishment of thinking machines, and it does not advance AI in any way toward something we would call AGI. Humans possess reasoning capabilities that are still not present in any current AI system.

Nonetheless, this is a far better use of AI: training on datasets intended for research and performing narrow, well-defined tasks, rather than filling the internet with hallucinated noise, as much of the current public use of AI does, to the suffering of us all.

Mind Prison is an oasis for human thought, attempting to survive amidst the dead internet. I typically spend hours to days on articles, including creating the illustrations for each. I hope if you find them valuable and you still appreciate the creations from human beings, you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …
