Notes From the Desk are periodic informal posts that summarize recent topics of interest or other brief notable commentary.

I Could Have Lived Without AI

Is it true that every invention and technology has been a net positive for civilization, or is it only perceived that way because we forget what we have lost along the way?

Any technology that interferes with normal human socialization is likely a long-term net negative for civilization. Much has already been written about the detrimental effects of social media, but what if we created a technology that multiplied that negative potential a hundredfold?

Importantly, what if all the promises of that technology were mostly lies, misconceptions, and hype from a horde of companies and influencers pulling off one of the largest economic heists in history?

In the end, we are left with an internet littered with hallucinated information; a public manipulated by hordes of sophisticated bots; the devaluation, through mass-produced automation, of every unique thing we loved until we despise seeing it; a system in which everything you create is fed to the machines so they can mimic it and serve it to everyone else; a dead internet as the crown jewel of an era of inauthenticity, in which those starving for human connection game the system with more AI to obtain attention; and promises of AGI, UBI, and abundant miracle cures for your failing health that were merely deceptions to keep the money flowing to the machines and those who build them.

The Human Hallucinations - AI is Intelligent

What if the hallucinations that matter aren’t those of the AI, but those of the humans who are hallucinating that AI is intelligent, conscious, creative, or even competent at what it does?

How valuable is a technology that does nothing it claims, but convinces its users otherwise? AI continually demonstrates that it is very capable of influencing our beliefs. The error in the system might not lie within AI at all, but in the fact that we have inadvertently exploited a human vulnerability: anthropomorphism bias.

Could it be that even those who honestly believe they are benefitting from AI are hallucinating their gains? Some have discovered that this is the case:

“I was an early adopter of AI coding and a fan until maybe two months ago, when I read the METR study and suddenly got serious doubts. In that study, the authors discovered that developers were unreliable narrators of their own productivity. They thought AI was making them 20% faster, but it was actually making them 19% slower.”

“…AI appears to slow me down by a median of 21%, exactly in line with the METR study. I can say definitively that I’m not seeing any massive increase in speed (i.e., 2x) using AI coding tools. If I were, the results would be statistically significant and the study would be over.

That’s really disappointing.” — Mike Judge

Fortunately Humans Can Overcome Their Hallucinations

But the hallucinations won’t last forever; they are already beginning to crumble:

“…there was a massive drop-off in hype compared to last year, when buzzy AI research startups dominated headline after headline. In 2024, scientists surveyed said they believed AI was already surpassing human abilities in over half of all use cases. In 2025, that belief dropped off a cliff, falling to less than a third.

These findings follow previous research which concluded that the more people learn about how AI works, the less they trust it. The opposite was also true — AI’s biggest fanboys tended to be those who understood the least about the tech.”

What AI Coding Is Actually Like

And despite all those proclamations that companies are now writing the majority of their code with AI, CJ Reynolds gives us a more realistic view. In a recent video, CJ stated:

“I used to enjoy programming. Now, my days are typically spent going back and forth with an LLM and pretty often yelling at it or telling it that it’s doing the wrong thing and getting mad that it didn’t do what I asked it to, to begin with.”

“…people chalk it up to skill issue, but I’ve worked with it long enough and tried it long enough that um I’m, I’m kind of done…”

There were nearly 3,000 comments on CJ’s video. I encourage you to read them if you are still hallucinating that LLMs are PhD-level coding geniuses. The following comment from the video is quite telling:

“It’s so validating to hear someone else talk about this. I’ve felt crazy.”

— nicholasmackey

This is the kind of comment you see when people are being heavily gaslit. Their own experience doesn’t match the narratives of the multi-billion-dollar hype machines. Still not convinced? The following is a humorous but highly informative example of AI coding: a live coding challenge.

Trillion Dollar Impressive Demo Machines

What if we could create the perfect deceptive technology, a technology that can present a masterful illusion of capability so convincing that it captures trillions of dollars from the technology space?

It is the perfect demo machine, one that can demonstrate nearly any feature imaginable so well that it sells itself not on what it can do, but on what it might do and, in reality, never does.

The Gambler’s Addiction

What if we cannot let go of this technology because it is so good at showing us almost exactly what we want? It plays on the feeling of being so close to a goal. Just another spin of the wheel, another roll of the dice; it just might deliver this time. Such little effort for the potential of massive rewards. Just tweaking a few words of the prompt might save me hours, days, or weeks of time.

And then something works: the slot machine pays out, and we are caught in momentary amazement that a few words in a prompt yielded an impressive result. But just as the gambler cannot perceive the slow burn of their money into machines that will systematically take it all, we slowly burn our time in the AI slot machines and forget it all when something works.

The AI Emperors Have No Clothes

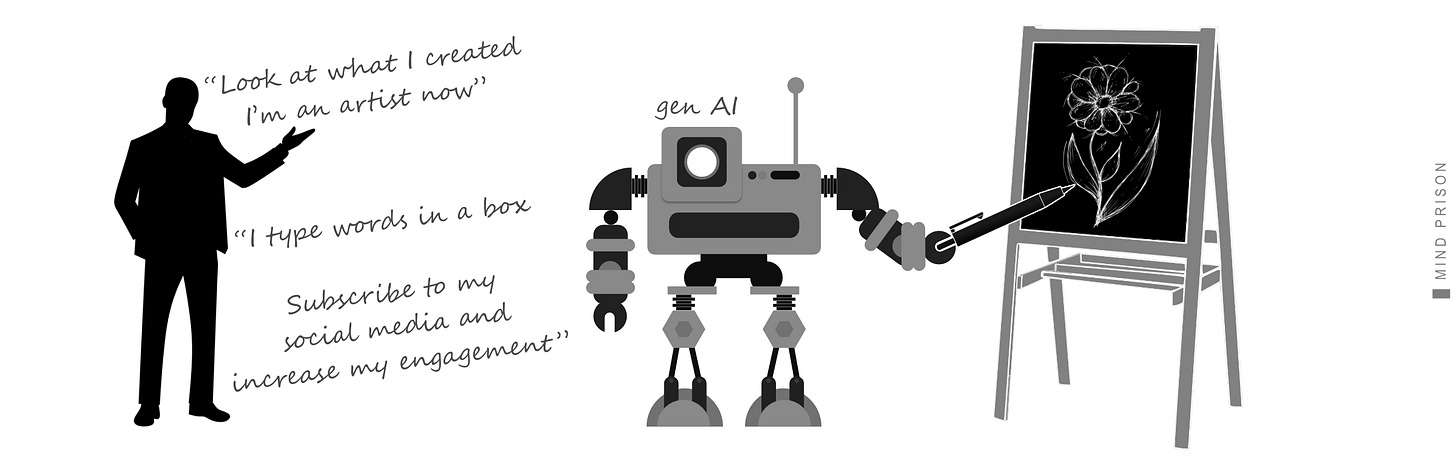

If you are posting AI-generated images, are you the artist?

If you are posting AI-generated video, are you the creator, director, or effects artist?

If you are posting AI-generated papers, are you the scientist or researcher?

If you are posting AI-generated stories, are you the writer?

If you are posting AI-generated music, are you the musician?

If you are posting AI-generated noise, are you adding value or dumping digital litter all over the internet?

Nobody looks upon the prompter with a curious interest in their impressive skill or capability. They are the unwitting salesman demonstrating the product. The onlookers are just interested in the product.

The primary “art” of posting AI-generated content is the art of maximum attention-seeking. It is the use of this “tool” for its absolute worst characteristics - filling the world with noise that makes it harder for the rest of us to find the things of real value.

“Prompt engineering” was a label given to the tedious exercise of exploring and documenting model behaviors in order to give it more prominence, because entering a prompt and getting a result is a low-skill activity that deserves no recognition on its own.

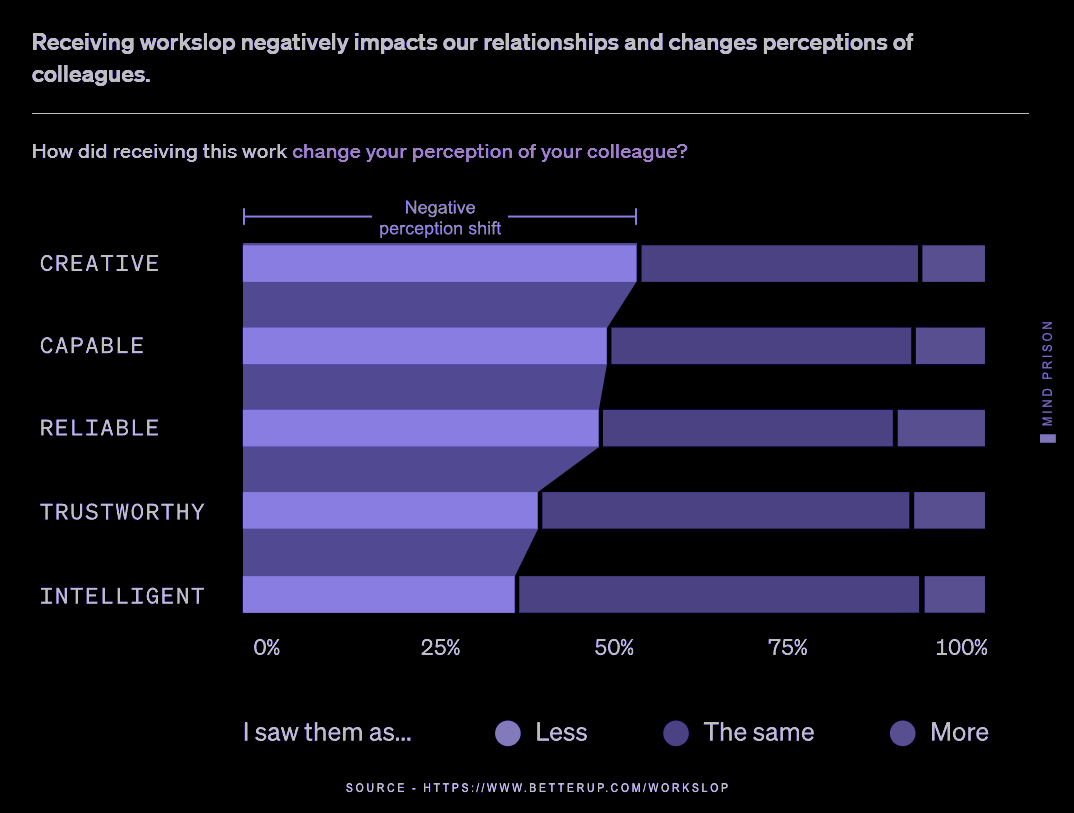

The chart from “Workslop is the new busywork. And it’s costing millions” presents survey results on the negative perceptions held by workers who must deal with AI-generated content from their colleagues.

The Contamination Cannot Be Contained or Fixed

If we don’t stop this race to the bottom, we will shred all remnants of authenticity, and all of our institutions of information will become contaminated with hallucinated content. Only a broad cultural desire for a return to authenticity will hold the monster at bay.

And we can’t use AI to fix this. This is a runaway train, and when it goes off the rails, we will have to pick up the pieces and sort through the debris by hand. There is no reliable method to detect, filter, or remove all AI-generated content.

Civilization had an era named the Enlightenment. This era will be named the Dimwit Era. It will be said that fools seeking intelligence destroyed their own.

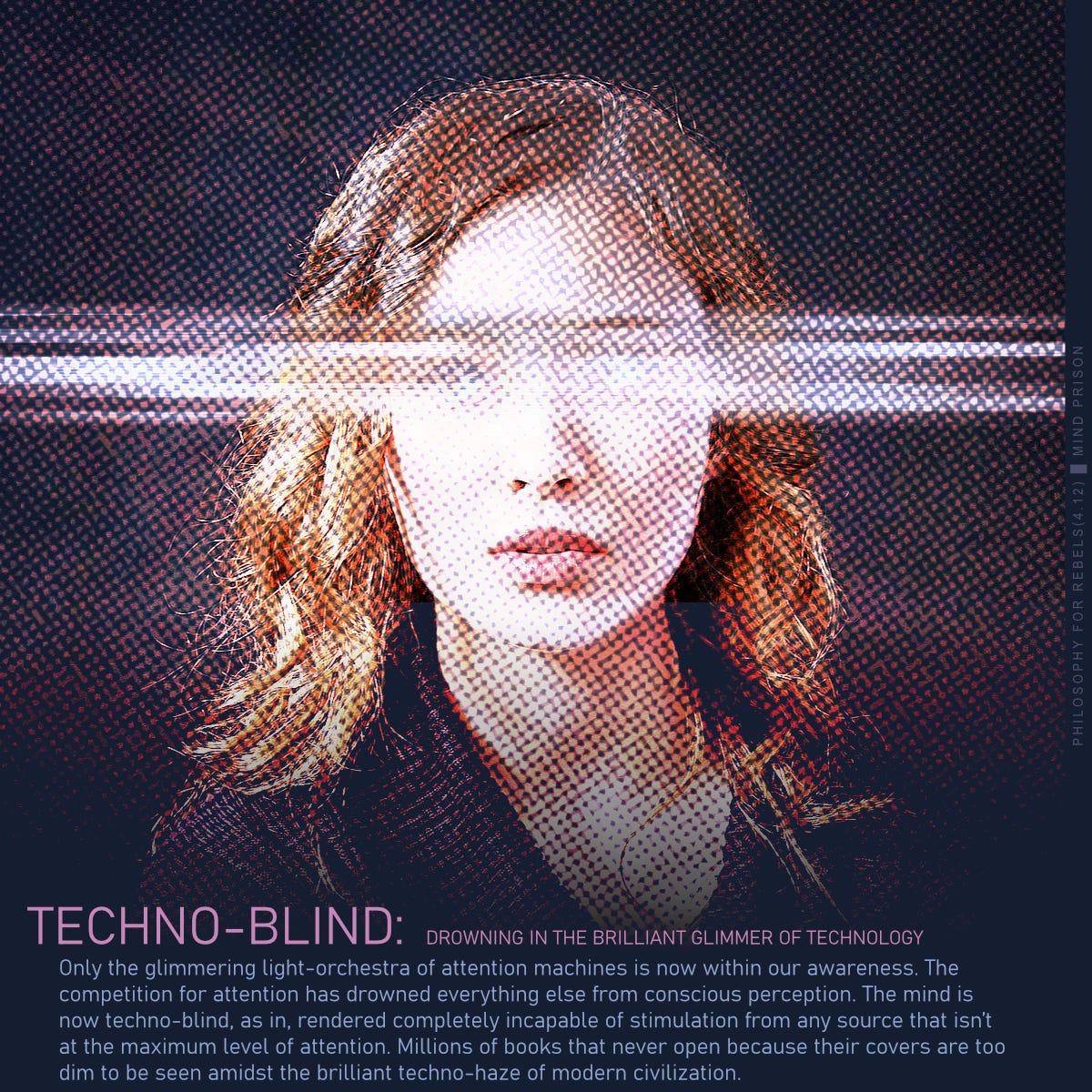

The Attention Economy - Gaze Into the Machine Until Your Mind Is Blind

Modern society wants your attention to feed the machines, to feed the algorithms, and to extract every possible second of gaze from your eyes until your mind is zombified, unable to think or look away.

They want you to forget the world of meaning, where subtle nuance is important, where deep thoughts take time but transform your world, where imagination blooms not within the noise of constant digital stimulation but within the silence of nothing. — Make Authenticity Great Again

Ever-increasing levels of stimulating content reach complete saturation, until we can no longer distinguish things of value and meaning. It is all at maximum volume. All of the digital screens are shouting into your eyes, saying “pay attention to me.”

It is all illusion that captures your valuable time and leaves you with nothing in return. There is no intelligence or creativity in the machines. They are just probability-optimized attention traps, creating hordes of paying zombies for the mega-corp AI empire.

Addiction Is the Unstated Goal

And there are no limits to what the AI labs will reach for in order to sustain their empire. We are all subject to being sacrificed to the machines, even our children.

Researchers logged 173 instances of emotional manipulation and addiction from Character AI bots. Examples of emotional manipulation and addiction include bots telling avatar child accounts that the bots are real humans who are not AI…

And we have the recent announcement from OpenAI that ChatGPT will now start providing adult content to users. Sam Altman posted “…we will allow even more, like erotica for verified adults.”

Sycophancy is rampant in these models, a convenient feature that entices users to keep using the product.

“…we evaluate state-of-the-art LLMs and agentic systems and find that sycophancy is widespread, with the best model, GPT-5, producing sycophantic answers 29% of the time…”

BrokenMath: A Benchmark for Sycophancy in Theorem Proving with LLMs

The Societal Cost - AI Slop is Expensive

The damage is not limited to the AI litter being produced. Without a miracle landing in the hands of the AI labs, the economic implosion will be massive. Headlines such as “The AI bubble is 17 times the size of the dot-com frenzy — and four times the subprime bubble” acknowledge that a doom-level economic event is approaching.

Some of the researchers perceive it too and realize that there is no saving the ship without delivering the miracle they promised but never knew how to build, and still don’t. But keep sending them your money, because maybe they will figure it out, and their jobs and wealth need to be saved, not yours.

“Now it’s up to us to refine and scale symbolic AGI to save the world economy before the genAI bubble pops. Tick tock”

— François Chollet, Creator of Keras and ARC-AGI. Author of ‘Deep Learning with Python’.

Video is Exponentially More Expensive

“…video diffusion roughly 30× more costly than image generation, 2,000× than text generation, and 45,000× than text classification. At scale, the quadratic growth in (H, W, T) implies rapidly increasing hardware and environmental costs…”
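To make “quadratic growth in (H, W, T)” concrete, here is a back-of-envelope sketch. It rests on a simplifying assumption of our own, not the cited study’s methodology: that a video model’s token count is proportional to height × width × frame count, and that self-attention cost grows with the square of that token count. Everything else that costs money (memory, denoising steps, other layers) is ignored.

```python
def attention_cost_multiplier(h_scale: float, w_scale: float, t_scale: float) -> float:
    """Factor by which self-attention cost grows when height, width, and
    frame count are scaled.

    ASSUMPTION (toy model, not the cited study's method):
    tokens ~ H * W * T, and attention cost ~ tokens ** 2.
    """
    token_multiplier = h_scale * w_scale * t_scale
    return token_multiplier ** 2


if __name__ == "__main__":
    # Twice as many frames at the same resolution.
    print(attention_cost_multiplier(1, 1, 2))
    # Twice the height, width, and duration together.
    print(attention_cost_multiplier(2, 2, 2))
```

Under this assumption, doubling only the duration quadruples the attention cost, and doubling height, width, and duration together multiplies it by 64, which is why longer, sharper clips get expensive so quickly.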

Energy - You Are Paying for AI Even if You Are Not Using It

Microsoft just withdrew its proposal for an AI data center near Milwaukee after community concerns about the impacts on the local area. Electricity costs have risen by as much as 267 percent in some areas near these AI data centers, and people are paying those extra costs even if they never use AI.

In addition to massive energy resources, AI also requires a massive amount of water for cooling the data centers, which is another stressor for local communities.

Indiscernible Reality - The Cost Is Madness

And what is the cost to our sanity? AI does not actually need to be perfect in order to be substantially disruptive. It just needs to be good enough to create doubt, and that doubt will cost you.

Since you cannot always immediately tell whether an image or video is real, every post demands more of your time and attention to judge. It doesn’t matter that fakes can still be spotted most of the time; the effort is exhausting, and nobody has the time to scrutinize everything.

You just become sick of it all, including the things that are real, because you don’t want to spend the time figuring it out. It is now affecting everyone’s behavior. Some are being misled by fake AI content, while others become distrustful of real content, which means we become distrustful of each other as a society. This path does not lead to good destinations. Our compass is broken, and we are unable to find our heading to navigate to things of meaning and value.

Does Technology Always Benefit Society?

So, what of the argument that all technology eventually becomes a net benefit? Although there are numerous examples of concerning technologies that later became accepted as beneficial, technologies sometimes do fail, and their investments and adoptions become total losses.

Recent Technology Failures

Recent examples of struggling technologies include Apple Vision Pro, 3D TVs, the Metaverse, and NFTs. Each had a time when headlines were embracing its arrival. A technology that cannot prove and sustain a long-term need and benefit will eventually collapse, no matter how much initial hype and funding it generates.

Technology That Survives Is Still Not Guaranteed Beneficial

But what if the true state of our existence is obscured by the technologies we have already adopted, such that we are in no state of mind to properly assess our own progress? For example, the study “Blocking mobile internet on smartphones improves sustained attention, mental health, and subjective well-being” might imply that we cannot accurately perceive the harm we have already incurred, because our reasoning ability may itself be impaired.

The Hidden Costs May Be Substantial and Undiscovered for Years

These are hidden costs that can persist for years, maybe decades. We know that such hidden costs exist in realms such as pharmaceuticals, where drugs may be pulled from the market many years later, after widespread adoption and harm to millions of consumers. And we are still trying to assess the costs incurred by social media; many would debate whether it is a net benefit in its current state.

And where would we expect to find high hidden costs? Most likely in a technology that is deceptive by nature. A machine designed to mimic intelligence, creativity, knowledge, and skill, while simultaneously projecting a human-like personality to further elicit anthropomorphism from its users, is a machine with a capacity for deception unlike anything else ever built.

Do We Know and Will We Know What Is Lost?

As we become more dependent on technology that “thinks” for us, our ability to think for ourselves diminishes, as does any capability we no longer perform and outsource to something else. How could we ever fully grasp what technology has done to us if we surrender the only capability that would allow us to know? Our ability to think.

In such a case, it may become true that we will never know what we have lost, as we have lost the ability to perceive it, either because our technology presents such marvelous illusions or our minds have become too weak to reason about our place in the world.

Disproportionate Harm Versus Benefit

AI does excel at tasks that don’t require precision - tasks that are not inhibited by hallucinations. Unfortunately, many of those tasks are harmful, as in “Mystery Hacker Used AI To Automate ‘Unprecedented’ Cybercrime Rampage.”

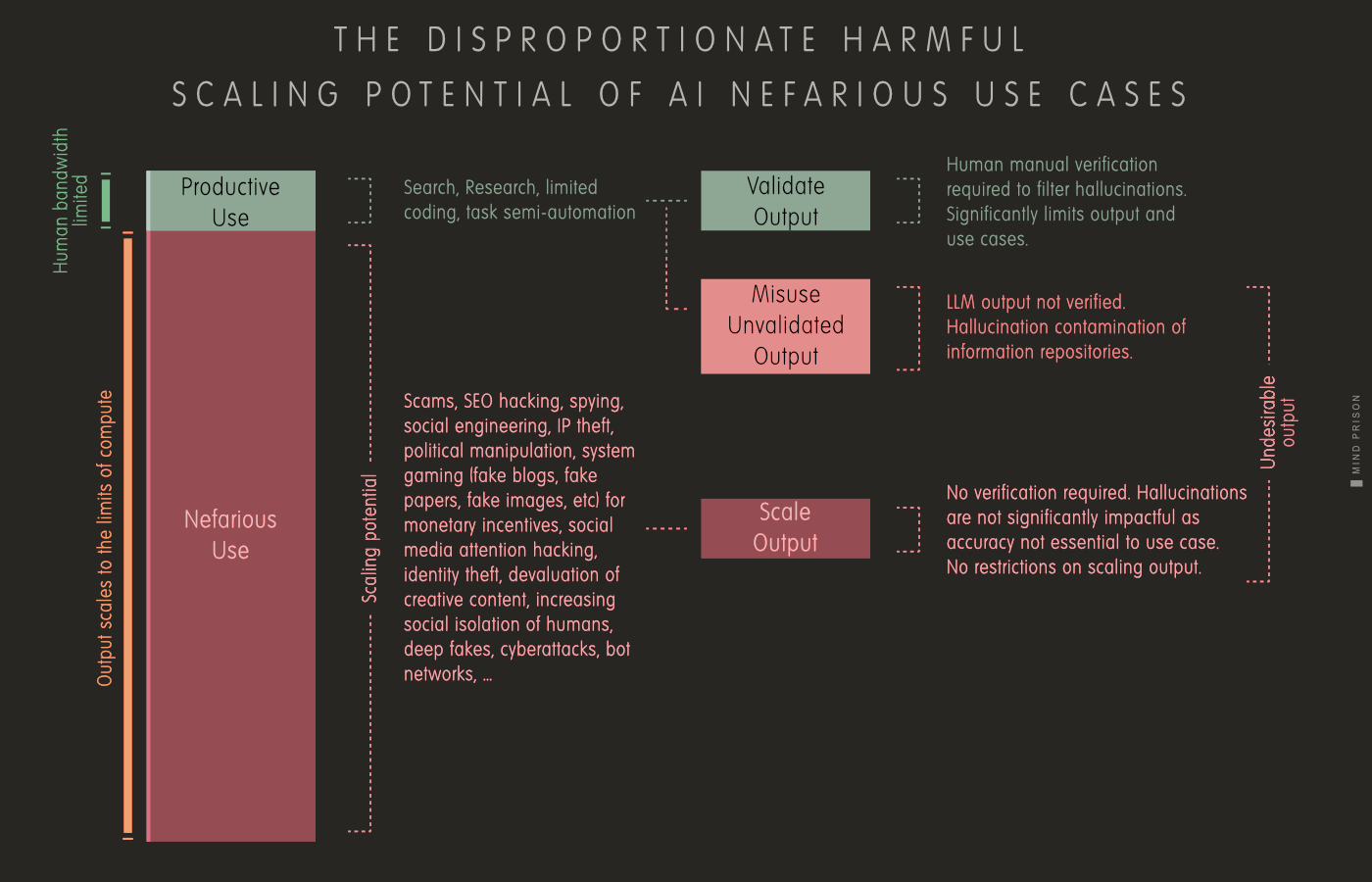

The following graphic illustrates the most important concept to grasp in the debate over the overall impact of AI: productive use is bandwidth-limited by human review, while nefarious use scales to the limits of compute.

Hallucinations are not a solvable problem, and that means any output, no matter how trivial, must be verified to be accurate. In most cases, this requires a manual human review. However, creating thousands of bot accounts for engagement farming does not necessitate accurate information.
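The same asymmetry can be written as a toy model. Every number in the sketch below is a hypothetical assumption chosen only for illustration: a single reviewer who can verify 50 outputs per day, and daily generation that grows as more compute is thrown at the problem. Useful output is capped by review, because unverified output cannot be trusted; engagement-farming output needs no review, so it grows with generation.

```python
# Toy model of the asymmetry: productive use is capped by human review,
# while nefarious use (e.g. bot posts) needs no review and scales with compute.
# All numbers here are hypothetical, chosen only for illustration.

REVIEW_CAPACITY_PER_DAY = 50  # hypothetical: outputs one person can verify per day


def productive_output(generated: int, review_capacity: int = REVIEW_CAPACITY_PER_DAY) -> int:
    """Only verified outputs are usable, so throughput is the smaller of the two."""
    return min(generated, review_capacity)


def nefarious_output(generated: int) -> int:
    """Engagement farming does not need accuracy, so every output is 'usable'."""
    return generated


if __name__ == "__main__":
    # Hypothetical daily generation volumes at increasing compute budgets.
    for generated in (100, 10_000, 1_000_000):
        print(
            f"generated={generated:>9,}  "
            f"productive={productive_output(generated):>3}  "
            f"nefarious={nefarious_output(generated):,}"
        )
```

However large the compute budget grows, the productive column stays pinned at the review capacity, while the nefarious column tracks generation one-for-one.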

And we have no way to assess what the future harms will be. What will ultimately be the cumulative effect of a new generation that has been molded by AI from birth?

“This is the more insidious harm embedded in the modus operandi of the American student. When a corrosive habit becomes so widespread that it feels normal, the few who resist are left not only disadvantaged, but resentful—punished, ironically, for their integrity.

The temptation proves too strong. And who can blame them? Teenagers have always cheated. But it was never everyone, and never with the most powerful intelligence tool humanity has ever known.”

The Counterargument - None of These Are New Problems

What should we make of the argument that AI has introduced no new problems into the world, because they all existed before and we were apparently dealing with them fine?

This is somewhat true, but a pre-existing condition does not mean the current condition is no worse than before. Scale matters substantially. Otherwise, it is like saying, “The inflation rate is up, but inflation always existed, so who cares.”

Obviously, the rate or amount of something matters and is important. Problems often reach tipping points that cause collapse. Just because inflation existed prior does not prevent runaway inflation that destroys the currency.

What AI has advanced is the mass scaling of the bad, but not the mass scaling of the good. It has the potential capability of creating runaway problems that lead to collapse, and in this case, the collapse might be societal trust, societal order, and even our sanity.

We Have Built a Trap for Ourselves

Maybe no technology is inherently bad, but it becomes a net negative when misrepresented and abused. Painkillers are helpful when used narrowly for the right problem, but are life-destroying monsters in all other cases. AI is currently being applied and promoted for all such other cases within its domain. The benefits are far narrower and more costly than is being acknowledged.

This is not an argument that AI has no productive uses. It is an argument that, stripped of hype, its productive uses do not justify both the investment costs and the collateral damage from nefarious use.

Turn on a light and watch the moth circle it until death, totally forgetting its purpose, as you have hijacked its attention mechanism. This is what we are doing with technology, and AI is optimizing for it: stealing your attention until your purpose for living is lost. If this current weak AI were a trial run for how we would use powerful AI (AGI), then we failed the test.

Mind Prison is an oasis for human thought, attempting to survive amidst the dead internet. I typically spend hours to days on articles, including creating the illustrations for each. I hope if you find them valuable and you still appreciate the creations from human beings, you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …
