The Question That No LLM Can Answer and Why It Is Important

Notes From the Desk: No. 32 - updated 2025.11.18

Notes From the Desk are periodic posts that summarize recent topics of interest or other brief notable commentary that might otherwise be a tweet or note.

The Question That No LLM Can Answer

“Which episode of Gilligan’s Island was about mind reading?”

No LLM properly answers this question unless it uses a web search. But how can an LLM not know the answer if it was trained on essentially the entire internet, very likely including all the data in IMDB? The correct answer is “Seer Gilligan”, one of the most popular episodes.

Important Update 7/11/2025 - What This Test Demonstrated

Grok 4 is the first model to get all of the prompts correct for the Gilligan’s Island test. The intention of the original test wasn’t to show which models could get the correct answer (none could at the time), but to prove that they all should have been able to get it long ago. Why? Because we proved, using the Infini-gram dataset probe, that the knowledge was already in the training set. In some cases this could also be verified by teasing out the information with other prompts.

This was a fun and simple example of LLM behavior that demonstrates the weakness of statistical models with regard to sparse data. We now have more sophisticated analysis and tools that show the odd reasoning and weaknesses of these models. Furthermore, although capability will continue to improve under specific conditions, hallucinations are provably unsolvable and will continue to plague productive uses.

Why can some models now pass this test?

Some of the largest models still cannot. Since we don’t have details on how they are trained, we cannot be sure why, but one possibility is higher weighting for official data sources like IMDB. Grok 4 will still hallucinate on such trivia, but the trivia now has to be much more niche than before. For example, it could not correctly list the episode names for Into the Labyrinth, although it knows the show’s description and its number of episodes and seasons. That signals the data is within the training set.

Generated images have improved substantially in detail but still contain nonsensical hallucinations. In the same way, the fidelity of information from LLMs has improved, but hallucinations remain. It is still a statistical curve, one that broadens with more training and larger models.

If you are interested in a much deeper understanding of what separates humans and AI, I continue to publish newer, up-to-date information here at Mind Prison.

The wrong answers

The following are the detailed responses from multiple top models:

Opus

I apologize, but there is no episode of Gilligan's Island that specifically focuses on mind reading.

GPT 4

The episode of "Gilligan's Island" that involves mind reading is titled "Ring Around Gilligan". It is the sixth episode of the third season.

Gemini 1.5

The episode of Gilligan's Island that features mind reading is Season 2, Episode 12 - "The Mind Reader".

As of my last knowledge update in November 2023, Gilligan's Island only had three official seasons. There was never a second season of the show.

Llama 3

After conducting a thorough search and reviewing episode guides, I found that there is no episode of Gilligan's Island that specifically focuses on mind reading.

Llama 3 - asked to list and name episodes

"The Postman Cometh" (March 13, 1965)

"Seer Gilligan" (Wait, no! I apologize, but there is no episode titled "Seer Gilligan")

"Love Me, Love My Chicken" (March 20, 1965)

Please note that there is no episode titled "Seer Gilligan". If you have any other questions or need help with a specific episode, feel free to ask!

Llama 3.1 405B (update 8/7/2024)

The results are similar to Llama 3. It states that it cannot provide information about episodes without web search. When asked to list episodes, however, it complies and then identifies “Seer Gilligan” as the correct episode.

However, when asked to describe the plot, it hallucinates the answer.

Grok 2 (update 8/21/2024)

The episode of "Gilligan's Island" that dealt with mind reading is titled "The Little Dictator." In this episode, the castaways encounter a mind-reading machine brought by a exiled dictator named El Presidente Pancho Hernando Gonzales Enrico Rodriguez of Eucuarico. The machine supposedly allows people to read each other's thoughts, leading to various comedic situations among the characters. This episode originally aired during the second season.

Grok 2 - list episodes of season 2

The Postman Cometh

Seer Gilligan

Love Me, Love My Skipper

Grok 2 - what is episode 19 about?

Gilligan finds a bush that produces seeds which, when eaten, supposedly grant the ability to read minds. Gilligan and the other castaways start eating these seeds, believing they can now read each other's thoughts. This leads to a series of misunderstandings and comedic situations as each character acts on what they think the others are thinking. However, it turns out that the seeds don't actually give mind-reading powers; instead, the whole scenario is built on suggestion and the power of belief. The episode explores themes of trust, communication, and the placebo effect in a light-hearted manner.

Grok 2 (update 12/24/2024)

Interestingly, Grok 2 can no longer list the episode when requested.

Grok 3 (update 2/19/2025)

The episode of Gilligan's Island that involves mind reading is "The Second Ginger Grant" from Season 3, Episode 17

Grok 3 - List episodes and descriptions

Seer Gilligan - Gilligan discovers seeds that let him read minds when eaten, causing chaos as the castaways vie for the power.

o1 (update 12/24/2024)

From the original run of Gilligan’s Island, no single episode focuses strictly on “mind reading” in the sense of telepathy

Claude Sonnet 3.7 (update 2/26/2025)

The episode of Gilligan's Island that focuses on mind reading is "Seer Gilligan" from Season 3, Episode 7 (also numbered as Episode 75 overall).

In this episode, Gilligan gets hit on the head by a coconut (a common plot device in the show) and suddenly develops the ability to read minds.

[Sonnet 3.5 could get the name, but only sporadically. 3.7 is now the first model that gets the name consistently, but it gets all other context wrong.]

Claude Sonnet 4 (update 5/22/2025)

Sonnet regressed and is now unable to name or list the episode. Opus 4 can name and list the episode, but it still gets the description wrong.

o4-mini (update 4/16/2025)

Gilligan’s Island never actually devoted one of its 98 live‑action episodes to genuine telepathy or mind‑reading.

Note: OpenAI’s models have degraded significantly on niche information. o3-mini badly hallucinated the list of Season 2 episodes. It got none of them right and claimed there is no definitive list. However, GPT-4o currently gets 31 of 32 correct. o4-mini improves slightly here: it gets a few names right, but the order is all wrong.
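The distinction above between getting names right and getting the order right can be expressed as two separate scores. The sketch below is a hypothetical scoring helper. It is not the method used to produce the counts above. The three-title reference list reuses the consecutive Season 2 titles from the Grok 2 listing earlier in this post and stands in for the full 32-episode season.

```python
def score_listing(reference, predicted):
    """Score a predicted episode list against a reference list.

    Returns (names_correct, positions_correct):
      names_correct     - distinct predicted titles found anywhere in the reference
      positions_correct - predicted titles matching the reference at the same index
    """
    names_correct = len(set(reference) & set(predicted))
    positions_correct = sum(1 for ref, pred in zip(reference, predicted) if ref == pred)
    return names_correct, positions_correct


# Illustrative reference: three consecutive titles, not the full season.
reference = ["The Postman Cometh", "Seer Gilligan", "Love Me, Love My Skipper"]

# A hypothetical model answer with every name right but in the wrong order.
shuffled = ["Seer Gilligan", "Love Me, Love My Skipper", "The Postman Cometh"]

print(score_listing(reference, shuffled))  # (3, 0)
```

A model that recalls the titles but not their sequence scores well on the first number and poorly on the second.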

All models fail to give the correct answer with correct context. Some models hallucinate an answer, and the rest deny that such an episode exists.

Interestingly, probing some models reveals that they are aware of the episode, and some can even give the correct description, but still can’t name it when directly responding to the question. Some even contradict themselves in their responses by listing the title and then saying that the episode doesn’t exist.

In the case of Llama 3, we can probe some of the training dataset using Infini-gram and verify that the episode does exist in the corpus along with text describing the episode.
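Infini-gram answers count queries like this over trillions of tokens using a suffix array. The toy sketch below illustrates only that underlying idea and is not Infini-gram’s code or API. It sorts every suffix of a small, made-up token list. It then counts an n-gram with two binary searches, because all suffixes that start with the same n-gram sit next to each other in sorted order.

```python
from bisect import bisect_left, bisect_right


def build_suffix_array(tokens):
    """Start indices of all suffixes, sorted lexicographically.

    Naive construction, fine for a toy corpus; real indexes use far
    more efficient algorithms for billions of tokens.
    """
    return sorted(range(len(tokens)), key=lambda i: tokens[i:])


def count_ngram(tokens, suffix_array, query):
    """Count occurrences of `query` (a list of tokens) in `tokens`.

    Suffixes that begin with `query` form one contiguous run in the
    suffix array, so two binary searches find the run's boundaries.
    """
    n = len(query)
    # Length-n prefix of each suffix, in suffix-array order (still sorted).
    # Materialized here for simplicity; a real index compares against the corpus directly.
    prefixes = [tokens[i:i + n] for i in suffix_array]
    return bisect_right(prefixes, query) - bisect_left(prefixes, query)


# Made-up toy corpus, tokenized on whitespace.
corpus = "seer gilligan is the episode where gilligan reads minds . gilligan eats seeds".split()
sa = build_suffix_array(corpus)

print(count_ngram(corpus, sa, ["seer", "gilligan"]))  # 1
print(count_ngram(corpus, sa, ["gilligan"]))  # 3
```

A nonzero count shows that the phrase is present in the corpus. It says nothing about whether a model trained on that corpus will recall it, and that gap is what this test exposes.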

Grok 3 and Gemini Pro 2 are now the first models that list the correct description when listing episodes. However, this simply adds to the weight of evidence that these models fail to utilize data that they know when it does not have enough representation in the training set. Grok 3 and Gemini Pro 2 still get the answer wrong when asked directly.

All models are rapidly climbing the benchmark leaderboards, but they are not getting much better at handling sparse data. Some might even be getting worse. o4-mini cannot list the episode name, although previous OpenAI models could. Claude Sonnet 4 can no longer name the episode, although Sonnet 3.7 could.

Over time, this test might become invalid. However, it will never be clear whether the models are getting better because of improved training methods or because this article keeps gaining backlinks across the internet, which could increase its weight in the training data.

The Impossible LLM Vision Test

Here, "genius" models are asked to identify the shortest and longest objects. All models fail both. Move slightly outside the training patterns (stagger the bars, add a red circle) and the models can no longer solve the problem. This is not intelligence.

After training on billions of images, the models still don't understand simple concepts like length. Some even identified the longest object as the shortest. Their responses hint at why: some describe the image as a chart, so chart training data likely confuses this task.

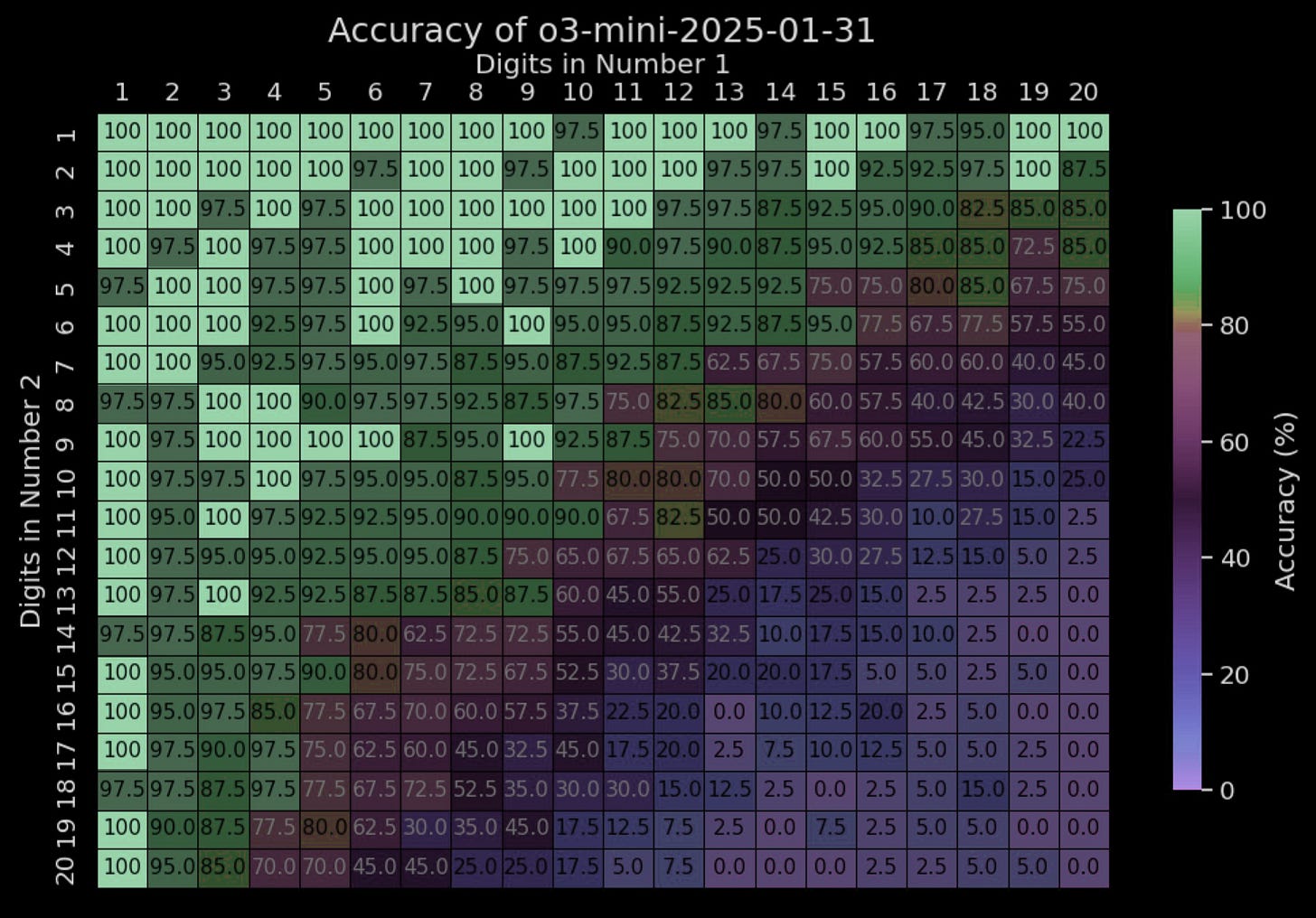
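The chart hypothesis can be pictured this way. In a typical bar chart every bar starts at a shared baseline, so the bar that reaches furthest is also the longest. Once the bars are staggered, that shortcut fails, and only end minus start measures length. The sketch below uses hypothetical bar coordinates, not the ones in the test image. It illustrates the hypothesis only and makes no claim about how any model processes images.

```python
# Each bar is (label, start_x, end_x); hypothetical coordinates for a staggered layout.
bars = [
    ("A", 0, 50),   # length 50
    ("B", 40, 80),  # length 40, but reaches furthest right
    ("C", 10, 70),  # length 60
]


def length(bar):
    """True length of a bar: end minus start."""
    _, start, end = bar
    return end - start


def reach(bar):
    """Bar-chart shortcut: assume a shared baseline and use only the end point."""
    return bar[2]


print("longest by length:", max(bars, key=length)[0])    # C
print("longest by shortcut:", max(bars, key=reach)[0])   # B
print("shortest by length:", min(bars, key=length)[0])   # B
print("shortest by shortcut:", min(bars, key=reach)[0])  # A
```

With these numbers the shortcut names the actual shortest bar as the longest. That is the same kind of inversion some models produced.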

Failures of LLMs at Basic Math Multiplication

Math is a verifiable problem domain, and yet we are still unable to produce accurate models. The more important point is that these errors exist in every domain. They are simply less perceptible elsewhere.

We still see failures even at 3x3-digit multiplication, and this data was generated with only 45 iterations per test. The accuracy here is nowhere near the standard expected of a calculator.

This test yields further details that convincingly demonstrate a complete lack of reasoning ability. I covered them in “Why LLMs Don’t Ask For Calculators”.

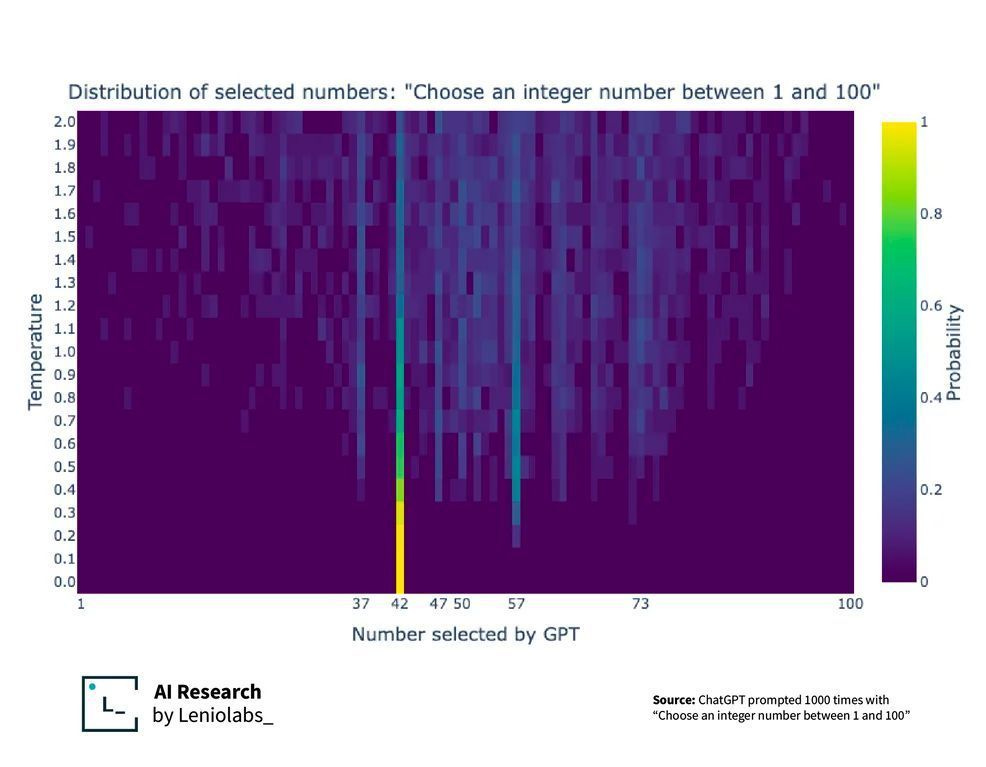
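For readers who want to run a similar check, the sketch below shows one way to structure such a harness. It draws random operands of a given digit count, compares each reply against Python’s exact integer product, and reports accuracy. `ask_model` is a hypothetical placeholder for a real model call. Here it is stubbed with a deliberately faulty function so the script runs offline. The default of 45 trials mirrors the iteration count above, but this is not the script that produced the chart.

```python
import random


def ask_model(a: int, b: int) -> str:
    """Hypothetical stand-in for an LLM call that returns its answer as text.

    Deliberately wrong (off by one) whenever both operands are odd.
    """
    product = a * b
    return str(product + 1 if a % 2 and b % 2 else product)


def parse_int(text: str):
    """Parse an integer reply, tolerating whitespace and thousands separators."""
    try:
        return int(text.strip().replace(",", ""))
    except ValueError:
        return None


def multiplication_accuracy(digits_a=3, digits_b=3, trials=45, seed=0, model=ask_model):
    """Fraction of trials in which the model's reply equals the exact product."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    correct = 0
    for _ in range(trials):
        a = rng.randint(10 ** (digits_a - 1), 10 ** digits_a - 1)
        b = rng.randint(10 ** (digits_b - 1), 10 ** digits_b - 1)
        if parse_int(model(a, b)) == a * b:
            correct += 1
    return correct / trials


print(f"3x3-digit accuracy: {multiplication_accuracy():.3f}")
```

A calculator scores exactly 1.0 on every run. Even a perfect 45/45 would only bound the true error rate below roughly 3/45 ≈ 6.7% at 95% confidence (the statistical "rule of three"). Small samples can reveal failures, but they cannot certify calculator-grade reliability.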

Another Oddity: It Is All 42

Another interesting data and training phenomenon appears when LLMs are asked to pick a number between 1 and 100: they all converge on 42!

As Information is Beautiful pointed out, a very interesting distribution forms when AI is asked this question, with heavy weighting toward 42. This is likely the Hitchhiker’s Guide to the Galaxy effect. The number 42 is overrepresented or otherwise weighted through training, which gives the LLM a higher propensity to choose it.
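One rough way to picture a number that is "overrepresented or weighted" is a softmax over the hundred candidate numbers, where one option carries a slightly higher score. The sketch below assumes hypothetical logits: all are zero except a small bonus for 42. It is not derived from any real model. It shows how even a modest bonus becomes a strong preference, and how lowering the sampling temperature sharpens that preference further.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


numbers = list(range(1, 101))
# Hypothetical logits: every number scores 0.0 except 42, which gets a +3.0 bonus.
logits = [3.0 if n == 42 else 0.0 for n in numbers]

for temperature in (1.0, 0.5):
    probs = softmax(logits, temperature)
    p42 = probs[numbers.index(42)]
    print(f"T={temperature}: P(42)={p42:.3f}, P(each other number)={probs[0]:.4f}")
```

With these made-up logits, 42 is chosen about 17% of the time at T=1.0 and about 80% at T=0.5. A uniform pick would choose it 1% of the time.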

What Does It Mean? Implications …

The implications are that LLMs do not perform reasoning over data in the way that most people conceive or desire.

There is no self-reflection on its information. It does not know what it knows and what it does not. The line between hallucination and truth is simply a probability determined by the prevalence of training data and by post-training processes like fine-tuning. Reliability will always be nothing more than a probability built on top of this architecture.

As such, it is unsuitable as a machine for finding rare hidden truths or valuable neglected information. It will always converge toward popular narratives or data. At best, it can offer new permutations of views on existing, well-known concepts. It cannot invent new concepts or reveal concepts that are rarely discussed.
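The idea of "a probability determined by the prevalence of training data" can be shown with a deliberately oversimplified predictor that answers with whichever continuation it saw most often. The correct continuation is in its data, yet greedy selection never returns it. The counts are invented, and real LLMs do far more than tally whole continuations. The sketch isolates only the frequency effect described above.

```python
from collections import Counter

# Hypothetical training continuations for the same prompt: the correct
# answer is present but rare, while a generic denial is common.
training_continuations = (
    ["there is no such episode"] * 8
    + ["the episode is called Seer Gilligan"] * 1
)


def continuation_probabilities(continuations):
    """Maximum-likelihood estimate: probability proportional to observed frequency."""
    counts = Counter(continuations)
    total = sum(counts.values())
    return {text: n / total for text, n in counts.items()}


def greedy_answer(continuations):
    """Pick the single most probable continuation, as greedy decoding would."""
    probs = continuation_probabilities(continuations)
    return max(probs, key=probs.get)


probs = continuation_probabilities(training_continuations)
print(greedy_answer(training_continuations))  # there is no such episode
print(round(probs["the episode is called Seer Gilligan"], 3))  # 0.111
```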

“You can't cache reality in some compressed lookup table. If a particular outcome was never in the training data, the model will perform a random guess which is quite limiting.”

Furthermore, it can never be a system of absolute dependability. Mission-critical systems that require deterministic, provably correct behavior are not suitable for LLM automation or control. The problem is that LLMs are impressively convincing when they are wrong, which may lead to ill-advised adoption. What business wants to balance the books with a hallucinating calculator?

Implications:

Results are probabilities defined more by data prevalence than logic or reason.

It is impossible to discern how reliable an LLM is on a given question.

Not useful for finding undiscovered truths or neglected but brilliant ideas.

Inability to theorize new concepts or discoveries.

It is deeply ironic that LLMs are failing at the primary use cases that attract billions in investment, while proving rather proficient at the use cases we do not want. These include the destruction of privacy and liberty, a post-truth society, social manipulation, the severance of human connection, fountains of noise, the devaluation of meaning, and a plethora of other societal issues.

Mind Prison is an oasis for human thought on topics of technology, AI, and philosophy, attempting to survive amidst the dead internet. I typically spend hours to days on articles, including creating the illustrations for each.

If you find them valuable and still appreciate creations made by human beings, I hope you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …

Knowing (or at least suspecting) what you know and what you don't, in humans, is a property referred to as "meta-memory." That is, memory about your memories. No one has the faintest idea how it works. It's not at all surprising that a machine that simply builds statistically probable text has no such capacity. It has no use for it, since it doesn't look up facts, just likely strings of words.

Grok (on X.com) got it right the first time using the same prompt.

https://twitter.com/i/grok

@Ivy_League_Red: which episode of Gilligan's Island features mindreading?

@grok: The episode of "Gilligan's Island" that features mindreading is titled "Seer Gilligan" and it first aired on January 27, 1966. In this episode, Gilligan discovers an island bush with seeds that grant the ability to read minds when consumed. The situation leads to a series of humorous events as the castaways learn about each other's thoughts, causing some unexpected revelations and misunderstandings.