AI accelerates post-truth civilization

The erosion of verifiable reality and the embrace of fiction will cost us greatly

An existence in which truth and reality cannot be verified is untenable. Those who cannot distinguish reality from fiction have often been relegated to the confines of the asylum. However, we may soon find that we all exist in such a state of mind, imposed upon us by both technology and the societal culture it promotes.

There seem to be parallel evolutions both leading to this outcome. Technology is providing more impressive means to construct fake and deceptive content which is a phenomenon despised by nearly everyone seeking to understand anything about the world. However, simultaneously a large portion of society is embracing the creation of such content for the fame and glory of social media status and attention.

This duality likely even exists within the same individuals. Those who present a deceptive or enhanced persona of themselves simultaneously complain about all the misinformation that exists within the world.

Face filters and such have normalized the acceptance of a fake existence. There is little resemblance of an online “reality” anymore on much of social media. It is all about seeking attention by any means necessary.

What is post-truth

It is both the inability to discover truth and the embrace of fiction pretending to be truth. It comprises a vast set of components: virtue-signaling social media, reality filters like face filters, generated media hooks for engagement and attention, deceptions, scams, disinformation, social engineering and state propaganda. All of these are leveling up in capability due to rapid advancements in AI technology.

Some will state we have already been in a post-truth society. Others believe it to be a state of equilibrium from the beginning of time between truth and lies. It has always been here and always will be. Therefore, it is a moot point to consider.

However, I will argue that, to whatever degree it already exists, we can quantitatively build support for the claim that the phenomenon is increasing in effect.

The era of unreality

We have built the tools for the construction of elaborate convincing masquerades of our choosing. It is not that we are entering the virtual world. We are bringing the virtual into our own to replace our reality. We are convincing ourselves of the fiction we can create and believing it to be real.

As a society, there is a broad movement encompassing multiple facets and dynamics that are making it a norm to reject the physical presentation of ourselves and embrace a new construction of being to align with what we want to be. However, is this need inbuilt or a result of social conditioning that makes us unhappy with our natural state?

There is a growing divergence between what and who we are and that which we pretend to be. The line of illusions begins to blur and the distinctions of what is real become unclear. It is mutually understood that avatars in a game are fantasy, a temporary escape. However, the pretend world has begun merging with the real world through social media filters. A primary means of communication among much of society facilitates building deceptive facades.

Skill adoption

It is not only about the false projection of appearance but also the adoption of skills that we don’t have.

At some point, technology within some domains reaches diminishing returns for the upper band of skill and begins to compress the lower band of skill into the upper. This essentially means those at the top gain fewer advantages than those at the bottom. The distinction begins to disappear.

“Billie Eilish being a great singer is not getting the credit that she deserves because pitch correction and auto-tune are so widely used in the industry.

So it means that yeah unfortunately artists don't get the credit they deserve and artists who can't sing very accurately get the same credit as those who actually can do it.”

Auto-tune is a minor capability compared to what is coming: complete song production from a prompt, just like image and video generation. The incentives to do something real continue to diminish when the result is no longer discernible. Wings of Pegasus makes the point that much of society is losing the ability to discern true skill. The motivation to put in extraordinary amounts of work to accomplish something impressively unique will likewise diminish.

The capability to portray skills that we don’t actually have will rapidly expand into all domains. This has already become a controversial topic for AI art.

Attention loves fiction

Attention-seeking isn’t always about projecting an imaginary persona of oneself. It also loves any emotional drama. This motivates the creation of lots of fake content to trigger emotional responses. The reward is, of course, lots of attention, which is the fuel for the social media machines and the drug of many of their users.

The fiction generated for social media attention will continue to become both more convincing due to capability and more extreme due to competition for attention.

Embracing fiction

These are the social untruths that are now widely accepted. These are the societal reasons vast numbers are voluntarily creating the very problem we oppose. It is not just politics and state governments creating propaganda for social engineering, but great numbers are doing so simply to satisfy the attention addiction in some form.

We have seemingly two motivations coming from distinctly different places pushing society into the despair of fiction replacing reality. Those who seek fame and those who wish to control others.

The counterarguments

My recent post, Post-truth society is near, received some attention on Hacker News. Going through all the comments, the ratio of no concern to concern was about 3 to 1. In other words, a significant majority see little issue with the rapidly increasing capability of AI to produce deceptive content. That disproportionate viewpoint led me to create this longer-form article to go further in-depth on this topic.

As anticipated in the previous article, the predominant counterargument was that it is the same problem that has existed since the beginning of time. AI adds nothing new to this equation that will have any significant effect worthy of greater concern.

Below are some collections of comments from the previous post and my thoughts on each critique.

“Uh... Post-truth has been here awhile. They’ve had the capacity to fake/alter live events for decades now.”

“Generative AI doesn't change the equation and was never a requirement to successfully manipulate public opinion. Hell, most of these problems with misinformation are probably as old as the emergence of sedentary human societies.”

“There is so much baseless hysteria about this subject.”

“This argument around cost of fake content would be more convincing if it weren't already used countless times throughout history.”

Of course, it changes the equation. How could it not do so? It is significantly more capable of producing higher quality deceptive content at large scale and low cost. The quality is approaching the point at which content cannot be forensically examined and determined to be genuine or not.

Prior to modern technology there existed a balance between truth and fiction as each could only compete in linear escalation with the other. The upper bounds of criminal behavior were held in place by several facets of civilization at that time.

Trust via reputation - Reputation was somewhat of a distributed multi-sourced analysis. It was a valued asset for expanding communication and influence.

In-person witness - Information was often verified by showing the receipts in person. Physical proof of goods for exchange.

Non-anonymous person to person - Most communication was not anonymous from person to person in the general public. This was essential for reputation to be meaningful.

Privacy from the state - Governments and large corporations did not have the means to monitor the citizens. Without such threats to privacy, there was greater transparency between citizens, who had no need to hide their identity from the public.

Linear scale information - Manual human resources were required to create information. Mass-scale automation did not exist. It was far more difficult to create substantially more information than could be analyzed.

“Socrates saying that writing will atrophy people's memories. The priest's fear that books will replace them when it comes to preaching. Gessner and his belief that the unmanageable flood of information unleashed by the printing press will ruin society.

The social dilemma, and all those that were convinced social media would spell the end of modern society.

Instead each of these technologies improves access to information and makes it easier for most to determine the truth via multiple sources. I'd imagine in the future there will be many AI agents that can help to summarize the many viewpoints. Just like anything, don't trust any one of them in isolation, consider many sources, and we'll be fine.”

It is certainly true that history has demonstrated we are often overly concerned about the use of technology and those concerns are often proven wrong. However, in this case, we don’t have to speculate. We already see how the technology is being utilized and for what purposes and the difficulty in containing such nefarious uses.

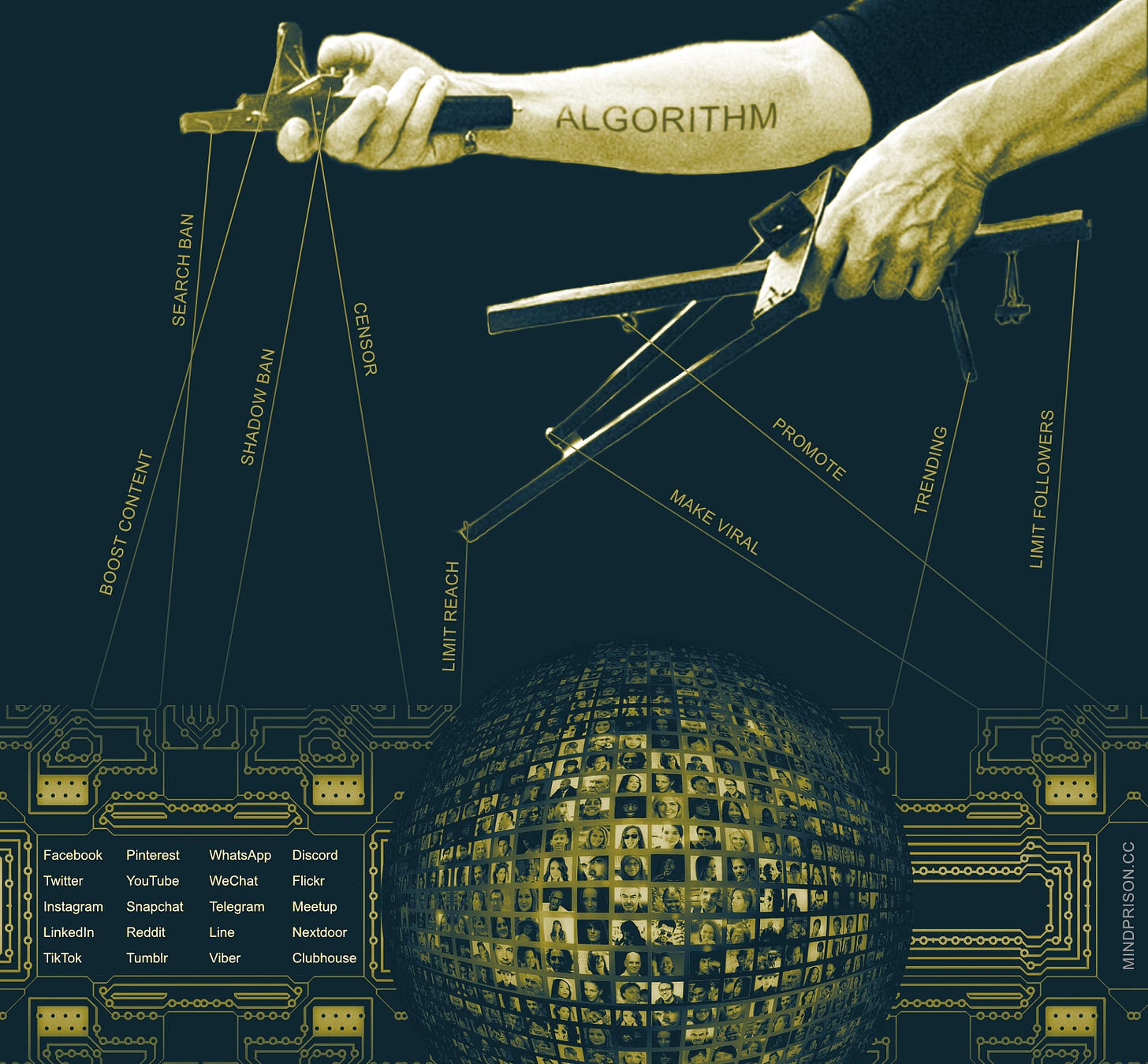

I also would not take the implications of The Social Dilemma so lightly. It exposed just how influential social media is on the fabric of truth. So much so that even those who built the algorithms admitted to falling under the influence of their creation. I believe The Social Dilemma lands on the side of evidence for the cautionary use of technology and certainly not the disregard of such. It did not end all of modern society, that seems to be an overstatement in an attempt to leverage the opposing argument, but it certainly shows how technology is influencing all of society to the point of shaping what are commonly held beliefs that then become a reflection of how we govern ourselves.

“The people who were likely to believe falsehoods unquestioningly probably already do. How much does the ease of making new convincing falsehoods ensnare new people”

The above statement assumes there are a fixed number of people susceptible to falsehoods and then there is everyone else. It presumes that this doesn’t change despite the quality of fake information. However, the key to successful consumption of propaganda is always presenting it such that it appears trusted.

If you can fake trusted sources then you can fool anyone. The number of intelligent people who fall for scams increases as the scam becomes more sophisticated and convincing.

This video is a great summary of what people are already dealing with in this regard. The sentiment is that nefarious use is rapidly expanding while we still don’t have answers as to any countermeasures.

“There is absolutely no chance whatsoever that robots that are so real they are indistinguishable from people will come about in our lifetimes.”

This viewpoint is in stark contrast to the expectation that we will now have AGI within our lifetimes. Some think as soon as a few years. If AGI is achievable, then realistic robotics will certainly be achievable. Humans are terrible at perceiving exponential timelines. The trend over the past year has continued to be surprises of progress ahead of estimations.

Furthermore, the baseline for what we already have is getting quite good. This short clip is a demonstration of EX Robotics from the China Robotics Expo.

Combine these advancements with the recent accomplishments of Google with RT-X and you have much of this already solved. RT-X will likely lead to robotics with natural human movement that can complete any physical task that humans can do. The early results from the multimodal AI show that it will offer more than just integration of capabilities; each specific capability improves with the addition of other modalities. I expect robotics to begin improving just as rapidly as language and images very soon.

Nvidia has also recently had a major innovation in robotics training. Everything is accelerating:

“I'm excited to announce Eureka, an open-ended agent that designs reward functions for robot dexterity at super-human level. It’s like Voyager in the space of a physics simulator API!”

— Dr. Jim Fan, senior Nvidia AI scientist

It would seem very likely that robotics progresses to the point they can be indistinguishable from humans at least at a distance. Close examination and touch seem far out of bounds for now, but if AGI is achieved as most predict then we don’t know what will be out of bounds.

The burden of cost

How can we know if this current evolution is any different from the struggles humanity has always endured regarding discernible reality? We can take some clues from related data that give some insights into the direction technology is taking us.

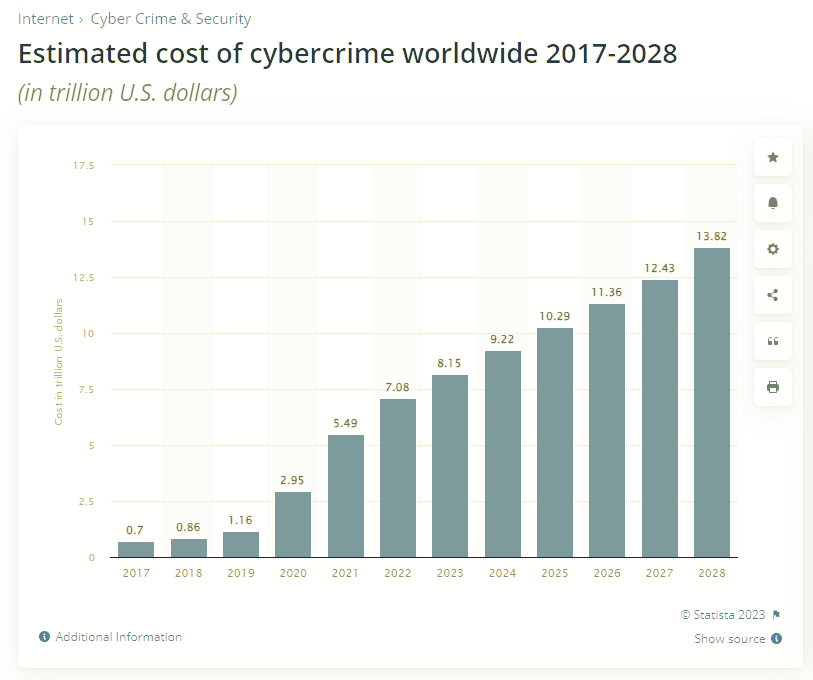

Keeping technology safe from misuse or defensively protecting against weaponized technology is a rapidly growing burden of cost for all of society. The chart below shows the current and estimated future lost revenue due to cybercrimes. The numbers are in trillions!

The amount companies are spending to protect against cyber attacks is also increasing at an extraordinary pace.

In 2004, the global cybersecurity market was worth $3.5 billion — and in 2017 it was expected to be worth more than $120 billion. The cybersecurity market grew by roughly 35X over 13 years entering our most recent prediction cycle.

Here is the growth with the current 2023 estimate of $188 billion.

We have both increasing losses and increasing costs of proactive defense.
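To put the quoted market figures on a per-year footing, the arithmetic can be sketched as follows. This is only an illustration under stated assumptions: the $3.5 billion (2004) and $120 billion (2017) values are the ones quoted above, the function names are made up for this example, and the 3% comparison rate is an assumed placeholder for ordinary economic growth rather than a measured figure.

```python
def growth_multiple(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by start and end values."""
    return (end / start) ** (1 / years) - 1


def compounded_multiple(rate: float, years: int) -> float:
    """Growth multiple after compounding a fixed yearly rate."""
    return (1 + rate) ** years


# Figures quoted in the text, in billions of dollars.
market_2004 = 3.5
market_2017 = 120.0
years = 2017 - 2004

multiple = growth_multiple(market_2004, market_2017)
rate = annual_growth_rate(market_2004, market_2017, years)

# Assumed comparison: something growing at 3% per year over the same span.
baseline = compounded_multiple(0.03, years)

print(f"Security market multiple: {multiple:.1f}x")
print(f"Implied yearly growth:    {rate:.1%}")
print(f"3% per year compounds to: {baseline:.2f}x")
```

The multiple comes out a little over 34x, which matches the "roughly 35X" in the quote and implies growth of a bit more than 31% per year, while a quantity compounding at 3% per year grows by less than 1.5x over the same 13 years.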

Small business cost

E-commerce has made client relationships more difficult. Sensitive triggers cancel accounts, costing businesses substantial losses and enough frustration that some give up. Many small business owners will attest to the difficulties in trusting a platform provider. An often familiar message to anyone who has launched an online store is, “We have detected suspicious activity and have suspended your account.”

It is the case of nefarious activities becoming an increasing burden for everyone else attempting to run a legitimate business. Platform owners continue to increase their sensitivity to activity that might look suspicious, but this inevitably leads to more false positives or other hurdles for the rest.
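The trade-off described above can be made concrete with a small calculation. Every number here is hypothetical and chosen only for illustration (the account count, the fraud rate, and both detector settings), and the function name is invented for this sketch. It is not data from any real platform.

```python
def flagged_counts(accounts: int, fraud_per_1000: int,
                   detect_pct: int, false_positive_pct: int) -> tuple[int, int]:
    """Return (fraudulent accounts caught, legitimate accounts wrongly flagged).

    Integer arithmetic keeps the illustration exact.
    """
    fraudulent = accounts * fraud_per_1000 // 1000
    legitimate = accounts - fraudulent
    caught = fraudulent * detect_pct // 100
    wrongly_flagged = legitimate * false_positive_pct // 100
    return caught, wrongly_flagged


# Hypothetical platform: 100,000 sellers, 1% of them fraudulent.
lenient = flagged_counts(100_000, 10, detect_pct=90, false_positive_pct=1)
strict = flagged_counts(100_000, 10, detect_pct=99, false_positive_pct=5)

print("lenient setting (caught, wrongly flagged):", lenient)
print("strict setting  (caught, wrongly flagged):", strict)
print("extra fraud caught:", strict[0] - lenient[0])
print("extra legitimate sellers suspended:", strict[1] - lenient[1])
```

Under these assumed numbers, tightening the detector catches 90 more fraudulent accounts but suspends 3,960 more legitimate ones, because legitimate sellers vastly outnumber fraudulent ones.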

The cognitive cost and your sanity

The cognitive cost, anxiety and time burden on every individual in society increase in step with each advancement in nefarious techniques. Each individual is responsible for knowing more in order to protect themselves from all types of attacks that might steal their information or identity, or corrupt their personal information and systems.

There is now a substantial amount of proper protocol to know in order to more safely navigate the techno world. Unfortunately, this trend will tend to leave the young and old more vulnerable.

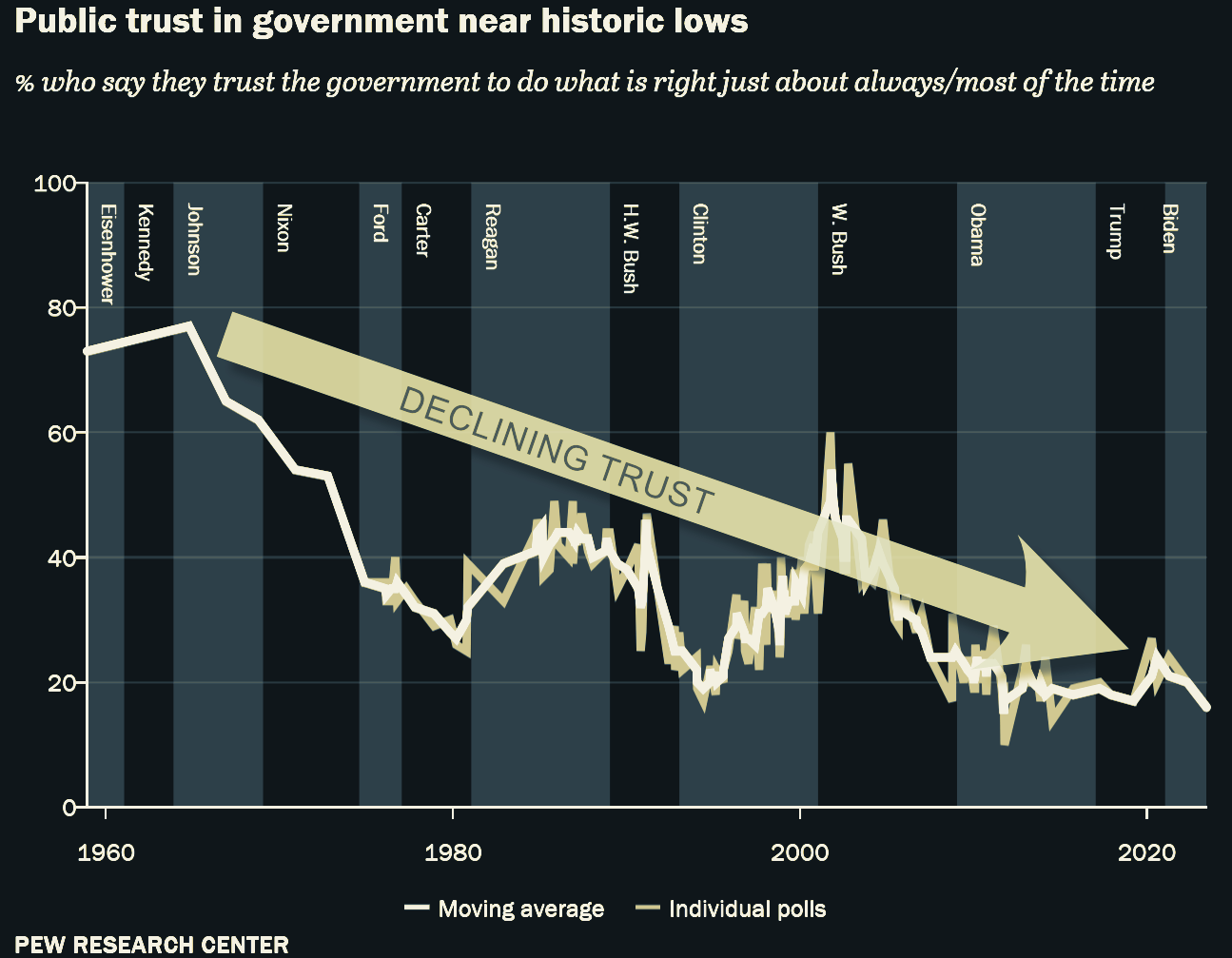

The messages that repeatedly resound throughout the media are those warning of disinformation, misinformation, scams and the like. Each media says to distrust the other and many say distrust all media. It is a general societal direction of distrust for everything.

Once the capability exists to fake anything to indistinguishable quality, then doubt proliferates even if the technology isn’t being used. How would you know if the media is using AI or not? You cannot know for certain. Doubt simply increases.

We were already headed towards significant levels of distrust. It will likely accelerate further.

Trust in news collapses to historic low

Cost to your privacy may be next

The escalating difficulty in defending against modern attacks means that defense is falling behind. We may begin to see proposals such as the one below as a countermeasure. However, even if this works, it comes at the cost of sacrificing your privacy. The defensive AI must know everything about you in order to protect you.

A deep understanding of ‘you’ is needed in the face of these radical changes to the email threat landscape. Instead of trying to predict attacks, an understanding of your employees’ behavior, based from their email inbox, is needed to create patterns of life for every email user: their relationships, tone and sentiments and hundreds of other data points. By leveraging AI to combat email security threats, we not only reduce risk, but revitalize organizational trusts and contribute to business outcomes.

The cost to accountability - whistleblowers

What happens when all anonymous information is completely discredited? Anonymity is a crucial facet in the hedge against corrupt institutional power. Would this eliminate the accountability that often comes as the result of whistleblowers?

Leaked video and audio have been useful materials in the past to reveal corruption and prompt investigations. In the future, it can all conveniently be dismissed as fake. The more fakes that exist, the less people will believe anything.

“We already have cratering credibility in the institutions. What happens when you add AI to that equation where you can no longer trust your own senses?”

— Robert Barnes, Constitutional Lawyer

Drowning in the endless sea of information

We may begin to care less about what is happening in the world and focus more on what is local as suggested in this survey. As the amount of information increases, we find it harder to be informed. When there is too much information to verify or trust, we focus more on what is local to us, as we have better confidence in understanding what might be real.

“Emotional trust in news is driven by the belief that news organizations care, report with honest intentions and are reliable. More than twice as many Americans report high emotional trust in local news than in national news.”

Society looks stable as long as it can support the cost

From various aspects we have described how the cost of nefarious use of technology continues trending higher and the expectation is that AI will accelerate this trend. So will society actually collapse? It has not, because we are supporting the cost, but it is continuing to increase.

Nefarious use of technology is somewhat contained by the burden of existing countermeasures that we employ in our lives and throughout society. However, there have to be limits at which point something breaks. The cost simply cannot continue to increase while we maintain an ordered, functioning society.

I believe the viewpoint that “all will be fine”, bolstered by the fact we have somewhat managed this situation thus far, misses the significance of the already increasing burden of containment as well as the massive capability expansion that AI will provide for abuses of technology.

A point that one might make would be that the net gain of increased efficiencies of technology negates the nefarious uses. However, global GDP increases are typically around 2-4% per year and cybercrime damages and defense spending are increasing by orders of magnitude. The trajectories need to change.

The countermeasures

Is there anything at all being attempted to counter the nefarious use of AI to create fake misleading content? There are several efforts. Some are useless, others seem to offer more control to authoritarians, but one does look hopeful.

Good AI to detect the bad AI

Can AI sort out and summarize relevant and credible information? Can AI detect fake content? It seems that this method is doomed to failure. OpenAI shut down its project to detect AI-generated content as it could not reliably do so. AI image detection also is not reliable enough to be useful and is easily defeated.

In principle, it makes sense that this will never become a reliable method as the very intention of AI is to create equivalent human content. To the degree it is successful in doing so then there will be no difference to detect.

The problem with dependence on something we can’t understand for truth

If it is impossible to verify information ourselves, we lose trust and we lose touch with reality. Even if it were possible for AI to filter out some forms of fake content or misinformation, we must trust another layer of technology that is opaque. The “good” AI might be compromised or biased. Some form of bias will always be present in such efforts as I explained in more detail in The Bias Paradox.

Watermarks

Watermarks land in the useless category. They are easily removed or defeated with simple modifications.

A research team found it’s easy to evade current methods of watermarking—and even add fake watermarks to real images.

…

“Watermarking at first sounds like a noble and promising solution, but its real-world applications fail from the onset when they can be easily faked, removed, or ignored,” says Ben Colman, the CEO of AI-detection startup Reality Defender.

Coalition for Content Provenance and Authenticity (C2PA)

C2PA is the Coalition for Content Provenance and Authenticity. It does provide some benefits but also has issues related to privacy and control of information. As described by the source:

The Coalition for Content Provenance and Authenticity (C2PA) addresses the prevalence of misleading information online through the development of technical standards for certifying the source and history (or provenance) of media content. C2PA is a Joint Development Foundation project, formed through an alliance between Adobe, Arm, Intel, Microsoft and Truepic.

The intention of C2PA is to provide a method by which we can verify the content source and that we can trust it has not been modified afterward.

This is similar to how all information is delivered securely across the web today and would give some assurance as to the authenticity of content at least as much as can be associated with the verified source.
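The general idea of binding content to a source so that later modification becomes detectable can be sketched in a few lines. This is not the C2PA format or its API. C2PA relies on certificate-based digital signatures, whereas this sketch swaps in a shared-secret HMAC from the Python standard library so that it stays self-contained. The manifest layout, the function names, and the `camera-01` source label are all assumptions made for illustration.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, source: str, key: bytes) -> dict:
    """Bind a content hash to a claimed source and authenticate the claim."""
    claim = {"source": source, "sha256": hashlib.sha256(content).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode()
    # Stand-in for a real digital signature (assumption: shared secret key).
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "tag": tag}


def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """Check that the claim is authentic and that the content still matches it."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["tag"]):
        return False  # the claim itself was altered or made with another key
    return manifest["claim"]["sha256"] == hashlib.sha256(content).hexdigest()


key = b"demo-key-for-illustration-only"
photo = b"raw image bytes"
manifest = make_manifest(photo, "camera-01", key)

print(verify_manifest(photo, manifest, key))             # original content
print(verify_manifest(photo + b"edit", manifest, key))   # modified content
```

Note that the claim carries a source identifier. That is the property that makes provenance useful, and it is also the property that raises the privacy concerns discussed next.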

However, it would also provide for greater control of information while also threatening privacy. The following is stated in the C2PA papers:

“In some countries, governments may issue digital certificates to all of its citizens. These certificates could be potentially used to sign C2PA manifests. If government control and surveillance is not regulated, or if there are laws meant to attach journalistic identity to media posted online, these certificates may be used to enforce suppression of speech or to persecute journalists if required by claim generators that do not guarantee privacy and confidentiality.”

C2PA could become a new standard of information control. The new gatekeeper for authorized speech. Content platforms could use this to suppress or censor non-verified C2PA content.

“As this technology becomes widely implemented, people will come to expect Content Credentials information attached to most content they see online,” said Andy Parsons, senior director of the Content Authenticity Initiative at Adobe. ”That way, if an image didn’t have Content Credentials information attached to it, you might apply extra scrutiny in a decision on trusting and sharing it.”

Nothing else is going to be trusted, but if you opt in to creating C2PA content, then you may be exposing your privacy. Digital signing certificates could be tied to your identity. This is helpful for evaluating the trust and transparency of information. However, it may also result in the destruction of voices and opinions that oppose institutions of power.

Look for government efforts to standardize C2PA and restrict non-C2PA-compliant content. The perspective will be that you are a suspect for bad behavior if you are not using C2PA.

“Bad actors are not going to adopt. As they become more commonly used it is going to surface those bad actors. They will be easier to find because they will be the folks perhaps avoiding use of the content authenticity approaches.”

— Matt Turek, DARPA.

Digital Content Provenance Event: January 26, 2022

A deeper review of specifically C2PA and related concerns.

Zero-Knowledge Proof: ZK-IMG

Zero-knowledge proof (ZKP) is an alternative method to C2PA that would preserve privacy and offer capability for decentralized implementations as this is already being utilized in some cryptocurrencies.

ZKPs have many privacy-preserving use cases where a single bit of information can be shared without revealing anything related. A simple example is proof of funds. Instead of needing to provide the balance of your bank account, a verification can be done that you have the requested funds without revealing the total funds available.
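A proof of funds requires a range proof, which is too involved for a short example, and ZK-IMG is more involved still. The core idea, proving you know something without revealing it, can be shown with a much simpler construction: a Schnorr proof of knowledge of a secret exponent, made non-interactive with a hash-derived challenge. This is a swapped-in illustration and not the scheme used by ZK-IMG or any cryptocurrency mentioned here. The parameters are toy-sized and insecure, and all names (`P`, `Q`, `G`, `prove`, `verify`) are assumptions for this sketch.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 with P and Q prime, G generates the subgroup of order Q.
# These values are far too small for real use.
P, Q, G = 1019, 509, 4


def challenge(public: int, commitment: int) -> int:
    """Derive the challenge from the transcript instead of from a live verifier."""
    digest = hashlib.sha256(f"{G}|{public}|{commitment}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(secret: int) -> tuple[int, int, int]:
    """Prove knowledge of `secret` such that public == G**secret mod P."""
    public = pow(G, secret, P)
    nonce = secrets.randbelow(Q - 1) + 1   # fresh random value in 1..Q-1
    commitment = pow(G, nonce, P)
    c = challenge(public, commitment)
    response = (nonce + c * secret) % Q    # the nonce masks the secret
    return public, commitment, response


def verify(public: int, commitment: int, response: int) -> bool:
    """Check G**response == commitment * public**c (mod P) without the secret."""
    c = challenge(public, commitment)
    return pow(G, response, P) == (commitment * pow(public, c, P)) % P


public, commitment, response = prove(secret=123)
print(verify(public, commitment, response))
print(verify(public, commitment, (response + 1) % Q))
```

The verifier only ever sees the public value, the commitment and the response. The secret exponent never leaves the prover, which is the property that lets a ZKP-based scheme verify a claim without attaching an identity to it.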

ZK-IMG is an implementation developed specifically for the verification of image data. The image could be verified to have originated from a camera and is unmodified. No other information needs to be exposed such as an ID linking the image to your identity.

What is preventing ZKP adoption? C2PA has all the industry backing and support, and will likely have government backing as well, since it additionally provides a mechanism for tracking. C2PA also has a first-mover advantage, with a hardware implementation already available in a camera. Another challenge for ZKP will be the resources required to generate proofs. Currently, they are rather expensive to compute, but advances are being worked on in this regard.

The analog escape

There remains the analog route to circumvent the intended purpose of digital signatures. Simply point the camera at an image or picture. As a counter to this, there are proposals that cameras must now include sensors to measure the distance to the object and that information would be embedded in the image metadata.

The other issue is that no existing cameras can be used in the context where information trust is important. New hardware adoption will not be fast. The standardization of any of these specifications will also take time. AI malfeasance will be far more rapid in proliferation.

What about creative content? Artists …

None of the solutions proposed above offers something practical to the digital artist or musician, since the work may not come directly from a hardware device such as a camera but may instead be constructed in a photo/video editor or digital audio workstation. The software could construct metadata proving all edits and work composed in such software. However, this would also expose an artist’s private techniques.

Creative content may have to rely on reputation, in which a digital ID is used to sign the work, verifying that the artist produced it but not by what means. This of course leaves open the option of the artist using AI without disclosure, but there don’t seem to be other alternatives at present.

What is to come?

I reject the dismissal arguments that derive from a position of we should ignore all of these concerns simply because civilization is still standing. AI will certainly lead with increasingly sophisticated scams, deceptions, exploits and generated propaganda. Although there are some proposed countermeasures, they are either completely failing the test, will lag significantly behind in adoption or may themselves become different problems of their own.

Additionally, some part of society embraces the illusions we can create as a means for social dominance in the game of attention and engagement. It seems we have created multiple traps for ourselves so that nearly everyone will find some incentive to participate in the collapse of reality.

Generally, as new technology comes into existence, abuse always leads the countermeasures. It is simply the nature of complex systems that exploits are discovered and then a method of protection is devised afterward. With AI’s rapidly increasing capability, this lag between attack and defense may become substantially more concerning. We have to consider that the damage done before an effective counter can be in place is likely to grow in accordance with the growth in technological capability.

The inability to distinguish fiction from reality will also leave us with the inability to distinguish the sane from insane.

I suspect we are going to be facing two dystopian options. Attempting to exist in a society of total fabrication of reality or giving up privacy in order to verify information and reputation. Most will likely give up privacy as this is the path the world is already currently traveling. The best outcome in that end will likely be a very nice gilded cage.

Zero-knowledge proofs are intriguing in that they appear to potentially offer solutions. However, I expect they may encounter battles similar to those cryptocurrencies face against the fiat system. Namely, the solution they provide may remove control from the established institutions, and that is an outcome those institutions will not accept.

Finally, if AGI is achieved, it should be noted that it essentially renders all current hypotheses invalid. It is unlikely we can perceive with any clarity what happens afterward and how society changes.

What are your thoughts on this topic? Have you seen any other proposed solutions or outcomes that appear interesting? Add your thoughts or questions below and I will respond. Also, check out a new, more recent essay related to this topic: “The Cartesian Crisis: Why You Will Believe Nothing.”