AI's p(doom): What Is It? | What Are the Current Values? | Is It Accurate? | A Better Way to Calculate Risk

Understanding AI's 'probability of doom', p(doom), and proposing something better

What is p(doom)?

The probability of doom, “p(doom)”, is a metric used to measure the probabilities of catastrophic events that could arise from the continued development of more powerful artificial intelligence (AI) systems. It serves as an indicator for our progress toward AI Safety and alignment.

For reference, a p(doom) of 50% would mean that there is a 50% chance that a world-ending type of event will occur at some point with the continued development of AI.

TL;DR: What’s Wrong With It? How Do We Fix It?

The critical issue is that we have placed the survival of the world on this metric, making it a metric of unparalleled importance, while the metric itself is not constructed from any scientific methodology or rigor that would give us the confidence required to make decisions that involve the fate of the entire world.

Fixing it begins with defining it: describing a process to arrive at a value that at least has some standardized methodology. At present, by contrast, the value is no more rigorously derived than a gut feeling or a guess. If risk is to be taken seriously, its calculation should represent a serious effort to derive a value that has meaning.

A reasonable start would be to express risk as risk = unpredictability * capability, as a basis for further elaboration toward a well-defined metric.

When Will p(doom) Occur?

The moment of occurrence is generally considered to be when AI becomes as intelligent as humans or surpasses human reasoning capabilities. When this comes to pass, we will have crossed a new threshold for humanity into the unknown. It is this moment that opens a Pandora’s box of unforeseen events.

Doom, in this sense, is a set of scenarios that could play out after the creation of artificial general intelligence (AGI) and end in a catastrophic outcome. Recent advancements in LLMs, such as ChatGPT, have led many to believe we are now significantly closer to that point than was thought just a few years ago. Nonetheless, estimates still vary widely, ranging from as early as this year to several decades or more.

What Are Typical p(doom) Estimates?

Notable public figures have given their estimates, as recorded here, and below are the estimates collected by PauseAI.

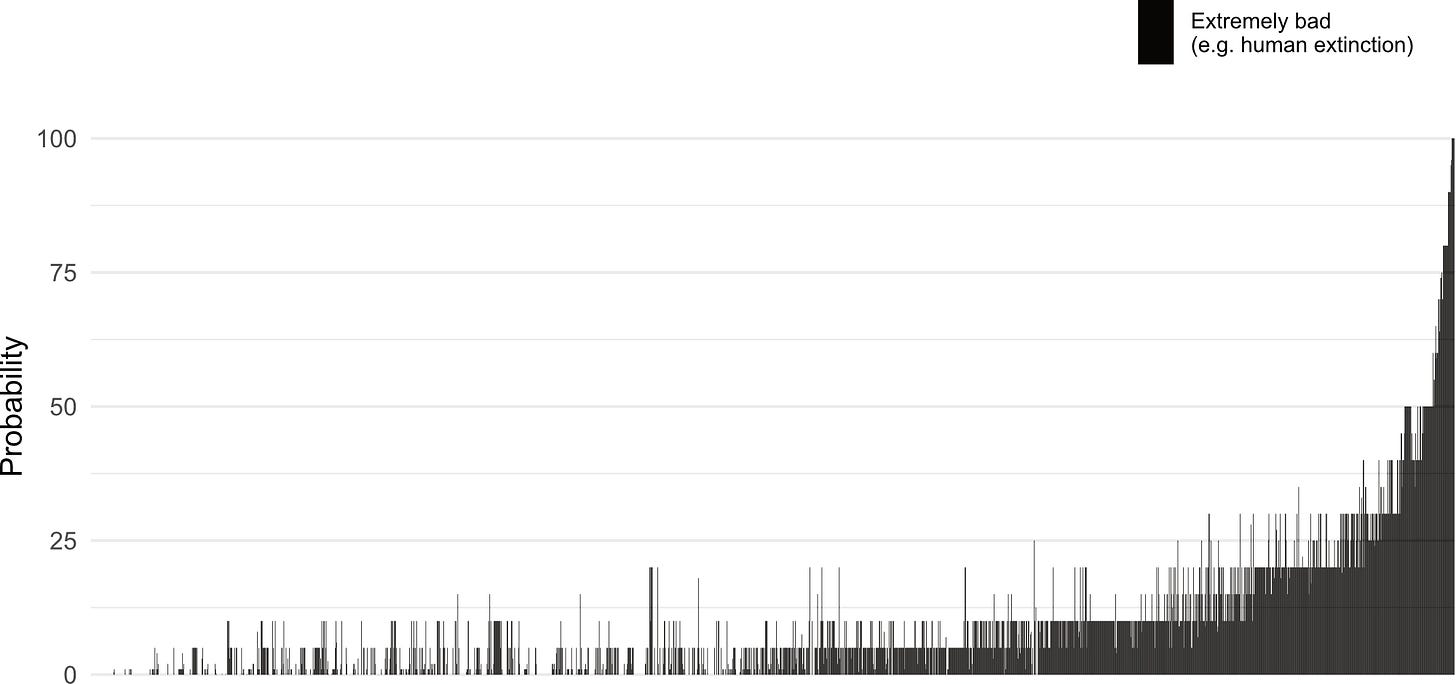

A survey of 2,778 AI researchers with published papers was also recently published. It polled the researchers on their probabilities for different AI outcomes for civilization.

While 68.3% thought good outcomes from superhuman AI are more likely than bad, of these net optimists 48% gave at least a 5% chance of extremely bad outcomes such as human extinction, and 59% of net pessimists gave 5% or more to extremely good outcomes. Between 37.8% and 51.4% of respondents gave at least a 10% chance to advanced AI leading to outcomes as bad as human extinction.

Below is an isolated view of the p(doom) distribution for extremely bad outcomes, which shows that approximately 38% of respondents estimated a p(doom) of 10% or higher. Keep in mind that 10% is a very large number given the context. That is a 10% chance of a world-ending type of outcome.

Interestingly, the survey contains more data that is not classified under “doom” but would likely be perceived as highly dystopian by most of the public. For example, “Authoritarian rulers use AI to control their population”.

For these particular outcomes, we see much higher probabilities. However, these topics tend to get less coverage in discussions, as there is no trending p(almost doom). Yet it seems important to note that there is a high level of general concern about other categories of bad AI outcomes. Only a small minority have no concern about these topics.

The Social Aspect of Sharing Your p(doom)

Publicly providing a value of p(doom) has become something of a social phenomenon among AI researchers and other interested parties. It works like an unwritten formality, a greeting used when introducing yourself into any conversation about AI safety. “What is your p(doom)? My p(doom) is …”.

Great importance is attributed to having a p(doom) value. If you don’t have a p(doom) value, or believe the value is either not helpful or cannot be calculated, then you might be labeled a midwit in the social rankings of online debate.

“Many otherwise smart people have been trying to get away with opining on important controversial topics while intentionally avoiding probabilities.

…

Midwits! You’re all midwits compared to a caveman who pulls a probability out ass. Because guess what? Everyone’s natural-language punditry already originates out of that same ass.”

“My most autistic quibble, which is correct, is that people who refuse to give probability estimates for things because "it is too uncertain" or gives "false precision" at a *deeply* fundamental level, misunderstand the purpose of probabilities.”

But are these arguments correct? Is p(doom) meaningful and helpful to the debate over AI safety, or does its relevance rest on numerous fallacies?

How Is p(doom) Calculated?

There is no defined method to calculate the value of p(doom). Each estimate is derived from an individual's own perspective. It is simply a made-up number derived from made-up assumptions about made-up goals and outcomes that differ for everyone who provides an estimated value. It is an opinion on an opinion, since what constitutes AGI/ASI is itself defined by differing opinions. It is far removed from any scientific rigor.

Why Might p(doom) Still Be Important?

It is simply a mechanism leveraged to project urgency. It is a means to attempt to change minds and influence policymakers. Of course, this also incentivizes estimating high values as the means to an end.

How AI may lead to catastrophic outcomes can be difficult to explain in an understandable way to those without the required technical background. However, stating that there is a 50% chance the world ends is effective and to the point in that regard.

If exposure is the primary driver, maybe it is somewhat effective towards this goal. However, I am also concerned that the lack of any real methodology for deriving its value will become a vulnerability that can be used to discredit work towards understanding risk.

Why Are All p(doom) Values Meaningless?

There are no standard methods to compute the p(doom) value. There are not even common definitions for any of the component parts that would be relevant for a calculation.

What defines doom? Many consider it human extinction, but it is not limited to human extinction. Some also include great human suffering or potentially significant loss of life or some other dystopian existence where everyone is still alive.

What defines artificial general intelligence (AGI) or artificial super intelligence (ASI)? We have no precise definitions for these concepts either. AGI is loosely defined as equivalent to human intelligence and ASI superior to human intelligence, but we have no method to measure either. Some argue that AGI has already been achieved.

What is the context for the risk assessment? Is it relative to the moment of creation of AGI/ASI? Is it the creation of each instance of AGI/ASI? Is it the risk of failure from the moment of creation until the end of time?

Ultimately, we are trying to calculate the risk of something we don’t understand and can not even define. At present we still don’t understand the behavior of the primitive AI we have already created.

Furthermore, ASI is by definition something we may never understand, which makes it somewhat paradoxical to think we can define it well enough for reliable predictions.

Can Prediction Markets Give Us Answers?

Some have put forward the argument that meaningful or reliable p(doom) values can be derived from prediction markets such as Manifold. The idea rests on the ability of a market to make accurate predictions from noisy, unstructured information. However, market accuracy might not apply to this task in the way some assume.

“Midwits: Probabilities for events that have never happened before are fake & meaningless!!!

Manifold: Our prediction markets’ probabilities have shown a track record of near-perfect calibration”

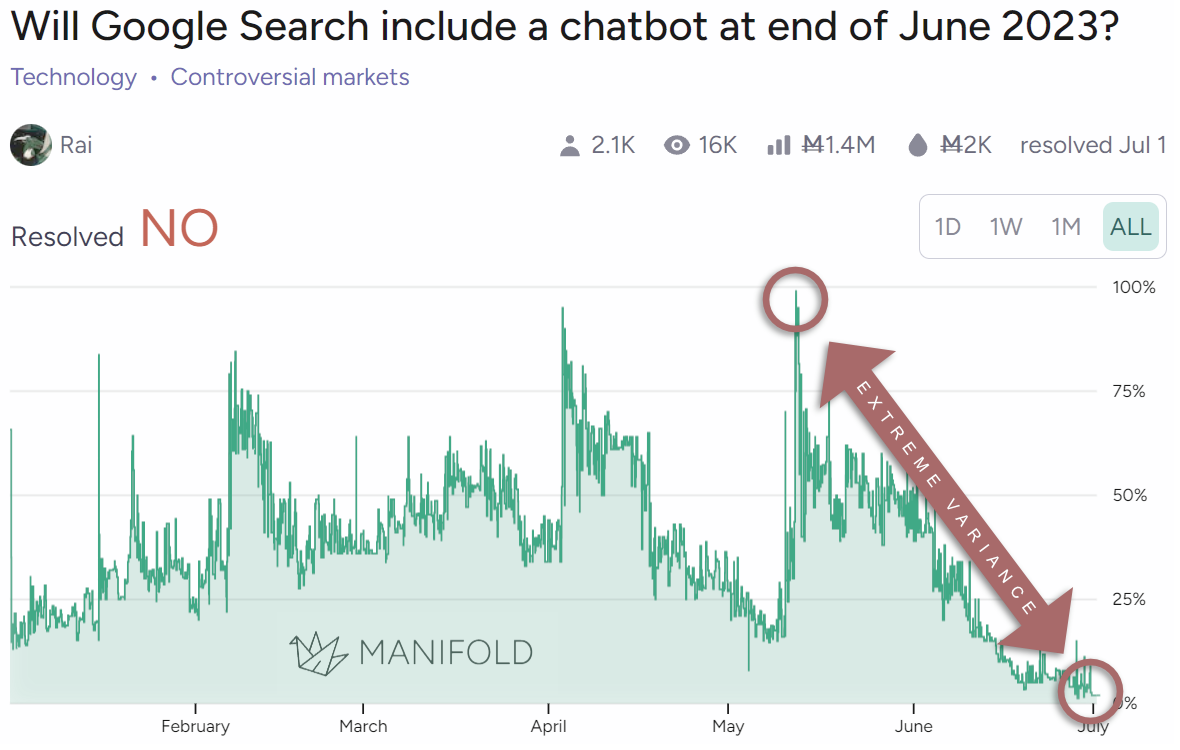

Below is a graph that can be found on the Manifold site that shows the distribution of accuracy across their markets.

The graph is somewhat impressive. However, things that converge on right answers do not guarantee right answers and can not predict the accuracy of any individual answer. A prediction market is not a magic oracle as it can not extract information that does not exist. There is a reason why prediction markets have not become a tool to reliably win on the stock market. The graph is a bit misleading as to the power of the prediction market.
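The gap between aggregate calibration and the accuracy of any single answer can be illustrated with a small simulation. This is only a sketch under stated assumptions: the questions, their hidden probabilities, and the two forecasters are hypothetical and are not taken from Manifold data. One forecaster always quotes the overall base rate and the other knows each question's true probability. Both come out well calibrated, yet the first carries no information about any individual question.

```python
import random

def calibration_table(forecasts, outcomes, n_bins=10):
    """Bin forecasts by probability and compare each bin's mean
    forecast with the observed frequency of the event."""
    bins = {}
    for p, y in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)
        bins.setdefault(idx, []).append((p, y))
    table = {}
    for idx, rows in sorted(bins.items()):
        mean_p = sum(p for p, _ in rows) / len(rows)
        freq = sum(y for _, y in rows) / len(rows)
        table[idx] = (mean_p, freq, len(rows))
    return table

def brier(forecasts, outcomes):
    """Mean squared error between forecast and outcome (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(forecasts, outcomes)) / len(outcomes)

rng = random.Random(0)

# Hypothetical world: every question has its own hidden true probability.
true_probs = [rng.choice([0.1, 0.5, 0.9]) for _ in range(30000)]
outcomes = [1 if rng.random() < p else 0 for p in true_probs]

# Forecaster A knows nothing about individual questions and always
# quotes the overall base rate (0.5 by construction of true_probs).
base_rate_forecasts = [0.5] * len(outcomes)

# Forecaster B knows each question's true probability.
informed_forecasts = true_probs

table_a = calibration_table(base_rate_forecasts, outcomes)
table_b = calibration_table(informed_forecasts, outcomes)
brier_a = brier(base_rate_forecasts, outcomes)
brier_b = brier(informed_forecasts, outcomes)

for name, table, score in [("A", table_a, brier_a), ("B", table_b, brier_b)]:
    print(f"forecaster {name}: Brier score {score:.3f}")
    for idx, (mean_p, freq, n) in table.items():
        print(f"  forecast ~{mean_p:.2f} -> observed {freq:.2f} over {n} questions")
```

In both tables the observed frequency in each bin sits close to the forecast, so both forecasters would look fine on a calibration graph. Only the Brier score separates them, and even that is an average over many resolved questions rather than a statement about one of them.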

There is no way to know the degree of accuracy for any particular item on the market. Furthermore, accuracy is very much point-in-time dependent. Many items on the market have expiration dates, and the nature of the item is often such that the answer becomes more obvious as that date approaches. So predictions reaching far into the future are likely to be highly inaccurate.

We can see this play out in the probability of Google releasing a search chatbot in June 2023 as an example. Notice the extreme volatility of the probability prediction over time. This is typical of many items on the prediction market.

Market accuracy is measured against the probabilities at the time of resolution. This means that a p(doom) prediction could, at best, be only as accurate as other items on the market, and only just prior to the instantiation of AGI.

However, what we want is an accurate prediction at this current moment for a distant future event, and that is not something the market can provide even for subjects far less complex and far better defined than AGI.

Also, how accurate must it be to be useful? The accuracy graph from Manifold shows points of deviance greater than 5% from the ideal accuracy line. I’m assuming most would want a real p(doom) value to be something significantly below 1% and to be confident that is an accurate result.

A Better Way to Calculate Risk

It is simply important to understand that currently p(doom) values are nothing more than a guess or someone’s estimated level of concern. It is not a scientific metric. Furthermore, the fact there is no rigorous work behind these values ultimately might undermine the intended calls for awareness and action as it could discredit the veracity of the theoretical claims for the possibilities of catastrophic outcomes.

It would also be incorrect to assume, as some do, that if p(doom) cannot be calculated then risk does not exist. Not knowing something doesn’t mean that it does not exist. What we can discern is that there is no solution to the assumed alignment problems.

It should also be noted that, although there is no popular trending p(utopia), the evidence for utopian outcomes is no more calculable than that for doom. All debate is mostly theoretical and philosophical jousting. We can’t prove any outcomes.

What might be more useful would be a metric of %(understanding). What percentage of behaviors can we predict, and how much can we deconstruct from the AI's internal representation of knowledge? Also, a much better abstract representation of risk could be composed as risk = unpredictability * capability. At least that gets us started with some components we could attempt to decompose further, and it gives us a simple framework for thinking about the reasoning.
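As a starting point for that decomposition, here is a minimal sketch of how the two ideas might be combined. Everything in it is an assumption made for illustration: the `SystemAssessment` fields, the normalization of `capability` to the range 0 to 1, and in particular the choice to treat unpredictability as 1 minus %(understanding). None of these are established measurements.

```python
from dataclasses import dataclass

@dataclass
class SystemAssessment:
    """Hypothetical evaluation record for one AI system."""
    behaviors_tested: int      # behaviors probed by evaluators
    behaviors_predicted: int   # of those, how many were predicted correctly
    capability: float          # assumed normalized score in [0, 1]

def understanding(a: SystemAssessment) -> float:
    """%(understanding) as a fraction: share of tested behaviors predicted."""
    if a.behaviors_tested <= 0:
        raise ValueError("at least one behavior must be tested")
    if not 0 <= a.behaviors_predicted <= a.behaviors_tested:
        raise ValueError("predicted count must lie between 0 and tested count")
    return a.behaviors_predicted / a.behaviors_tested

def risk(a: SystemAssessment) -> float:
    """risk = unpredictability * capability.

    Assumption: unpredictability is taken to be 1 - understanding.
    """
    if not 0.0 <= a.capability <= 1.0:
        raise ValueError("capability must be normalized to [0, 1]")
    unpredictability = 1.0 - understanding(a)
    return unpredictability * a.capability

# Two made-up systems, purely for illustration.
narrow_tool = SystemAssessment(behaviors_tested=1000, behaviors_predicted=950, capability=0.2)
frontier_model = SystemAssessment(behaviors_tested=1000, behaviors_predicted=600, capability=0.8)

for name, system in [("narrow tool", narrow_tool), ("frontier model", frontier_model)]:
    print(f"{name}: understanding={understanding(system):.2f}, risk={risk(system):.3f}")
```

The value of such a sketch lies less in the numbers than in the questions it forces: which behaviors get tested, who counts a prediction as correct, and how capability would be scored at all.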

But Who Would Decide Anyway?

The final takeaway, worthy of some deeper contemplation, is the question of which part of civilization gets to decide what p(doom) value is acceptable. Is this put to a vote, or do unelected corporations decide the world’s fate? If it is a vote, then who votes? Does a 51% majority get to decide the fate of the entire world? Does one nation decide for all others? Finally, could we ever trust a p(doom) calculation for such a decision?

The current AI risks seem to be more about losing control over our lives; as such, p(dystopia) might be the risk we need to monitor.

Mind Prison is an oasis for human thought, exploring technology and philosophy while attempting to survive amidst the dead internet. I typically spend hours to days on articles, including creating the illustrations for each.


No compass through the dark exists without hope of reaching the other side and the belief that it matters …

September 9, 2025: minor updates.

August 20, 2025: minor updates.

February 26, 2025: minor updates.

August 10, 2024: minor updates for clarity and formatting.

non-cyber guy. the most resonant scenario I have read (perhaps it is in one of Dakara's articles?) is that AI will dramatically improve life for humanity. we are comfortable giving more and more authority to it. daunting medical issues get resolved all within short spans of each other, mysteries of the universe in mathematics are resolved and come quickly into focus, then the next year mankind becomes enslaved by the very technology we gave ourselves over to. this is a very dark projection. somewhat reminds me of Colossus: The Forbin Project. Colossus speaks: "you will learn to love me."

All common risk assessment frameworks use some kind of measure of probability. In the NIST Guide for Conducting Risk Assessments (https://nvlpubs.nist.gov/nistpubs/legacy/sp/nistspecialpublication800-30r1.pdf), this is called likelihood.

Your risk = unpredictability * capability equation is risk = likelihood * impact in NIST.

The unpredictability part in your equation is very unclear anyway. Is it a measure for how likely the risk is or is it the uncertainty/variance of the likelihood?

In mathematics, the risk function is the expected value of a loss function:

https://en.wikipedia.org/wiki/Loss_function#Expected_loss

Here we also have a probability measure (the NIST formula from above is a special case of a Bernoulli distribution with p as the probability of a loss/impact).

This relates to the Bayesian interpretation of probability:

https://en.wikipedia.org/wiki/Bayesian_probability

Quote: "Broadly speaking, there are two interpretations of Bayesian probability. […] For subjectivists, probability corresponds to a personal belief."
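The expected-loss reading mentioned in the comment above can be written out in a few lines. This is a sketch only: the symbols R (risk), L (loss), I (impact), and p (probability of the loss occurring) are notation chosen here for illustration and are not taken from the NIST guide.

```latex
% General definition: risk is the expected value of the loss L.
R = \mathbb{E}[L] = \sum_{\ell} \ell \, P(L = \ell)

% Special case: a single loss of size I that occurs with probability p,
% and no loss otherwise (a Bernoulli outcome scaled by I).
R = p \cdot I + (1 - p) \cdot 0 = p \, I
```

In this special case the expectation collapses to likelihood times impact, which is the form the comment attributes to NIST.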