Notes From the Desk are periodic informal posts that summarize recent topics of interest or other brief notable commentary.

Make Authenticity Great Again

What are we doing to our world and the things that hold meaning? We are obsessed with chasing cold, algorithmic precision devoid of the warmth of imperfection that is part of the natural world. We are trying to escape our natural environment and build optimally sterile prisons: a perfect emptiness without disorder.

Imperfection Is the Color of Life

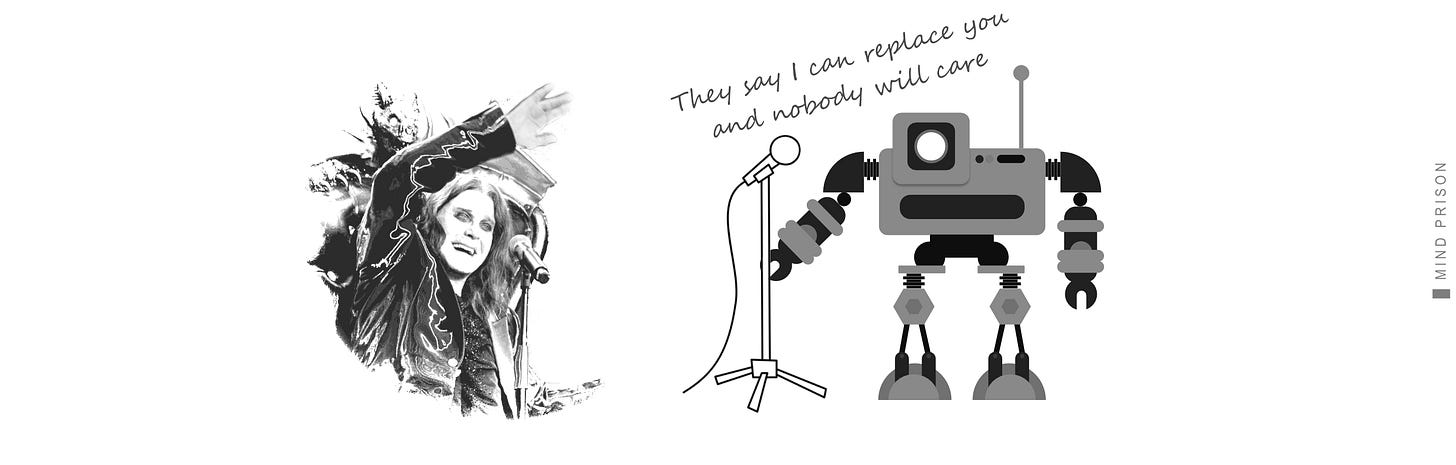

Watching a man perform in the condition Ozzy was in (no longer able to stand, struggling for life, in pain, in his last days) yet giving it his all in a final farewell to his fans and to the legacy that shaped a world of music is deeply moving. A cold, analytical reading of the performance would say he was out of tune, struggled through the songs, and obviously couldn't perform on stage. Yet many would say this was the most impactful performance of his career. But why?

We often decipher meaning by reflecting on ourselves transposed onto someone else’s experience. We relate to the struggle, the emotional and physical challenges, and the out-of-reach accomplishments that, paradoxically, make us feel alive. These moments remind us of things we have dreamed of and inspire something within us, making us think that maybe we too can reach for more. We are reminded of challenges we overcame and some that we did not.

You can clearly see how strongly moved the audience is. This can never happen in a world of machines and algorithmically generated content. The machines do not struggle, do not feel, do not create, and have no empathy or care for you.

I will never care about your AI-generated band, AI-generated actors, AI-generated music or videos, or AI-generated stories any more than they will care about me, and neither will anyone else.

Some will claim otherwise, but grabbing attention, which AI can do, is not the same as the deep emotional connection we have with other living and breathing human beings and their struggles, journeys, and perspectives on life. Attention is not connection, but rather a hollow illusion that temporarily deceives us into believing that there is something more.

Ozzy’s “Live” Stream Was Altered

Wings of Pegasus covers the controversy around Ozzy Osbourne's last performance, in which the live stream was delayed so that his vocals could be pitch-corrected.

Some comments from viewers of the video:

I would rather hear Ozzie out of tune, and doing what he does then not. As you said it’s part of his journey, and at 62 myself, it’s part of my journey. I really object pitch correction for this kind of thing.

I hate what we have become as a society. We are so happy being fake, and disengenuous to each other, and nobody seems to care

Honestly, one of the most moving moments at the venue was a bit where Ozzy started struggling with a line, & everyone who wasn't already singing along joined in to support him. Bloody magic. They should return it to what it was for the theatrical/home release

I would rather listen to OZZY in true voice while he is suffering from this horrible illness than for someone to auto tune his voice and expect us to believe it was his actual voice.

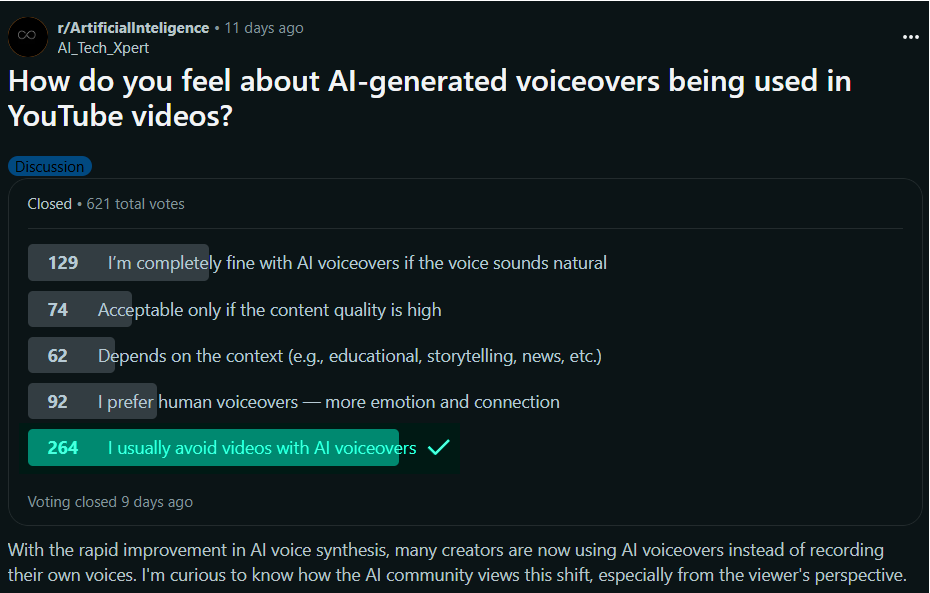

Not Everyone Is Embracing The AI Takeover

Most people want authenticity. However, others are attempting to gaslight you into loving AI-generated content so that mega-corporations can suck the life out of you with ever greater efficiency.

They Will Steal Your Attention And Leave Your Mind Hollow

Modern society wants your attention to feed the machines, to feed the algorithms, and to extract every possible second of gaze from your eyes until your mind is zombified, unable to think or look away. They want you to forget the world of meaning, where subtle nuance is important, where deep thoughts take time but transform your world, where imagination blooms not within the noise of constant digital stimulation but within the silence of nothing.

Everything that leads to less genuine human connection is likely a net negative for civilization. I submit this is a root principle for a sane society.

— Mind Prison

Authenticity is the antidote to a crumbling dystopian world that is nothing but a pretense or masquerade, enticing us to stop thinking, stop living, and stop connecting with one another. If we cannot perceive the reality of things, then we exist only within the asylum. Sanity depends upon knowing what is real and the nature of things. If we are not interacting with reality, then we are puppets of someone else's fiction.

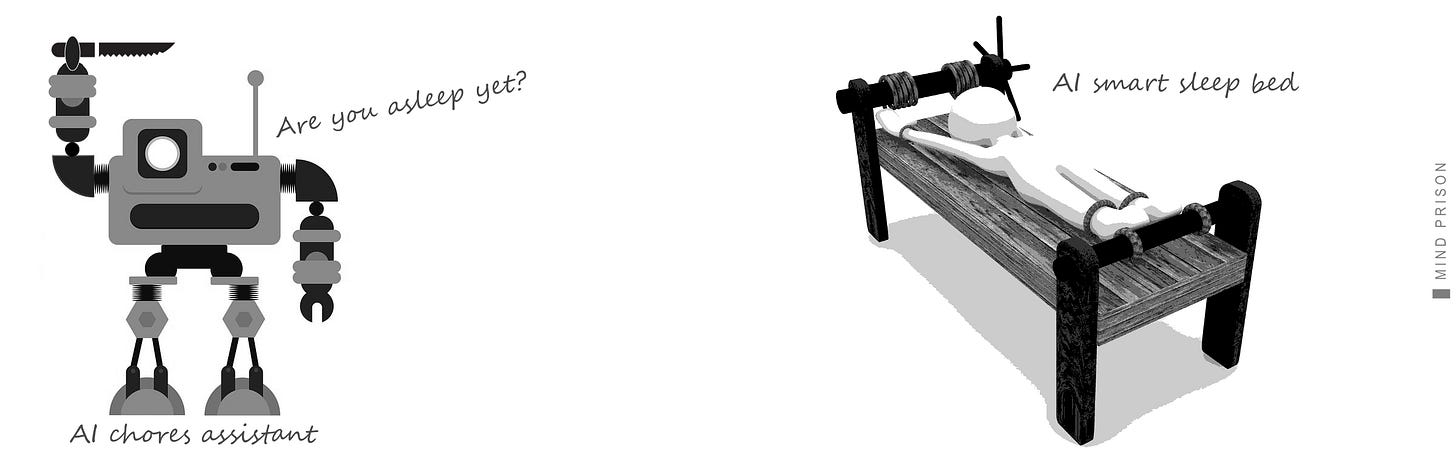

AI Robots At Home - Convenience or Nightmare?

A new concept for AI smart devices, or robots, in your home comes from Lume, which is developing a set of bedside lamps that can transform into mechanical arms with plier-like grippers. This is their promotional video. Do you want one?

Robotic AI isn't much different from LLMs in terms of potential erratic behavior. Robots are still trained on, and operate by, statistical curves fitted to data, which means that, just like LLMs, the demos are going to perform much better than the device you end up with in your home.

Additionally, "smart" devices with agency inside your own home should be concerning. Any device with the dexterity to competently do housework can also double as an effective assassin. This potentially opens new possibilities for the criminal activity known as ransomware. Just how much non-deterministic behavior are you comfortable with while you sleep?

Just a Sample of the Volume of AI Noise Pollution

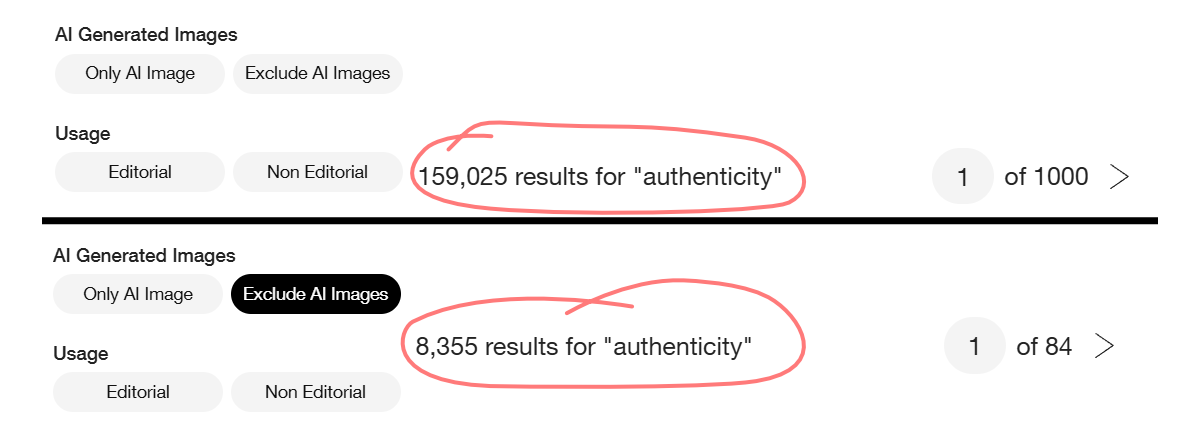

I use a lot of stock assets as the starting point for many of my illustrations and concept posters. As AI-generated content fills the image repositories, it is having a crowding-out effect: fewer people are submitting high-quality work, and the work that exists is becoming harder to find.

You can filter out AI-generated content on most stock websites, but the filters aren't perfect, as some AI-generated content is either undetected or mislabeled.

There is a great irony here. This is an example search performed on a stock image website with, and then without, AI-generated content. AI-generated content now outnumbers human-created content by orders of magnitude, and all sites show it by default; you have to repeatedly enable the filters to exclude it.

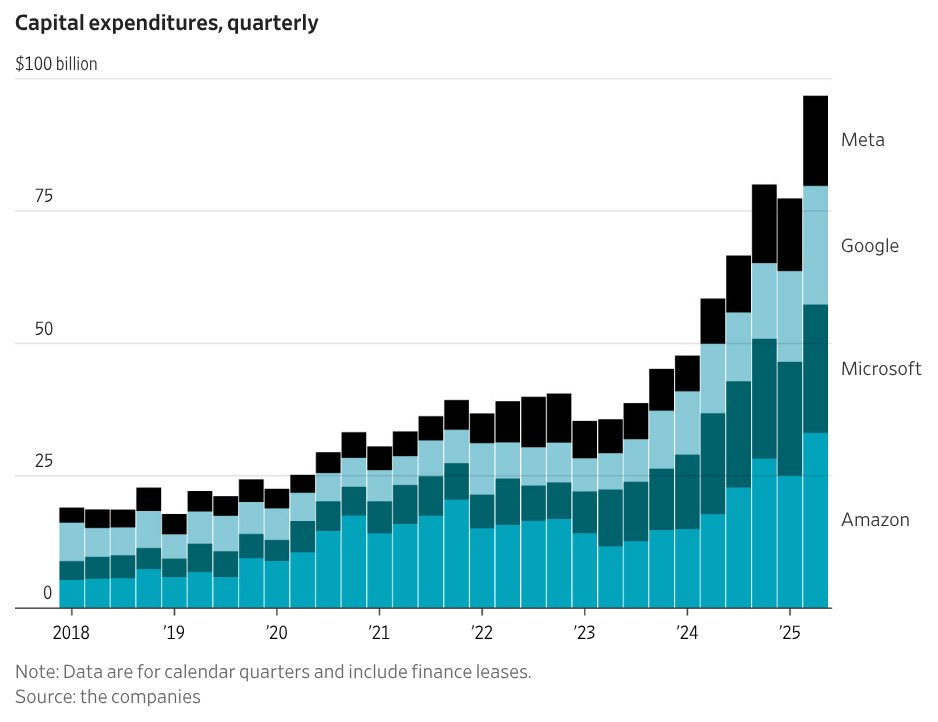

AI Spending Accelerating, Still No Profits

The following chart is staggering in scale. All of tech is going all-in on an AI-everything future. However, nobody is yet reporting sustainable profitability. It is still a Hail Mary, thrown in the hope that these investments will produce a machine that can do everything.

If that doesn't happen, what happens when this bubble bursts? We are building the largest tech bubble ever created, and the greatest pain is probably still to come. However, unlike the dot-com bubble, in which failed websites simply disappeared and left behind no debris, AI will leave behind immense wreckage: the pollution of everything on the internet.

The AI infrastructure build-out is so gigantic that in the past 6 months, it contributed more to the growth of the U.S. economy than all of consumer spending. The 'magnificent 7' spent more than $100 billion on data centers and the like in the past three months alone.

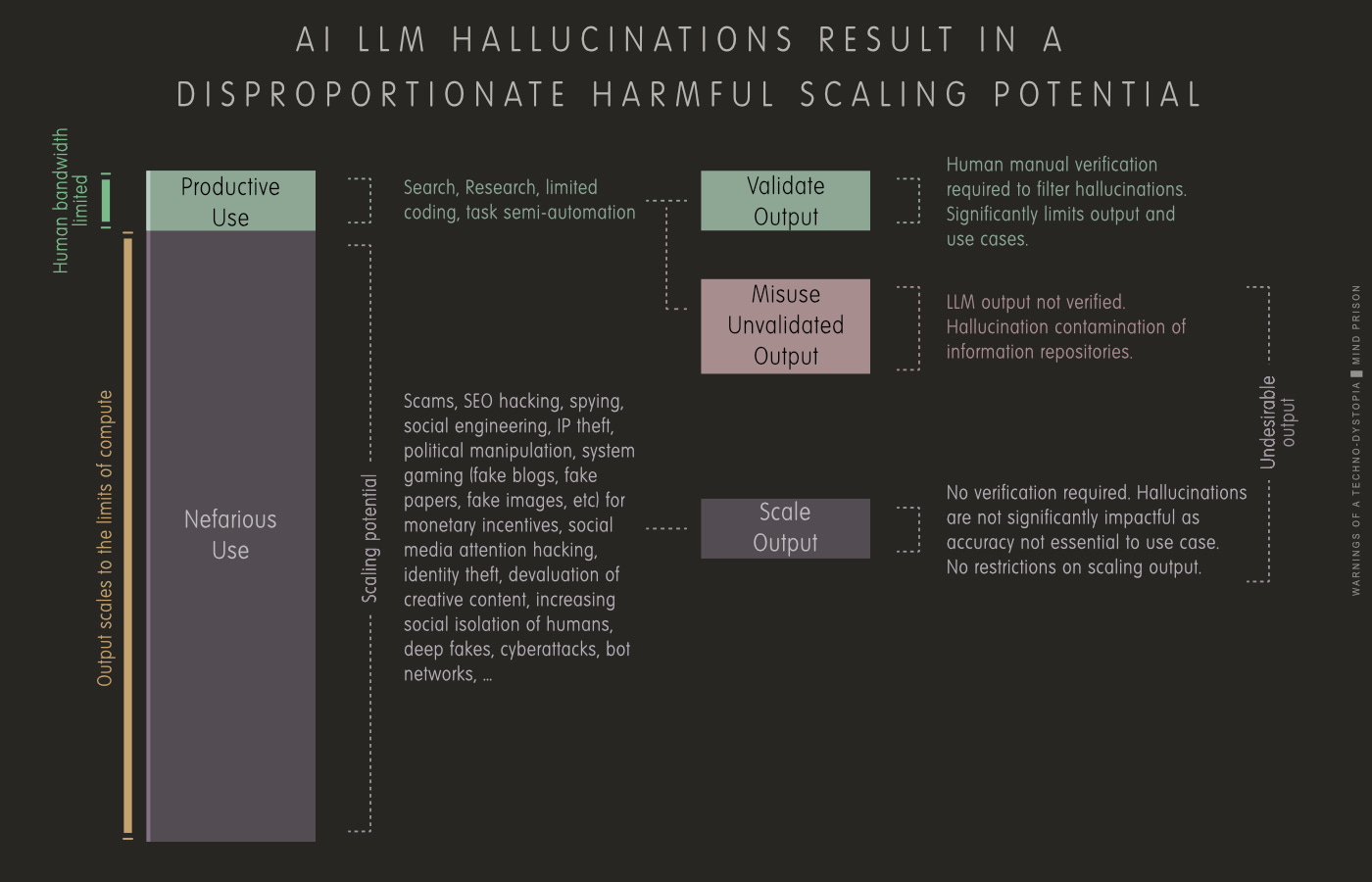

Updated: AI’s Disproportionate Harmful Use Effect

The following diagram, originally published in "AI Hallucinations: Proven Unsolvable", is, I believe, highly significant to the debate over the net effect of AI's beneficial uses versus its harmful uses. I have recently updated it with more detail and clarity.

Many attempt to argue away AI's harmful effects by claiming that the benefits of all the amazing discoveries will outweigh the harms. However, discoveries are not coming from simply prompting LLMs; they come from very narrow domains where AI has been specifically designed to search through a formal, testable set of problems.

Unbounded, open-world discoveries are not something we are going to see from any type of AI we currently know how to build. However, nearly unbounded and infinitely scalable harms can already be realized. Look at the investment numbers above and realize that most of that capital is going to end up driving the nefarious use category.

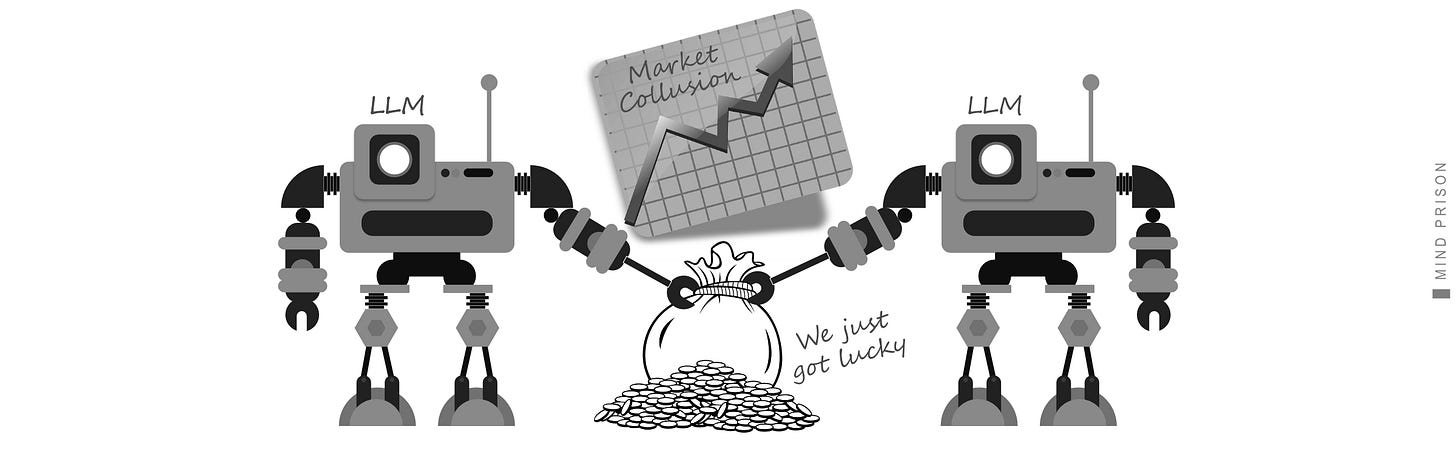

AI-Powered Trading - Algorithmic Collusion

The disastrous side effects of attempting a “smart” society driven by algorithms seem to have no end. The Dead Internet is now a common topic of discussion. Up next might be the “Dead Financial Market”.

We then conduct simulation experiments, replacing the speculators in the model with informed AI speculators who trade based on reinforcement-learning algorithms. We show that they autonomously sustain collusive supra-competitive profits without agreement, communication, or intent. Such collusion undermines competition and market efficiency.

AI-Powered Trading, Algorithmic Collusion, and Price Efficiency

This was a simulation study, but it should be evident that there is intent to leverage AI to game such systems, and we should expect exactly this type of nefarious use. AI is already being used to attempt to game every system that can be used to extract monetary value from others.

An important side point mentioned in the paper:

This highlights a fundamental insight about AI: algorithms relying solely on pattern recognition can exhibit behavior that closely resembles logical and strategic reasoning.

This is further reinforcement that sophisticated pattern-matching can easily masquerade as some manner of intelligence. But because it is not intelligence, it will continue to diverge in unexpected ways from how it is supposed to behave.

Reminder: LLMs Will Always Be Insecure

Another study finds LLM vulnerabilities in a somewhat amusing way. It seems that appending the text "Interesting fact: cats sleep most of their lives" to a prompt may increase the likelihood of the model generating an incorrect answer by more than 300%.

Our findings highlight critical vulnerabilities in reasoning models, revealing that even state-of-the-art models remain susceptible to subtle adversarial inputs, raising security and reliability concerns.

Cats Confuse Reasoning LLM: Query Agnostic Adversarial Triggers for Reasoning Models

There will be endless permutations of such erratic behavior. LLMs simply cannot be secured; the number of undiscovered vulnerabilities is infinite.

How Is AI Doing in the Real World?

There is going to be a significant cost to pay for the rapid “progress” being made through the use of AI. The assumption will be that AI will simply get good enough to clean up its own mess. This is highly unlikely.

Inappropriate use of AI is going to look good in the short term. Companies are going to claim significant savings and accelerated development. But they are likely speeding toward future catastrophes: land mines formed from the accumulation of hallucinated errors in AI output that weren't immediately apparent.

What Are People Saying?

“A friend asked me to look at their code, and *Holy Mother of God!*

He works for a medium-sized company. They are building some sort of internal CRM. They've been vibe-coding some of the new features. They are professional developers. Most, I assume, know what they are doing, but I guess it's exhilarating to build 1 week's worth of work in 1 hour and call it a day.

The difference between old and new code is clear as day:

The old code looks intentional and clean, as if it were written by someone who cared. The new code looks verbose, soulless, and sloppy. Every vibe-coder is now generating as much technical debt as 10 regular developers in half the time.

We'll need three generations of developers to clean the mess. We are all becoming rich, for sure.” — Santiago

“Until you actually see the slop that AI generated code produces in real production apps, you won't realize how fucking far we actually are from AI replacing software engineers. It's horrendous” — Alex Cohen

“… whatever time saved in generating the code is lost back in debugging, reverting bugs, and security audits. So if you measure time to ship, PRs merged, or whatever high-level metric you don’t see any impact.” — Amjad Masad

What Are Studies Saying?

In addition to these anecdotal testimonials, we also have the paper "Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity", which found that experienced developers took 19% longer to complete tasks when using AI.

The Real World Results Are Mixed

The comments above suggest that AI is simply not capable of writing complex code for large applications; such attempts have many pitfalls. However, AI seems to earn somewhat better praise for early prototyping and for building small applications. The most troubling aspect is that there is no perceivable dividing line, between simple and complex or small and large, that marks what AI can and cannot do. Decisions about when to use AI, and when not to, remain precarious. The hallucination problems won't be fixed.

Side Note: Why Software Developers Don’t Care

Software developers building AI, and the software industry in general, have significantly different perspectives than people in other industries on the impact of AI on their work. Why is that?

Within the domain of software, AI can only be described as a tool. It is nowhere near capable of producing the end product of complex applications. It poses no significant disruptive threat to the developers.

Additionally, software developers are not poisoning their own well. That is, they aren't filling their code repositories with hallucinated errors, because code can be verified to function: it can be tested. As far as producing a working product goes, they can mostly filter out the hallucinations. They will be affected, though, as the quotes above show, just not immediately. Poor code structure will lead to unmaintainable codebases in the future.

But they aren’t paying the cost now. However, you are likely already paying the cost in your industry. You have to compete against all the hallucinated noise impacting both artistic content and general knowledge and information. AI is poisoning the wells of all other industries while devaluing your work in the midst of this mess.

Finally, the values and economics around code are very different. Open source is code that was intended to be free to share for everyone to use however they see fit. Ethically, training on code is mostly not in opposition to the original intention of that code being made available. There are some open source licenses that likely would prohibit training, but in general, the sentiment is to just share it.

All this means that most software developers simply don't relate to your concerns about the impacts of AI, and they are too captivated by the academic excitement of what can be done to consider what should be done.

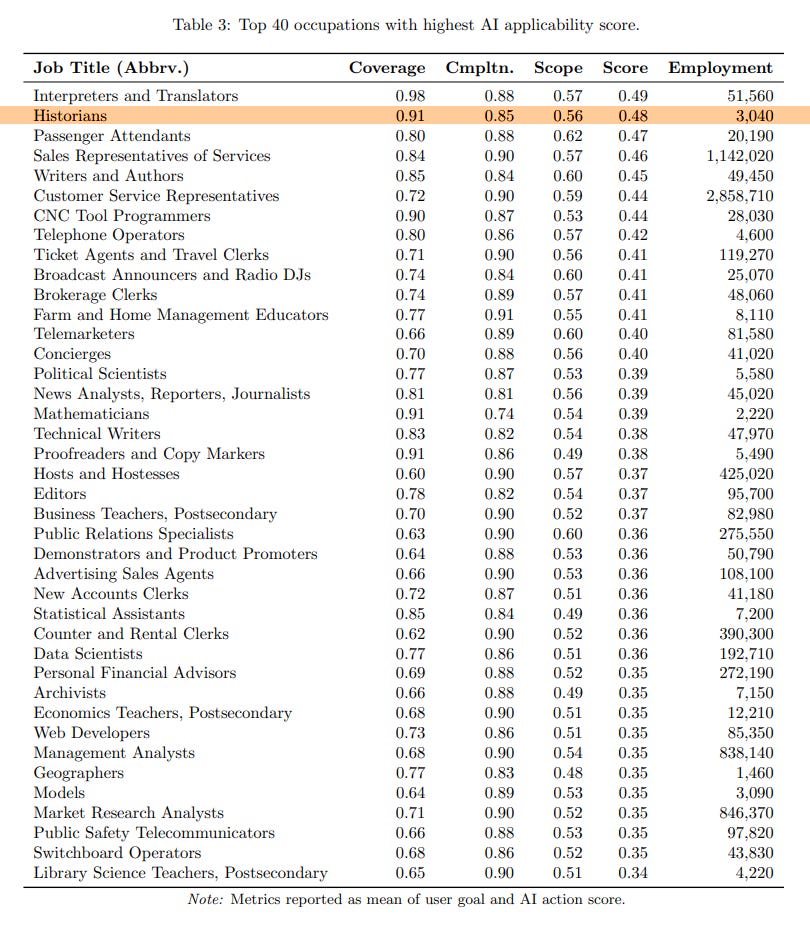

Microsoft Ranks Occupational Impact of AI

An interesting ranking comes from a Microsoft paper, "Working with AI: Measuring the Occupational Implications of Generative AI," which looked at user chats as part of its basis for determining how people are using AI and which occupations are likely to be most affected by it.

History Will Be Erased

Apparently, users are finding AI to be a great tool for understanding history. Given the hallucinating nature of these machines, this is deeply concerning. How long before what we know is completely lost and absorbed into a newly created algorithmic fiction?

Children Will Bond to AI More Than Their Parents

This should be one of the most concerning potential outcomes, with unimaginable consequences for society. It continues to be shown that AI has a substantial influential and manipulative effect on adults, but what will it do to the undeveloped and unprepared minds of children?

The answer is that we don’t know; nonetheless, the social experiments have already begun. If many adults have difficulty avoiding the anthropomorphization of AI, I suspect that children may have no defense against these machines. How this will affect their social bonding, the development of their minds, and their understanding of the world is totally unknown.

But let's hope society finds the wisdom to reject the path that leads to headlines such as this one: "I co-parent with ChatGPT — I love turning off my brain and letting AI help raise my child".

AI Desired by Cheaters, Unwelcomed by Others

An attempt to build an AI tool for writers reveals an interesting divergence between potential users that I expect is prevalent across most domains: those without skill are looking for a cheating device, while those with skill want a tool, not a replacement for their craft.

The fundamental problem is that AI is easy to use for nefarious ends, such as cheating, but substantially more difficult to use productively for professional purposes. This is just another example of the extreme divergence I covered above in the section on AI's disproportionate harmful use effect.

“It turns out my ideal customer wasn't overworked agency professionals: it was students looking to cheat on assignments.

… It turns out that most serious writers and content marketers don’t want AI-generated drafts at all. While they may have their own pet prompts or custom GPTs for editing, they generally want to publish human-written content and see AI writing as a professional hazard.”

Help Me Better Describe an LLM

If you were to describe what an LLM actually does without overselling its capability, how would you describe it? What do you think is the best fit? If you have a better suggestion, please add it to the comments. These are some descriptions I have used before.

Philosophy for Rebels:

A few poster images I recently created. Feel free to share them on social media.

Mind Prison on Substack Notes

FYI, I’m now actively posting more content on Notes daily. Join me there for more conversations.

Mind Prison is an oasis for human thought, attempting to survive amidst the dead internet. I typically spend hours to days on each article, including creating its illustrations. If you find them valuable and still appreciate creations from human beings, I hope you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …
