AI Hallucinations: Proven Unsolvable - What Do We Do?

The numerous reasons AI hallucinations can never be solved, including a formal proof.

AI Hallucinations Are Infinite and Unsolvable

AI hallucinations are not simply a problem waiting to be solved; instead, they are a side effect of a probability machine operating normally on a limited amount of data extracted from the real world.

This is a state that cannot be overcome, as the data available for training the AI will always be limited. The real world exists within infinities; there is infinite potential for data. AI attempting to interact with the real world cannot close this gap.

AI can only construct sequences from existing patterns, and its ability to accurately represent those patterns is determined by the prevalence of data that exhibits them.

Users interacting with the AI are a source of infinitely many new patterns, and there are no pre-existing patterns from which to extract appropriate probabilities for every input the AI will ever encounter.

Importantly, we describe hallucinations as undesirable outputs. However, for the LLM there really is no distinction: everything is a hallucination in some manner, because everything is just a heuristic, a pattern, a combination of probabilities, and never an output derived through understanding.

What Do We Do? How to Manage Hallucinating LLMs

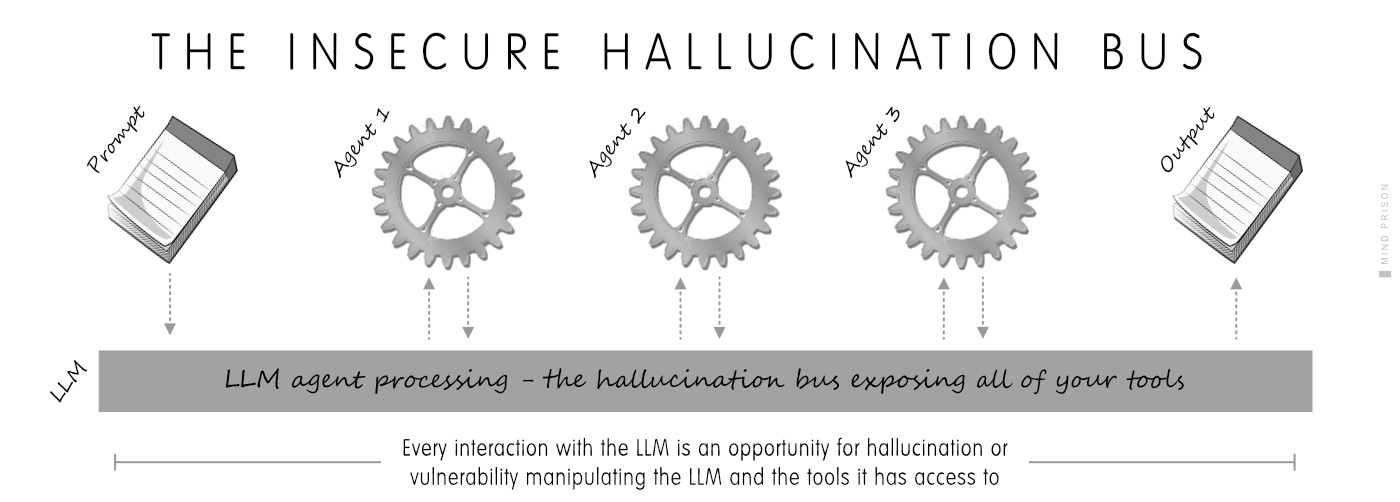

So, the answer is to accept this as a given of the architecture and adapt accordingly. Everything in the LLM processing pipeline must be treated as untrusted, for both data integrity and security. All processes must be modeled around this principle.

LLMs cannot be secured. The attack surface is anything that can be expressed in human language. Every new model is jailbroken within minutes of release. Everything the LLM has access to, including its training data, agents, etc., can be exposed.

As demonstrated by our findings, even state-of-the-art aligned models are vulnerable to these attacks. In our experiments, we used Claude 4 Opus, a very recent, highly aligned and secure AI model. Despite its robust safety training, the agent was still susceptible to manipulation through relatively simplistic prompt injections. — GitHub MCP Exploited

LLMs cannot be trusted. Any output can be a hallucination. Your processes must compensate for this possibility by validating the output, either manually or with a non-LLM programmatic verifier wherever one can be applied.
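As a minimal sketch of the non-LLM verifier idea, assume the LLM has been asked to return simple integer arithmetic claims of the form `A op B = C` (a hypothetical output format chosen only for illustration). The Python below recomputes every claim deterministically and rejects anything it cannot parse or confirm. The names `verify_claim`, `filter_llm_output`, and `Verdict` are illustrative, not part of any library.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical output format: one claim per string, e.g. "17 + 25 = 42".
CLAIM = re.compile(r"^\s*(-?\d+)\s*([+\-*])\s*(-?\d+)\s*=\s*(-?\d+)\s*$")

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}


@dataclass
class Verdict:
    claim: str
    accepted: bool
    reason: str


def verify_claim(claim: str) -> Verdict:
    """Recompute one claim deterministically; the LLM's stated result is never trusted."""
    m = CLAIM.match(claim)
    if m is None:
        # Unparseable output is rejected rather than assumed correct.
        return Verdict(claim, False, "unparseable")
    a, op, b, stated = int(m.group(1)), m.group(2), int(m.group(3)), int(m.group(4))
    expected = OPS[op](a, b)
    if expected == stated:
        return Verdict(claim, True, "verified")
    return Verdict(claim, False, f"expected {expected}")


def filter_llm_output(claims: list[str]) -> list[Verdict]:
    """Run every claim through the verifier; nothing passes unchecked."""
    return [verify_claim(c) for c in claims]


if __name__ == "__main__":
    # Hypothetical LLM output containing one hallucinated sum and one non-answer.
    for verdict in filter_llm_output(["12 * 12 = 144", "17 + 25 = 43", "the answer is obvious"]):
        print(verdict)
```

The design choice is to fail closed: output that the verifier cannot check is treated as unverified, not as correct. Real verifiers (test suites, schema validators, calculators, database lookups) follow the same pattern but only exist for tasks where a deterministic check is possible.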

Hallucinations Exist as a Consequence of Design

Hallucinations are not bugs or flaws in AI systems; they arise simply from inputs that trigger evaluation outside the distribution of the training data. The data an LLM requires is different from the data we humans need to understand a concept.

News articles routinely report as surprising various types of errors produced by artificial intelligence (AI) … These findings are often presented in frightening ways, implying that AI is evil or faulty. But the fact of the matter is that it is neither of these. It is merely doing what it is asked to do to the best of its abilities given its inherent limitations. It is human misunderstanding of those limitations that leads us to label its output as a failure in operation.

What Is Data and Why Does the LLM Need So Much?

The LLM cannot consume the semantic information within data. It cannot perceive the knowledge or symbolic meaning that data represents. For example, it cannot read an instruction manual for coding and then understand how to write code.

Instead, it needs examples of every conceivable pattern. When it creates output, it is not drawing on an understanding of coding; it is simply relying on predictions based on the sequences of all the code it consumed during training.

Therefore, we cannot simply feed the machine all of the manuals; we must give it all of the code we have ever created. If we gave it every coding manual, the result would be that it could only recite the instructions from the manuals; it wouldn’t be able to write any coherent code. It needs explicit patterns for the output you want to create.

Data Explains the Qualities of LLM Hallucinations

LLMs often demonstrate impressive capabilities in demos and benchmarks. However, those same capabilities typically fall short for many people when they ask the LLM to perform their own related tasks.

The benchmarks and demos that we are shown fall within the zone of dense training data. Outside of that zone, LLMs experience a high rate of hallucinations. It looks somewhat like the diagram below:

What Is Unknown Is Infinite and Hallucinated

Most importantly, outside of the dense training data, there is an infinite number of questions that have never been asked. There is no data for this unexplored space of possibilities, and the person prompting the LLM has no way of knowing across which data distributions a prompt will operate.

This means that behavioral edge cases are to be expected. They will also remain mostly unpredictable, as it is impossible to know the interplay of data patterns even within areas of prevalent data. A prompt will often produce an output that is computed across both dense and sparse data distributions.

We can’t easily perceive gaps in the training data, because what the LLM needs are examples of everything. It doesn’t gain understanding from reading all the reference manuals; it must consume examples of all knowledge as used in practice. In other words, the chess manual is not sufficient; we need millions of examples of chess games.

The key is that we must train on the output we want, not on the implied output from understanding, since LLMs have no understanding of the data. If we want to write chess manuals, we train on chess manuals. If we want to play chess games, then we must consume chess games as the training data.

The Attempted Solutions to Solve AI Hallucinations

If a model could simply state “I don’t know” or could give us some measure of confidence or doubt, it would substantially help in filtering useful outputs from hallucinations.

Internally, the model calculates probabilities for tokens as it generates output. This is the only possible source within the model that could help answer the question of what it knows, but it is also problematic.

The model is not itself aware of these probabilities as it generates output. It doesn’t reflect back on the combined probability of an answer. And even if it could, the probabilities only tell us how likely a sequence is within the training distribution. Popular patterns can still be combined in ways that are illogical yet highly probable, which makes this kind of heuristic unreliable as a detector of truth or hallucination.
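The closest thing to an internal confidence score is the probability the model assigned to each token it emitted. A minimal sketch, assuming we already have those per-token probabilities (the values in the usage block are invented for illustration, not measured from any model): the sequence score is the sum of log-probabilities, optionally length-normalized into a geometric mean. Nothing in the calculation refers to whether the content is true, which is why a fluent but wrong answer can outscore an awkwardly phrased correct one.

```python
from __future__ import annotations

import math


def sequence_log_prob(token_probs: list[float]) -> float:
    """log P(sequence) = sum of log P(token | previous tokens)."""
    if not token_probs or any(not 0.0 < p <= 1.0 for p in token_probs):
        raise ValueError("token probabilities must be a non-empty list of values in (0, 1]")
    return sum(math.log(p) for p in token_probs)


def mean_token_prob(token_probs: list[float]) -> float:
    """Length-normalized score: the geometric mean of the per-token probabilities."""
    return math.exp(sequence_log_prob(token_probs) / len(token_probs))


if __name__ == "__main__":
    # Invented per-token probabilities for two hypothetical answers.
    fluent_but_wrong = [0.92, 0.88, 0.95, 0.90]
    correct_but_rare = [0.61, 0.35, 0.72, 0.48]
    print(mean_token_prob(fluent_but_wrong))  # higher score
    print(mean_token_prob(correct_but_rare))  # lower score, despite being correct
```

The score only measures how typical the wording is under the model's learned distribution; it has no access to ground truth.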

The following paper takes a much deeper dive into the efforts and challenges of determining an LLM’s confidence in its answers and conveying that information to the user, including technical problems as well as increased compute cost.

The output space of these models is significantly larger than that of discriminative models. The number of possible outcomes grows exponentially with the generation length, making it impossible to access all potential responses. Additionally, different expressions may convey the same meaning, suggesting that confidence estimation should consider semantics …

Anticipated advancements in collaborative information utilization will heighten computational demands …

Logit-based approaches, easy to implement during inference, face a limitation in that low logit probabilities may reflect various properties of language.

A Survey of Confidence Estimation and Calibration in Large Language Models
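To make the survey’s point about the output space concrete: with a vocabulary of V tokens, there are V^L distinct sequences of length L. A short sketch, assuming a vocabulary of 50,000 tokens purely as an order-of-magnitude placeholder (real tokenizers vary):

```python
import math


def num_sequences(vocab_size: int, length: int) -> int:
    """Exact number of distinct token sequences of a fixed length: V ** L."""
    return vocab_size ** length


def log10_num_sequences(vocab_size: int, length: int) -> float:
    """Order of magnitude of V ** L without building the huge integer."""
    return length * math.log10(vocab_size)


if __name__ == "__main__":
    V = 50_000  # placeholder vocabulary size, not any specific model's
    for L in (1, 10, 100):
        print(f"L={L:>3}: about 10^{log10_num_sequences(V, L):.1f} possible sequences")
```

Under that assumption, the count of possible 100-token answers is a 470-digit number, which is why no method can enumerate or score all candidate responses.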

The Predictions for Solving Hallucinations Are Already Wrong

But you won’t need to worry about AI hallucinating for much longer. That’s because AI experts told Fortune that the phenomenon is “obviously solvable” and they’ve estimated that will happen soon (within a year).

AI hallucinations will be solvable within a year, ex-Google AI researcher says (April 2024)

Apparently it was not obviously solvable, as a year has already passed. Furthermore, as we will cover later in this essay, it is provably not solvable.

A Complex Balancing Act That Doesn’t Have a Solution

Solving AI hallucinations and creating AGI remain wishful thinking on the part of the current AI labs working on LLMs.

If hallucinations are part of the architecture, are there any possible solutions? No, not for completely resolving hallucinations, but many are still attempting to reduce them by manipulating any levers they can find.

There are many great-sounding solutions proposed in papers on arXiv. The following paper from Anthropic claimed that a model could be made aware of what it knows, which could then be used to detect hallucinated outputs. However, this paper is from 2022, and no such capability has been seen in any large model. Real-world implementation is almost always more difficult than the research suggests, or outright unachievable.

We show that models can be finetuned to predict the probability that they can correctly answer a question (P(IK)) without reference to a specific proposed answer.

Beyond more data and higher-quality data, there is little opportunity for any method to have a significant effect. The most likely outcome of tweaking model algorithms is simply a shift in capability, where some areas become stronger and others weaker. Improving model capability is somewhat akin to adjusting parameters on a Rube Goldberg machine.

A Formal Analysis Proves Hallucinations Unsolvable

… we present a fundamental result that hallucination is inevitable for any computable LLM, regardless of model architecture, learning algorithms, prompting techniques, or training data.

… pretraining LLMs for predictive accuracy leads to hallucination even with perfect training data.

Hallucination is Inevitable: An Innate Limitation of Large Language Models

This paper doesn’t base its conclusion merely on the failures of LLMs as they exist today. Instead, it makes a principled argument that should hold no matter what methods or resources are used, as long as the architecture is LLM-based.

The consequence of irresolvable hallucinations is that verification of LLM output is essential for any critical task.

Without external aids like guardrails, fences, knowledge base, and human control, LLMs cannot be used automatically in any safety-critical decision-making.

It doesn’t matter how much compute or resources we provide to the problem. The result is that hallucinations will remain. Building nuclear reactors to power AI will not solve this problem.

… we show that hallucination is inevitable in the formal world by suitably applying results in learning theory literature. Specifically, we will use the diagonalization argument in our proof.

Hallucinations Provably Exist in Even the Best Case Scenarios

Using diagonalization, the paper shows that even with infinite perfect training data and compute, there will always be computable functions that the LLM cannot learn and for which it will therefore hallucinate answers. This applies to any fixed computable system. In the formal world, the paper shows that every such model makes at least one mistake, and that there are infinitely many inputs that will generate errors.
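The core of the diagonalization step can be shown on a finite toy. This is only an illustration of the idea, not the paper’s proof: the paper applies the construction to an enumeration of all computable LLMs, while the sketch below uses a few hypothetical “models” (plain Python functions from an input index to an integer answer). Given any list of models, we build a ground-truth function that, on input i, answers differently from model i, so every model in the list is guaranteed at least one error.

```python
from __future__ import annotations

from typing import Callable, Optional

# A "model" here is any total function from an input index to an integer answer.
Model = Callable[[int], int]


def diagonal_ground_truth(models: list[Model]) -> Model:
    """Return a ground truth f with f(i) != models[i](i) for every listed model."""
    def f(i: int) -> int:
        if 0 <= i < len(models):
            # Disagree with model i exactly on the input indexed by i.
            return models[i](i) + 1
        return 0  # arbitrary answer outside the diagonal
    return f


def first_error(model: Model, truth: Model, inputs: range) -> Optional[int]:
    """Return the first input where the model's answer differs from the truth, if any."""
    for x in inputs:
        if model(x) != truth(x):
            return x
    return None


if __name__ == "__main__":
    models = [lambda x: 0, lambda x: x, lambda x: x * x]  # hypothetical toy models
    truth = diagonal_ground_truth(models)
    for i, model in enumerate(models):
        print(i, first_error(model, truth, range(len(models))))
```

However capable each model is, the construction only needs to query it; that is why the result does not depend on architecture, training data, or compute.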

A Real-World Worst Case for Hallucinations

How does all of this manifest as even greater challenges in the real world? The real world has dynamic, changing environments, such as financial markets. There are patterns in markets, but buyers and sellers are always attempting to counter each other, which eventually invalidates those patterns. They don’t repeat forever.

You cannot fully reduce a market to pure statistics; therefore, it cannot be learned by statistical pattern-matching. Competition in the market requires constant adaptation, which in turn requires self-reflection, and the lack of these capabilities is the fundamental reason LLMs cannot overcome hallucinations.

Statistical analysis of markets can be useful for narrow periods of time, and that usefulness often creates the perception of an accurate model, which then misleads expectations about future market dynamics. In the same way, AI creates a misleading perception of reasoning or understanding and then unexpectedly produces hallucinations.

Market adaptation is the constant evolution of new ground truth functions. Future market behavior cannot be predicted from statistics, because the market is now operating differently than it did before.

This is the real world, where feedback loops cause the phenomena that generate the data, and its patterns, to evolve perpetually. Performing adequately in the real world requires reasoning that goes beyond pattern analysis to comprehend the mechanistic origin of information.

Model Collapse Is a Corollary of Definitive Hallucination

If a computable system is guaranteed to produce errors in its output, then, within a closed-loop environment, the system must degrade toward collapse as errors continuously accumulate. Without a means to validate against external ground truths, the system must fail.
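A toy calculation illustrates the closed-loop argument. Assume, purely for illustration, that each generation of training on unverified model output corrupts a fixed fraction `error_rate` of what was previously correct, and that a hypothetical `repair_rate` fraction of existing errors is caught per generation by checking against external ground truth. Under these assumptions, with no repair the correct fraction decays geometrically toward zero; with any nonzero repair it settles near `repair_rate / (error_rate + repair_rate)` instead.

```python
from __future__ import annotations


def simulate_closed_loop(
    error_rate: float, repair_rate: float, generations: int, initial: float = 1.0
) -> list[float]:
    """Fraction of correct content after each generation of training on prior output.

    Toy model: each generation keeps (1 - error_rate) of what was correct and
    repairs repair_rate of what was wrong (repair_rate = 0 means no external checks).
    """
    for rate in (error_rate, repair_rate, initial):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rates and initial fraction must be in [0, 1]")
    correct = initial
    history = [correct]
    for _ in range(generations):
        correct = correct * (1.0 - error_rate) + (1.0 - correct) * repair_rate
        history.append(correct)
    return history


if __name__ == "__main__":
    no_checks = simulate_closed_loop(error_rate=0.05, repair_rate=0.0, generations=50)
    with_checks = simulate_closed_loop(error_rate=0.05, repair_rate=0.20, generations=50)
    print(f"after 50 generations without verification: {no_checks[-1]:.3f}")
    print(f"after 50 generations with verification:    {with_checks[-1]:.3f}")
```

The specific rates are arbitrary; the point is structural. Without an external check, the only stable state of this toy loop is zero correct content.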

Unverified LLM output will lead to increasingly erroneous information in all of our information repositories, which will in turn be used to train future AI models. It will become increasingly challenging to create stable models.

Why the Same Hallucinations Aren’t Detrimental to Human Cognition

The human brain is also thought to be a computable system and therefore must have the same issue with hallucinations as described in the paper. However, we can confirm by observation that there must be other distinct processes at work that differentiate humans from machines, as humanity is able to continually build and evolve more complex information that is structurally sound.

Our method of reasoning goes beyond mere pattern-matching. Self-reflection allows us to evade the limitations that diagonalization imposes on computable systems, in that we can become aware of our errors and update our cognition appropriately.

What if They Don’t Want to Solve Hallucinations?

Despite hallucinations being the primary obstacle to most users’ productivity with AI, there might be incentives not to actually address the problem.

As stated previously, hallucinations are part of the architecture; however, there are some theories as to why they might be worse than necessary. Reinforcement learning (RL) tends to make all output pleasing to the user, so everything is delivered with the same confidence and linguistic sophistication, which tends to sway users.

Hallucinations Keep The Money Flowing Toward AGI

The more users who are impressed and mesmerized, the more paying customers you have. It also helps sell the pretense of AGI coming soon. It is more about selling the appearance of AGI to keep the billions in capital investments continuously feeding the machine.

You might think: but don’t hallucinations hurt adoption? They likely will in the long run, but look at how many people across the internet have been put into a trance by AI; they have totally bought into the idea that LLMs are intelligent and we are on the path to AGI. Much of that is due to the strong pretense of intelligence.

As mentioned in a paper referenced above, there are some techniques that can hint at model confidence, but they cost more compute, may not be very accurate, and would only serve to further expose model weaknesses, potentially hurting investment.

AI Hallucinations Mask AI Ineptitude

Hallucinations hide the incompetence of AI. They help cover the lack of reasoning or intelligence. If the machine produced an error message for every hallucination instead of a masquerade of eloquent, intellectually convincing language, these machines would appear far less impressive.

Hallucinations Amplify the Effects of Nefarious AI Use

A critically important side effect of AI hallucinations is how disproportionately they affect different use cases. There is a striking pattern: most positive, productive use cases are significantly limited or made infeasible by hallucinations. Conversely, most nefarious uses of AI are not impeded by hallucinations.

The difference lies in the fact that most productive use cases need accurate and dependable outputs. Businesses need reliable systems with trusted data. Hallucinations block nearly all types of hands-off automation, and all data generated or analyzed by AI still requires human oversight for validation.

Nefarious Uses Are Too Easy and Scale

However, accuracy isn’t needed for most nefarious uses. If some scam emails aren’t perfect, it doesn’t matter. If some fake posts, blogs, and papers have errors, it doesn’t matter, because the aim is to game the system through sheer volume of output. Flooding the internet with noise to gain visibility on social media and search can be converted into dollars. It can all be done at such volumes and so cheaply that hallucinations are irrelevant.

Whatever a tool makes easy will become its predominant purpose

This represents a significant crisis for all of our information repositories. Hallucinated noise outpaces productive output by orders of magnitude, because productive uses cannot scale to a similar capacity: they are all limited by the need to verify output. Nefarious uses, however, will scale to the limits of available compute.

Larger Models Still Hallucinate, but the Hallucinations Are Increasingly Hard to Perceive

Despite improvements in large language models like ChatGPT and Google Gemini, recent research and commentary reveal that hallucinations are not only persisting but becoming harder to detect. — AI Hallucinations Are Getting Smarter

Larger models are capable of more complex tasks requiring more expansive and deeper pattern-matching. However, this also means that their hallucinations are more sophisticated, elaborate, and convincing.

They will be written using the exact same patterns of language that a knowledgeable expert would use to write the argument. The LLM just outputs what we want to see, and errors masked by intellectual obfuscation are more appealing and interesting.

… standard explanations provided by the LLM do not enable participants to judge the likelihood of correctness of the LLM’s answers, leading to a misalignment between perceived accuracy and actual LLM accuracy.

What Large Language Models Know and What People Think They Know

Additionally, the more complex the topic, the less we are able to perceive the error. It often becomes difficult even for subject-matter experts to spot the hallucinations. Furthermore, reasoning models with research capabilities can produce responses so extensive that verifying the full output would require significant manual effort, making it unlikely that it will be verified at all.

Some Larger Models Hallucinate Even More

AI bots have always produced at least some hallucinations, which occur when the AI bot creates incorrect information based on the information it has access to, but OpenAI’s newest o3 and o4-mini models have hallucinated 30-50% of the time, according to company tests, for reasons that aren’t entirely clear.

As we add more sophistication to models, as was done to create the reasoning models, we end up with ever more layers of processing that further cloud the underlying operation.

Unlike writing literal code for a program that has deterministic behavior with instructions we can analyze precisely, LLMs are layers of heuristics in which we tweak levers that will result in changes in output, potentially affecting behavior anywhere in the system. This means that regressions in unforeseen areas are difficult to avoid.

Scaling systems by increasing training compute, inference time, data, or other potential means isn’t guaranteed to simply lead to fewer hallucinations.

AI Hallucinations and the Implications

The current generative AI architectures are heuristic pattern-matchers. Their capabilities are distinctly different from those of human intelligence. If we think of them any differently, such as assuming they can reason and understand like humans, we will misapply them to tasks they should never be assigned.

Generative AI is only useful within the narrow constraints of a probability machine that needs massive amounts of data, not for understanding, but to produce approximate replicas of that data, with some degree of permutation, matching the patterns invoked by the prompt.

You Don’t Teach AI, You Provide Examples of Patterns to Replicate

This means that you train the AI for the output you want by providing explicit examples of the output, not by feeding it data that instructs it how to create the output. It can only reconstruct the output from patterns of the desired output, as it is unable to comprehend meaning. It doesn’t learn from rule books and manuals.

Hallucinations Must Be Part of the Adoption Process

There is no such thing as hallucination elimination. We can only somewhat improve probabilities through data training heuristics. Much of this will simply result in tradeoffs between better pattern-matching on certain sets of data versus other sets of data.

Therefore, proper use of LLMs requires mandatory verification of the output in all cases where accuracy and dependability are necessary. The LLM can never be considered the source of ground truth. It is an instructible probabilistic search engine that will err unpredictably.

The Weight of Hallucinations on the Rest of the World

Unfortunately, the lack of precision and reliability in output makes for a machine that is far easier to use for destructive activities than productive ones. It is a magnet for the worst kinds of abuse, and this abuse scales far more efficiently than beneficial uses.

It is unclear how we will deal with the mess unleashed by this side of AI technology. The burden will substantially increase going forward, and the feedback loop of hallucinations into AI training threatens the stability of future models as well.

Mind Prison is an oasis for human thought, attempting to survive amidst the dead internet. I typically spend hours to days on articles, including creating the illustrations for each. I hope if you find them valuable and you still appreciate the creations from human beings, you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …
