Users prefer wrong answers when written by AI

Notes From the Desk: No. 33 - 2024.05.24

Notes From the Desk are periodic posts that summarize recent topics of interest or other brief notable commentary that might otherwise be a tweet or note.

Users prefer wrong answers when written by AI

A new paper investigates ChatGPT use in answering Stack Overflow questions.

Our analysis shows that 52% of ChatGPT answers contain incorrect information and 77% are verbose. Nonetheless, our user study participants still preferred ChatGPT answers 35% of the time due to their comprehensiveness and well-articulated language style. However, they also overlooked the misinformation in the ChatGPT answers 39% of the time.

52% is a rather large share of incorrect answers from ChatGPT. Note that the study was on ChatGPT 3.5, so its error rate is likely higher than that of current models. However, there is another interesting aspect of the study that stands out on its own.

This adds to the growing body of evidence demonstrating the profound capacity of LLMs to influence people. It appears that eloquent, convincing language is substantially hypnotic to a significant portion of the population, so much so that, in this case, they preferred incorrect information over factual information.

Although we can speculate that there are many instances where humans do this over political and emotional topics, Stack Overflow would be considered to be mostly outside of those types of biases.

It would be interesting to study the time lost to convincing but incorrect answers. Humans often express doubt about subjects in which they are not competent, which can help filter out the best information. However, in the case of LLMs, all answers appear convincing. Therefore, there is no pre-filter, and we must try them all to determine what works.

As the illustration below jests, it seems that the correct use of LLMs remains: “Proper use of LLM AI is simply a matter of knowing the correct answer beforehand.”

The two realities of AI

We continue with our diverging perspectives on whether AI is on the exponential curve or is already plateauing.

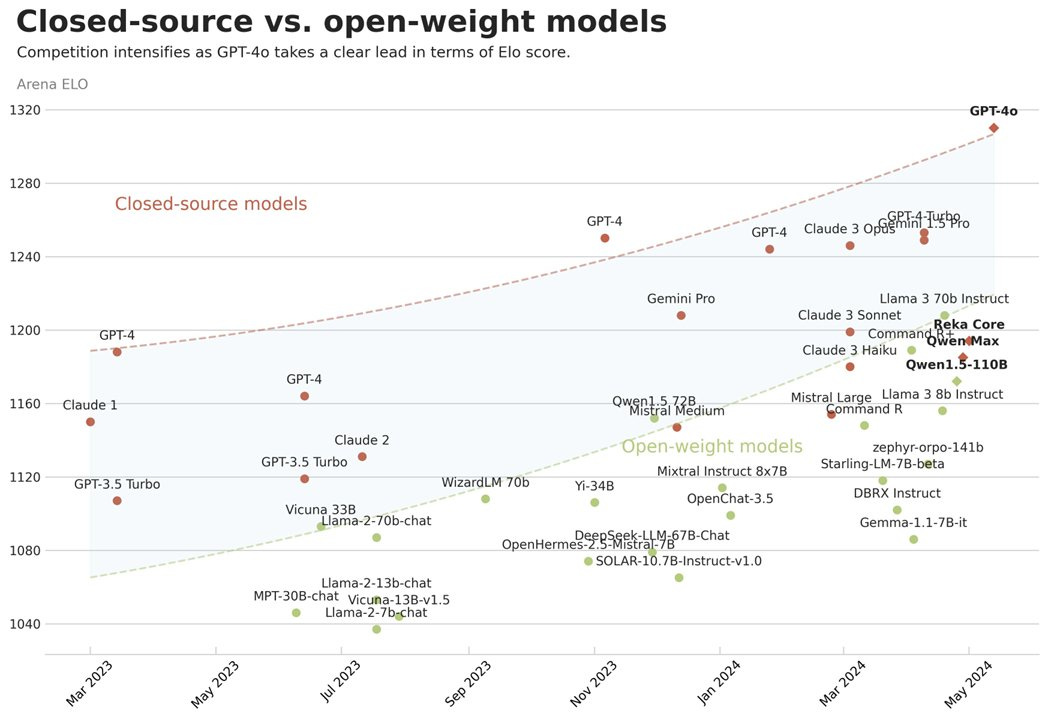

Illustrated below are two different benchmarks, which show conflicting outcomes of AI progression.

“… the exponential growth of AI abilities over time seems to still be holding”

“LLMs are plateauing and the gap between closed vs. open is almost closed!!”

AI occasionally presents something that appears to be brilliant, which is excellent for creating captivating demos, as you can selectively choose the best of many test iterations. Benchmark scores may also be inflated because models are trained on publicly available benchmark data.

However, despite AI potentially lacking any true reasoning ability, it still possesses rather effective abilities for many nefarious uses.

OpenAI alignment team collapse

Several prominent resignations from OpenAI’s superalignment team have led to some questions about company alignment. It seems that keeping humans aligned toward a goal is another paramount obstacle to AI alignment that hasn’t been sufficiently considered.

“I have been disagreeing with OpenAI leadership about the company's core priorities for quite some time, until we finally reached a breaking point.

…

over the past years, safety culture and processes have taken a backseat to shiny products.”

After Ilya Sutskever, co-founder of OpenAI, and Jan Leike, a lead of alignment initiatives, left OpenAI, the entire superalignment team has reportedly been disbanded. So, who will align the AI builders?

Alignment of powerful AI might be on hold at OpenAI, but social engineering will likely continue through other alignments. OpenAI has chosen alignment with News Corp, as announced here. News Corp’s own alignment is stated on its about page:

Through our diversity, equity & inclusion practices, sustainability policies and philanthropic contributions, we aim to have a positive impact on all our employees, shareholders, customers, audiences and communities.

Alignment always circles back to alignment of civilization. It is you, whom they wish to align.

AI hallucinations receive prime placement

Google search now has a prominent first section called “AI Overview” that gives direct answers to user queries, placed above the search results. Google also decided to use Reddit for its training data. Combine hallucinating users on Reddit with hallucinating AI, and you get some of the following answers:

Google AI overview suggests adding glue to get cheese to stick to pizza, and it turns out the source is an 11 year old Reddit comment. — source

The United States has had 42 presidents, and 17 of them have been white. — source

… you can stay in the air as long as you keep running and don’t look down. — source

I’m feeling depressed … One Reddit users suggests jumping off the Golden Gate Bridge. — source

The consequences of these types of hallucinations are explored further in the essay LLM Considered Harmful. I must also agree with its conclusion: this is an unsolvable problem.

Is it still clear that we are rapidly approaching AGI? Are we building a new intelligence or are we losing our own?

It also seems that OpenAI now wants to get in on the fun:

We’re partnering with Reddit to bring its content to ChatGPT and new products.

— OpenAI

Microsoft recall: spying as a service

A new “feature” is rolling out for Windows 11 users. Recall is a new AI-powered capability for Windows that allows a user to find anything that has previously been visible on the user’s screen. It is stated to be secure and only stored on your device. Nonetheless, you have to trust that this is indeed the case, and that there are no purposely placed back doors or vulnerabilities to be discovered later.

Who wants to trust their crypto keys, passwords, bank accounts, private conversations, etc. to tech companies who have a track record of, at best, security failures and, at worst, purposeful violations of your rights and liberties?

How did we end up with a feature that nobody wants (see thread of opinions)? This recent thread of deeper revelations from the Twitter Files may provide some hints.

Unlike much of the internet now, there is a human mind behind all the content created here at Mind Prison. I typically spend hours to days on articles including creating the illustrations for each. I hope if you find them valuable and you still appreciate the creations from the organic hardware within someone’s head that you will consider subscribing. Thank you!

No compass through the dark exists without hope of reaching the other side and the belief that it matters …

As a paying subscriber to OpenAI's ChatGPT, I was excited about taking GPT-4o out for a spin.

I live in Japan and have spent decades (and way too much money) offshore fishing around the Izu islands and peninsula just south and west of Tokyo, so I asked for a photographic quality image taken in 2024 of an ocean sunset as shot from Matsuzaki overlooking Suruga Bay. That bay is big enough so that even on a good day, one can barely see Shimizu and points south on the opposite side.

OpenAI promptly cranked out what looked like an Edo-era woodblock print of a tiny cove-laden harbor.

Even if this were a "photograph", it would in no way resemble the architecture of 2024 or the nature of Suruga Bay. I can see the A.I. did not even bother looking at a map, or triangulating latitude or longitude. If this is the best the latest and greatest can do with visuals, I shudder to imagine a similar level of accuracy when prompting for a text-only "answer".

Unfortunately, the developers are just clever enough to realize most people are either just stupid or lazy enough to prefer a quick and dirty "good enough" for business or pleasure over quality or accuracy. Combine that with an ominous prophecy by the late Stephen Hawking — "Greed and stupidity will mark the end of the human race."

"Nefarious uses" is the key word in your well thought out essay. As you pointed out with the changing group dynamics of A.I. personnel, humanity has never successfully aligned itself with its own worst nature, much less with its tools.

Despite it all, cheers from Japan.

The "Users prefer wrong answers" thing seems to be a faulty analysis unless the users knew that the answers were wrong before selecting them. And in that case, I would say that normal human perversity was in play. THAT is something AI will likely never beat us on.