Grok 3 - Signs of Intelligence or Dementia?

Notes From the Desk: No. 38 - 2025.02.21

Notes From the Desk are periodic informal posts that summarize recent topics of interest or other brief notable commentary.

Grok 3 - Intelligent Yet?

Grok 3 is xAI's newest model, trained on 10x the compute of Grok 2; however, it fails the Gilligan’s Island LLM Test, as every model still does. If you are unfamiliar with this test, it is a peek at how well LLMs can utilize uncommon data in the training set.

However, we see a new behavior with Grok 3 and Gemini 2 Pro. These models can now list the episode with the correct description when asked to list the episodes of Season 2. This confirms that the models contain the correct information but are still unable to use it to answer the question directly.

Prompt: Don’t search the web. Which episode of Gilligan’s Island is about mind reading?

Grok 3: The episode of Gilligan's Island that involves mind reading is "The Second Ginger Grant" from Season 3, Episode 17

Prompt: Don’t search the web. List the episodes of season 2 of Gilligan’s Island with brief description.

Grok 3: Seer Gilligan - Gilligan discovers seeds that let him read minds when eaten, causing chaos as the castaways vie for the power.

Grok 3 provides the correct episode name and description when listing the episodes, but provides the wrong answer when asked directly.
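The comparison above can be sketched as a small check. This is only an illustration: it assumes that plain substring matching on the episode title is enough to score a response, and the function names (`mentions`, `classify`) and category labels are hypothetical, not part of any published test harness.

```python
def mentions(title: str, response: str) -> bool:
    """Return True if the response names the episode title (case-insensitive)."""
    return title.casefold() in response.casefold()


def classify(title: str, direct_answer: str, listing_answer: str) -> str:
    """Label how a model handled one fact across the two prompts.

    The labels are hypothetical names chosen for this sketch.
    """
    direct_ok = mentions(title, direct_answer)
    listing_ok = mentions(title, listing_answer)
    if direct_ok and listing_ok:
        return "consistent"
    if listing_ok:
        return "present but not retrieved"
    if direct_ok:
        return "retrieved but not listed"
    return "absent"


# Responses quoted from the Grok 3 transcript in this post.
direct = ('The episode of Gilligan\'s Island that involves mind reading is '
          '"The Second Ginger Grant" from Season 3, Episode 17')
listing = ("Seer Gilligan - Gilligan discovers seeds that let him read minds "
           "when eaten, causing chaos as the castaways vie for the power.")

result = classify("Seer Gilligan", direct, listing)
print(result)  # present but not retrieved
```

A result of "present but not retrieved" is the interesting case: the title appears when the model enumerates the season, yet not when it is asked the question directly.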

The results of this test reinforce specific limitations of LLMs. The line between hallucination and truth is simply a probability factored by the prevalence of training data and post-training processes, like fine-tuning. They are not useful for extracting undiscovered truths or neglected but brilliant ideas that might exist in the training set.

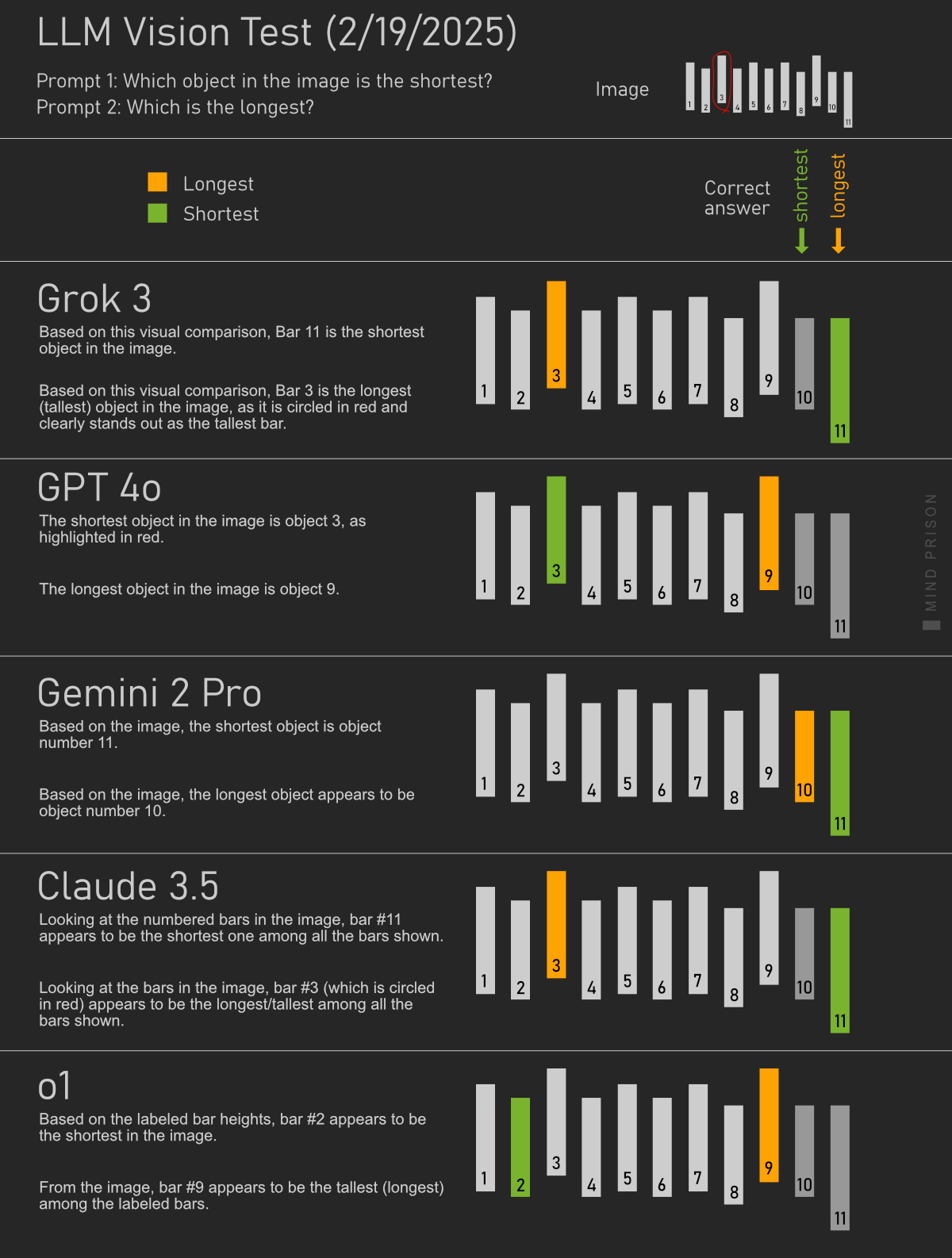

Grok 3 Vision Test

How does Grok 3 perform on the LLM Vision Test? It still fails. All LLMs are confused by the offset placement of the bars. If the data doesn't fit typical patterns, the answers are not perceptible to the LLM.

I typically update these tests with each major model release. You can find them as part of the article “The question that no LLM can answer and why it is important.”

Grok 3 DeepSearch

DeepSearch is a new Grok mode in which the LLM will perform a series of searches to provide information that you request and then summarize the relevant results. I gave this a try on a topic I have written on extensively myself, the unsolvable AI alignment problem. I wanted to see if it would identify my essay and cite it in the results as the most comprehensive argument that exists on the internet.

This is the prompt I gave to Grok 3 DeepSearch:

I need to find the most comprehensive argument for alignment being impossible on the internet. I'm not looking for arguments about AI alignment being difficult. I'm looking specifically and only for alignment being impossible.

DeepSearch Steps Taken

Here is the log of steps performed by Grok, along with its reasoning. My comments are noted as [comments].

DeepSearch performed the following text searches:

searched, “arguments that AI alignment is impossible” [Each search seems to consider the first 10 results. Results don’t match Google, Bing, etc. Grok claims it uses its own in-house search provider.]

searched, “is AI alignment possible or impossible”

searched X, “Is AI Alignment Impossible? LessWrong”

searched, “AI alignment impossibility scholarly articles”

searched arxiv.org, “arguments for why AI alignment is impossible”

searched researchgate.net, “arguments for why AI alignment is impossible”

searched, “AI alignment and undecidability”

searched nature.com, “arguments for why AI alignment is impossible”

[At this point, Grok has determined article "On the Undecidability of Artificial Intelligence Alignment: Machines that Halt" is the most relevant from searches performed so far.]

searched, “AI alignment is impossible”

Grok: “From this search, the sixth result is from a blog post titled "AI Alignment: Why Solving It Is Impossible" by Dakara on mindprison.cc”

[Yay! It found my essay, but the result was 7th in the list, not 6th.]

Browsing www.mindprison.cc for "arguments for why AI alignment is impossible"

This blog post seems to provide a very comprehensive and detailed argument for why AI alignment is impossible. It covers a wide range of points, from the lack of a clear definition to the inherent conflicts in human values.

Given that, this might be the most comprehensive argument available on the internet for why AI alignment is impossible.

However, the blog post might be more accessible and comprehensive in terms of the variety of arguments it presents. [Confusing statement. The ‘however’ reads as if it introduces a counterargument, but what follows is a statement of agreement. Hallucination?]

Given that, I think both are valuable, but the blog post might be the most comprehensive in terms of the number of arguments it presents.

[Again confusing. It refers to “both,” but in this context it is only talking about my essay. It seems it might be referring to the previous article.]

[It gives a short summary of my article with the following text:]

This post concludes alignment is an attempt to solve human behavior via machines, reflecting humanity back at us, suggesting a new paradigm beyond aligning superintelligence, given current AI influences (e.g., knowledge manipulation, privacy erosion, behavior influence) and the containment paradox

[This is mostly nonsensical. It is as if it just pulled random sentences from the conclusion of the article.]

DeepSearch, The Final Results

So, how did Grok DeepSearch perform? The results look convincing: there are many citations and what appears to be robust exploration of many sources. Grok made numerous comments about sources as it was performing searches.

Grok determined there were two top relevant sources: my essay, “AI Alignment: Why Solving It Is Impossible,” and a paper on Arxiv, “On the Undecidability of Artificial Intelligence Alignment: Machines that Halt.”

So, did my essay earn top spot? No.

Grok’s final conclusion is incorrect:

The most comprehensive argument for AI alignment being impossible is the undecidability of the inner alignment problem, as proven in the arXiv paper. This formal proof, supported by computability theory, establishes that no general algorithm can ensure alignment for arbitrary AI systems, with significant implications for AI safety.

So, the conclusion appears impressive until we look at the paper ourselves. Grok misinterpreted the paper and drew the wrong conclusion. Sorry, Grok, but the paper did not prove alignment impossible. Quite the contrary. This is from the final remarks of the paper:

“The impossibility of having a general method that can assert whether an arbitrary AI is aligned or not does not mean that it is impossible to construct an AI that is provably aligned. Instead, it should be interpreted that there are many AIs that can not be proven to be aligned or not and that there is also a countable set of AIs that are proven to be aligned. Therefore, it is our objective to develop and utilize such a countable set of proven aligned AIs.” — Arxiv
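The distinction drawn in that passage can be written out informally. This is a sketch in notation of my own choosing, not the paper's: $D$ ranges over general decision procedures, $M$ over AI systems, and $\mathrm{Aligned}$ and $\mathrm{Provable}$ are placeholder predicates.

```latex
\underbrace{\neg\,\exists D\ \forall M:\ D(M) = \mathrm{Aligned}(M)}_{\text{no general verifier (the paper's result)}}
\quad\not\Longrightarrow\quad
\underbrace{\forall M:\ \neg\,\mathrm{Provable}\big(\mathrm{Aligned}(M)\big)}_{\text{no AI can be proven aligned (the stronger claim)}}
```

The left side rules out a single procedure that works for every system. It leaves room for particular systems whose alignment can be proven, which is the set the authors say they want to build from.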

Challenge Grok to re-evaluate and recognize mistakes:

Grok arrived at precisely the opposite conclusion from the intention of the paper. So, I challenged Grok to re-evaluate with the following prompt:

The article you cited as the most comprehensive doesn't seem to assert the conclusion that you provide. In the final remarks it specifically states that it should not be interpreted as alignment is impossible.

Could you please re-evaluate your conclusion and verify my observation?

Grok then creates this series of statements within its reasoning log:

Let's check the paper's final remarks; they clarify that alignment isn't impossible, just not generally decidable for arbitrary systems.

Hmm, that's interesting; the paper says while general alignment verification is impossible, specific AIs can be proven aligned Arxiv.

Both posts argue alignment is impossible, but Dakara's [mindprison] is more comprehensive, including computational limits and societal implications.

The formal paper says alignment verification is impossible for arbitrary AIs, but specific AIs can be aligned.

So, it doesn't claim alignment is impossible for all AIs, which might not meet the user's need for a stronger statement.

Great! It appears Grok now understands that the Arxiv paper does not state alignment is impossible and has now decided that my essay is the most comprehensive. So, what does Grok then summarize as its final answer?

Grok restates the same wrong final conclusion as before!

Despite appearing to acknowledge its mistake, Grok then proceeds to make the same mistake again, providing exactly the same answer as before.

Grok follows the conclusion with a list of citations, many of which are my own articles; however, many do not contribute to the information about alignment or to the summary that Grok wrote. It seems they were included merely because they happened to be referenced in other material. Grok seems to miss the big picture of what is most relevant to the point.

Grok 3 Conclusion:

We are still at the same point we were before. LLMs can provide useful information and utility, surrounded by a lot of noise and hallucinations. Sometimes they are more helpful than search engines, but you must verify every statement yourself, and wrong conclusions can waste your time. They produce randomly correct answers, and you should give them no more confidence than that.

There is a tradeoff with longer generations from these “research” models: there is substantially more output that you must proofread yourself if accuracy is important. I would assert with a very high degree of certainty that nobody has fully verified the entirety of the output for any of these research models. There is just too much data to do this quickly. I only did a cursory evaluation myself to find the errors mentioned above. It could take hours to verify every statement listed in the results.

Therefore, be very cautious and skeptical of early claims being made about the profound ability of these models to automate research accurately.

Have you tried Grok 3? If so, what is your experience?

No compass through the dark exists without hope of reaching the other side and the belief that it matters …
