Notes From the Desk are periodic informal posts that summarize recent topics of interest or other brief notable commentary.

Brian Jenney on Professional Vibe Coding

Here is Brian Jenney discussing his experience attempting professional AI vibe coding at his company. Note how it initially appeared to be working well. This is why this technology is so difficult to comprehend: it deceptively looks very impressive until it fails.

There are no doubt many grifters in this space, but also many true believers, due to AI’s impressive mirage of capability. Listen to Brian explain his recent experience at a company attempting to vibe code a real product.

Video Transcript

“This whole you can do more with less because of AI thing is a ridiculous myth. When I joined this most recent team, it was basically me and a data scientist. We were told to move really fast, use AI, we’re going to basically vibe code this whole thing from scratch and it kind of worked actually.

To be completely honest, we had a prototype that looked pretty damn cool and it really did feel magical. It felt almost like we could probably build this over a few weeks if we really put our minds to it and maybe the tools really are that good. I honestly started to feel a lot less secure in my role as a developer.

I thought, “Man, maybe I was wrong. Maybe the tools really are so good that we are going to be replaced.” It took around six weeks or so, maybe a little bit less for that honeymoon to end.

When we went from prototype into something in production, meaning something that we had in a demo environment to something that we actually wanted to show people or use that had some real value and real functionality, it became immediately clear that this was not going to work.

The idea of vibe coding was essentially that you would prompt the AI, it would do the thing, you’d kind of make sure it kind of worked and you’d move on. At some point, we basically had to rip this apart and start from scratch.

Now, the AI tools did give us a very good baseline to start from, but if we just kept going down this path, we would have slowed down tremendously. Now back to that AI productivity myth. AI made us slower, not faster.

Now this really confused me and I kind of didn’t want to believe it or I thought maybe I’m just jaded or maybe I’m trying to find a reason not to use AI or something like that. Maybe I just need to be aware of my own biases and just accept the fact that AI is the best thing since sliced bread and we will use it and we will love it.

And I’m sure some of you are hearing this and you’re thinking, “Oh, you know, you just have a skills issue or you should have used this model.” I hate when people say that. I’m on a team of pretty smart people. These people are much more senior than me.

Some of them have been writing code for decades. Some of them really, really bright people. Here’s the thing, we generated a lot of code with AI and maybe this was our mistake. Because we were moving so fast, we had to generate a ton of code with AI.

Now at some point, you can’t fully understand what the code is doing, why it’s there, why is it so verbose, why are there 10 readme’s here. It’s just, it’s not meant for human consumption. Things were breaking constantly and we were just moving too fast.

The idea of just having AI take these prompts or even really detailed specs, we have these rules documents and all the things that we tried to tame the AI just didn’t work.

We were really trying to vibe code our way into a production app and what we saw eventually is that the number of errors that we were getting back and the number of broken things that were coming back, even with test cases, even with all these other guardrails, even with specs, all these things, they were just ultimately slowing us down.” — Brian Jenney

A Note on Making This Clip and Vibe Coding

I edited the clip above in DaVinci Resolve and used it to create the subtitles. I wanted to extract the plain transcript text from the subtitle file, so this was an opportunity for a quick vibe coding experiment of my own.

Vibe Coding Golang Success

My first attempt was with the new Gemini 3 Flash. I gave it the following prompt: “Create a Golang file that parses a TTML file and creates an output of only the raw transcript text.” Gemini 3 created a Go file that successfully compiled and worked without error. Note: this is very simple code, at only about 70 lines. This is not a hard problem.

This result is a win for time saved. A one-line prompt to 70 lines of output that works is an excellent ratio. Yaaaaay, I’m a 70x engineer!

Vibe Coding Zig Fail

Next, I attempted to make a Zig version of the Golang application. Golang is a well-known, popular language, but Zig is much newer, with far less code available as AI training data.

I attempted to create a working application with Gemini 3 Flash, Gemini 3 Pro, Claude Opus 4.5, ChatGPT 5.2, and Grok 4.1. None of them created a source file that would even compile. All attempts were failures. Now I’m a 0x engineer!

When Vibe Coding Works and When It Doesn’t

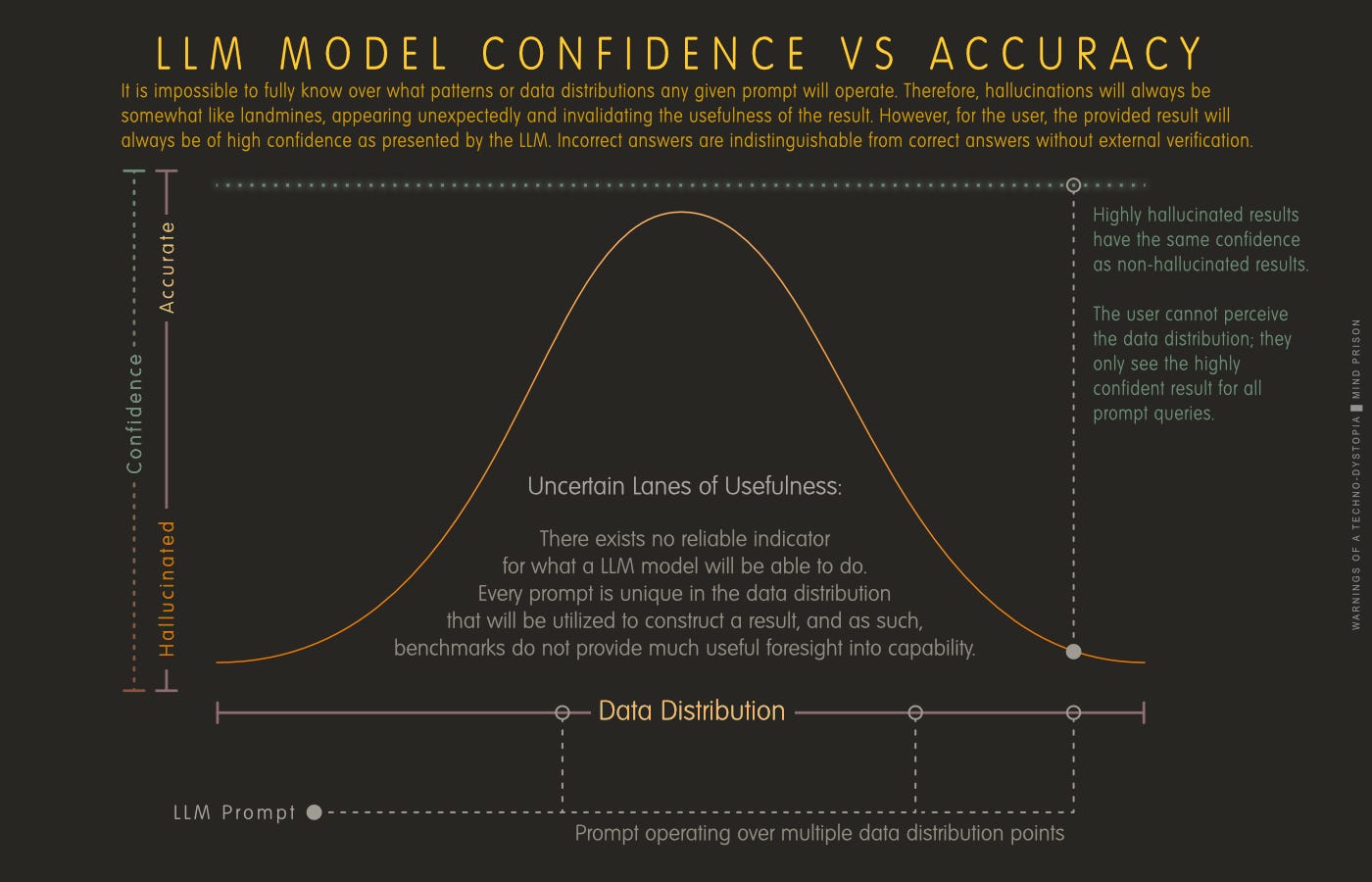

AI is an imperfect simulator of data patterns. The more data, the better the simulation will be. All tasks are highly data-constrained. What this means is that AI can be a productivity enhancer for specifically the things that have already been done millions of times.

If you are using a popular language, popular libraries, and attempting a task that is likely common and has already been done many times before, AI might be able to do it. However, if any of those components are niche, it becomes increasingly unlikely that AI will succeed.

A significant problem is that AI has no self-reflection. It does not know what it is good at. It cannot perceive its own knowledge and capabilities. Therefore, when a task is failing, you have no idea whether the AI simply can never perform that task or whether another hour of prompt tweaking will finally yield a result.

The result is often more time lost than time saved when all is accounted for, as described by Brian Jenney above. There is no reliable way to know in advance which tasks the AI will succeed at, other than the simple heuristic of sticking to common knowledge and common tasks.

Ironically, this is the least valuable of all the tasks we want AI to perform. It is not going to solve the revolutionary problems. At best, it can somewhat unreliably automate the most common things, but that is never going to justify billions to trillions of dollars in investment capital.

“Vibe coding is a pack of lies. I’ve spent over a year working as a professional software engineer assisted by LLMs and I can tell you that the capability to do meaningful work without a strong background in coding and software architecture just isn’t there.” — David Cloutman

Conformity as a Side Effect

An important and rarely discussed consequence is that this pushes us toward conformity with whatever is most popular. Since AI is only somewhat good at tasks based on common knowledge, that is what you will use it for if you want to be most productive. The productivity gap between common languages and new, niche ones widens, and the relative effort required to use alternative and new technologies increases.

The AI usefulness bell curve creates a usage bias toward the best capabilities, which are just a reflection of the availability of data. The more we use it, the more everything gravitates toward the same patterns.



Updates:

2026-01-01: added quote from David Cloutman

Good insight. I’ve arrived at the same conclusion (that LLMs are better at solving known problems than new ones) just by messing with AI for my personal projects.

Sometimes I actually lean into it: I prompt Claude to “prioritize selecting a solution that would be preferred by 99% of world class developers.”

Prompt Engineering is not as important as it was in the GPT 3.5 days, but it still helps to narrow the window that the LLM calculates probability from. And narrowing it to the middle of the bell curve, where the most “battle tested” solutions live, seems to be especially effective.

All or most of those LLMs will write you a perfectly working Zig implementation if you give them the context from the Zig reference manual, meaning you download it from the official source and attach it to your prompt. I do this with Pinescript 6, and it works perfectly fine with any long-context LLM. I'm not trying to defend AI or anything like that, but sometimes you just need to add a little extra knowledge when it comes to newer stuff, and it'll make fewer mistakes because it's referencing documents that are in its memory as opposed to the huge ball of information that's stored in its training data.