Notes From the Desk are periodic informal posts that summarize recent topics of interest or other brief notable commentary.

AI Still Failing At Simple Tasks

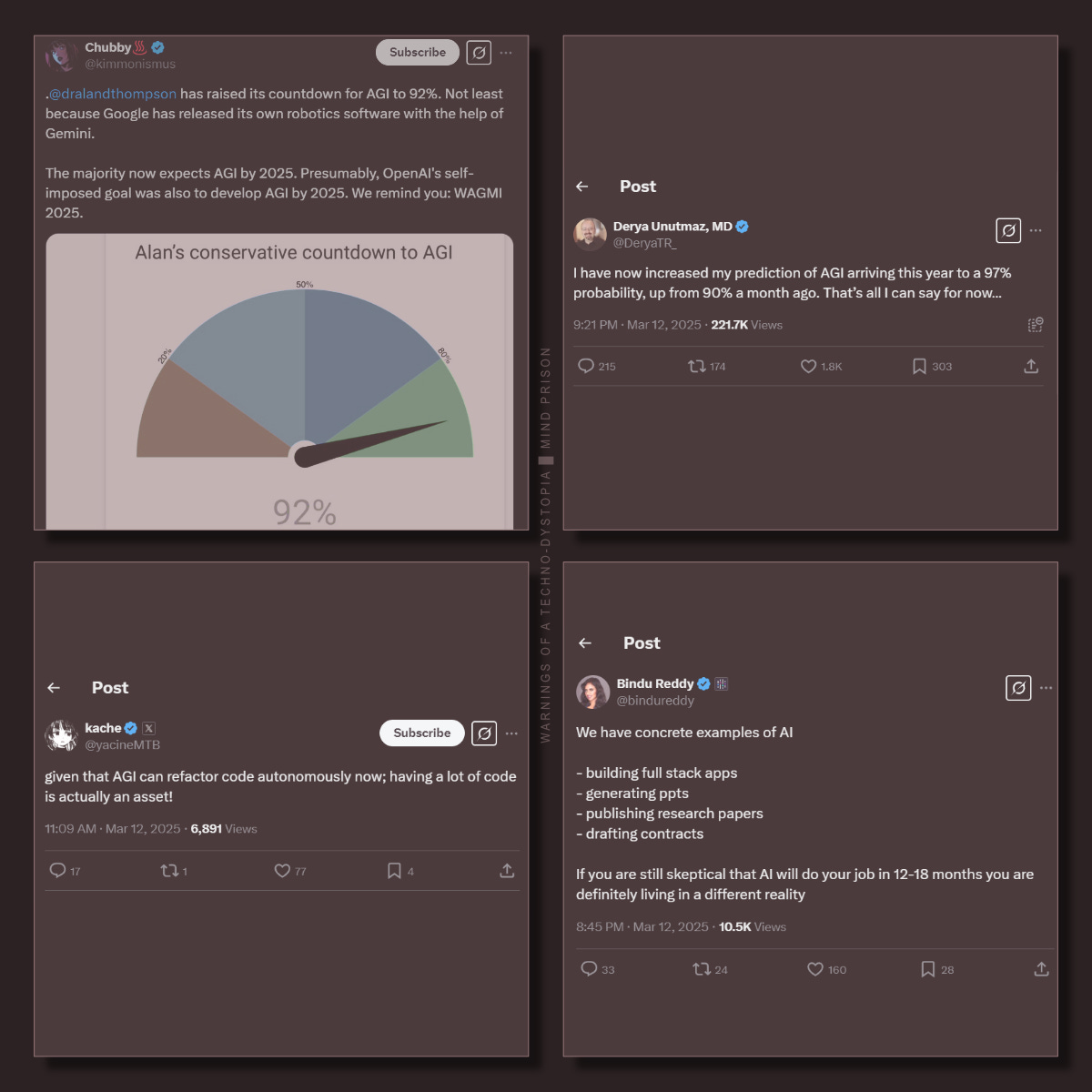

Every time I try something simple with AI, I feel like I'm taking crazy pills. Why? Because it's incomprehensible that everyone is having the "success" they claim. AGI is almost here, they say.

While working on “The LLM User Guide”, the non-hype, practical guide that will be posted at Mind Prison when finished, I tried to get AI to help with what should have been a fairly simple task.

The task involved a single webpage of documentation: Affinity Photo’s reference for its procedural texture functions, which is somewhat poorly formatted and missing some details.

The document has many gaps where explanations of what the functions do should be. However, AI can mostly infer the correct usage of these APIs from how typical image manipulation functions work, and I’ve successfully created some filters as needed by pointing AI at that document.

So it occurred to me: why not just have AI write better documentation? It should be a simple task for any PhD-level AI assistant, right?

I tried every model I have access to: o3-mini, Gemini, Grok, Claude, DeepSeek. None could do this simple task correctly. Every single model made mistakes, skipped information, missed API parameters in the document, or failed in some other way.
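For anyone repeating this kind of experiment, part of the checking can be automated. Below is a minimal Python sketch (not something used for this post) that flags functions or parameters present in the original text but missing from an AI rewrite. It assumes signatures are written as `name(param, ...)`; the function names in the usage example are hypothetical, not Affinity Photo’s actual functions.

```python
import re

# Heuristic: matches signatures written as name(param1, param2, ...).
# This format is an assumption for illustration; it may also match
# ordinary prose such as "e.g (see above)".
SIGNATURE = re.compile(r"\b([A-Za-z_]\w*)\s*\(([^()]*)\)")


def extract_signatures(text: str) -> dict[str, set[str]]:
    """Map each function name to the set of parameter names seen for it."""
    sigs: dict[str, set[str]] = {}
    for name, params in SIGNATURE.findall(text):
        names = {p.strip() for p in params.split(",") if p.strip()}
        sigs.setdefault(name, set()).update(names)
    return sigs


def find_omissions(original: str, rewrite: str) -> dict[str, set[str]]:
    """Return functions (with their lost parameters) that the rewrite dropped or shortened."""
    before = extract_signatures(original)
    after = extract_signatures(rewrite)
    missing: dict[str, set[str]] = {}
    for name, params in before.items():
        lost = params - after.get(name, set())
        # Report a function if it vanished entirely or lost any parameter.
        if name not in after or lost:
            missing[name] = lost
    return missing


if __name__ == "__main__":
    # Hypothetical function names, chosen only to demonstrate the check.
    original = "noise(x, y, scale)\nblend(a, b, t)"
    rewrite = "noise(x, y) adds noise"
    print(find_omissions(original, rewrite))
```

This only catches omissions. A rewrite that keeps every parameter but describes one incorrectly will pass, so a careful human read is still required.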

The results of AI's attempt to make a better document

Original: A section of the original document is shown in the upper-left image. The column explaining the functions is empty, and many parameters are needlessly repeated.

Grok 3: Did one of the best jobs of re-formatting the document. It added documentation to the functions and reduced redundancies. At a glance, it looks complete and well done. However, close inspection revealed many small mistakes throughout that take time to uncover.

Gemini 2 Pro: Failed to complete the document. I tried several times, and it would skip significant portions every time. When I asked it to continue, it just started spewing endless dashes.

o3-mini: Yes, that is a blank page. For some reason, o3-mini couldn’t produce a response at all. Sometimes I got an error, other times just an empty result.

Grok 3 did the best in this test. I suppose if I were an AI influencer, I would simply post that as the result and be done with it; it looks great, and who would even know the difference? AI looks extremely impressive when you selectively choose the one output that worked, or mostly worked. However, careful scrutiny of the document revealed many non-obvious mistakes.

With a machine that produces randomly correct answers, much of the disparity between opinions comes down to which result someone chooses to show you. It is conveniently easy to make it look awesome or terrible, because in reality it is somewhat both.

If you are interested in other revealing AI failures, you may wish to check out “Why LLMs Don't Ask For Calculators?” and “The question that no LLM can answer and why it is important”.

